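Diffusion Models Are Real-Time Game Engines

Authors: Dani Valevski (Google Research), Yaniv Leviathan (Google Research), Moab Arar (Tel Aviv University), Shlomi Fruchter (Google DeepMind)

ArXiv link: https://arxiv.org/abs/2408.14837

Project website: https://gamengen.github.io

Abstract

We present GameNGen, the first game engine powered entirely by a neural model that enables real-time interaction with a complex environment over long trajectories at high quality. GameNGen can interactively simulate the classic game DOOM at over 20 frames per second on a single TPU. Next-frame prediction achieves a PSNR of 29.4, comparable to lossy JPEG compression. Human raters are only slightly better than random chance at distinguishing short clips of the game from clips of the simulation. GameNGen is trained in two phases: (1) an RL agent learns to play the game and its training sessions are recorded, and (2) a diffusion model is trained to produce the next frame, conditioned on the sequence of past frames and actions. Conditioning augmentations enable stable auto-regressive generation over long trajectories.

See https://gamengen.github.io for demo videos.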

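1 Introduction

Computer games are manually crafted software systems centered around the following game loop: (1) gather user inputs, (2) update the game state, and (3) render it to screen pixels. This game loop, running at high frame rates, creates the illusion of an interactive virtual world for the player. Such game loops are typically run on standard computers, and while there have been many amazing attempts at running games on bespoke hardware (e.g., the iconic game DOOM has been run on a toaster, a microwave, a treadmill, a camera, an iPod, and even inside the game Minecraft, to name just a few; see https://www.reddit.com/r/itrunsdoom/), in all of these cases the hardware is still emulating the manually written game software as-is. Furthermore, while game engines differ vastly, the game state updates and rendering logic in all of them are composed of a set of rules that are programmed or configured by hand.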
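To make the three-step loop concrete, here is a minimal sketch of a hand-written game loop. The state, the update rule, and the text "renderer" are all hypothetical toy stand-ins chosen for illustration; they are not taken from DOOM or from any real engine.

```python
from dataclasses import dataclass


@dataclass
class GameState:
    """Hypothetical, minimal game state: a player position on a line."""
    x: int = 0


def update(state: GameState, user_input: str) -> GameState:
    """Step 2: hand-written rules that advance the game state."""
    step = {"left": -1, "right": 1}.get(user_input, 0)
    return GameState(x=state.x + step)


def render(state: GameState, width: int = 7) -> str:
    """Step 3: project the state to 'pixels' (here, a row of characters)."""
    col = max(0, min(width - 1, state.x + width // 2))
    return "".join("@" if i == col else "." for i in range(width))


def game_loop(inputs: list[str]) -> list[str]:
    """Run gather-input / update / render once per frame."""
    state = GameState()
    frames = []
    for user_input in inputs:  # Step 1: gather user input
        state = update(state, user_input)
        frames.append(render(state))
    return frames


frames = game_loop(["right", "right", "left"])
```

In a neural game engine, the hand-written `update` and `render` functions are what the learned model is meant to replace.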

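In recent years, generative models have made significant progress in producing images and videos conditioned on multi-modal inputs, such as text or images. At the forefront of this wave, diffusion models have become the de-facto standard for non-language media generation, with works like Dall-E (Ramesh et al., 2022), Stable Diffusion (Rombach et al., 2022), and Sora (Brooks et al., 2024). At first glance, simulating the interactive worlds of video games may seem similar to video generation. However, interactive world simulation is more than just very fast video generation. The generation must be conditioned on a stream of input actions that only becomes available during generation, which breaks some assumptions of existing diffusion model architectures. Notably, it requires generating frames auto-regressively, which tends to be unstable and leads to sampling divergence (see Section 3.2.1).

Several important works (Ha & Schmidhuber, 2018; Kim et al., 2020; Bruce et al., 2024) (see Section 6) simulate interactive video games with neural models. Nevertheless, most of these approaches are limited with respect to the complexity of the simulated games, simulation speed, stability over long time periods, or visual quality (see Figure 2). It is therefore natural to ask:

Can a neural model running in real time simulate a complex game at high quality?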

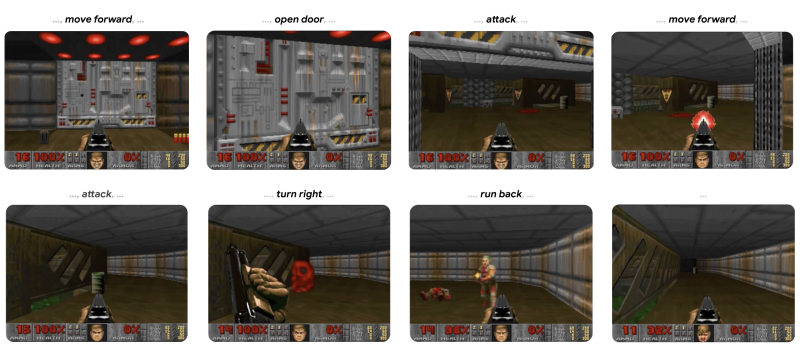

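In this work we demonstrate that the answer is yes. Specifically, we show that a complex video game, the iconic game DOOM, can be run on a neural network (an augmented version of the open Stable Diffusion v1.4 (Rombach et al., 2022)) in real time, while achieving a visual quality comparable to that of the original game. While not an exact simulation, the neural model is able to perform complex game state updates, such as tallying health and ammo, attacking enemies, damaging objects, and opening doors, and to persist the game state over long trajectories.

GameNGen answers one of the important questions on the road towards a new paradigm for game engines, one where games are automatically generated, similarly to how images and videos have been generated by neural models in recent years. Key questions remain, such as how these neural game engines would be trained and how games would be effectively created in the first place, including how to best leverage human inputs. We are nevertheless extremely excited about the possibilities of this new paradigm.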

2 Interactive World Simulation

An interactive environment $\mathcal{E}$ consists of a space of latent states $\mathcal{S}$, a space of partial projections of the latent space $\mathcal{O}$, a partial projection function $V: \mathcal{S} \rightarrow \mathcal{O}$, a set of actions $\mathcal{A}$, and a transition probability function $p(s' \mid a, s)$ such that $s, s' \in \mathcal{S}$, $a \in \mathcal{A}$.

For example, in the case of the game DOOM, $\mathcal{S}$ is the program's dynamic memory contents, $\mathcal{O}$ is the rendered screen pixels, $V$ is the game's rendering logic, $\mathcal{A}$ is the set of key presses and mouse movements, and $p$ is the program's logic given the player's input (including any potential non-determinism).

Given an input interactive environment $\mathcal{E}$ and an initial state $s_0 \in \mathcal{S}$, an Interactive World Simulation is a simulation distribution function $q(o_n \mid \{o_{<n}, a_{\leq n}\})$, with $o_i \in \mathcal{O}$ and $a_i \in \mathcal{A}$. Given a distance metric between observations $D: \mathcal{O} \times \mathcal{O} \rightarrow \mathbb{R}$, a policy, i.e., a distribution over agent actions given past actions and observations $\pi(a_n \mid o_{<n}, a_{<n})$, a distribution $S_0$ over initial states, and a distribution $N_0$ over episode lengths, the Interactive World Simulation objective consists of minimizing $E(D(o_q^i, o_p^i))$, where $n \sim N_0$, $0 \leq i \leq n$, and $o_q^i \sim q$, $o_p^i \sim V(p)$ are observations sampled from the simulation and the environment, respectively, when enacting the agent's policy $\pi$. Importantly, the conditioning actions for these samples are always obtained by the agent interacting with the environment $\mathcal{E}$, while the conditioning observations can be obtained either from $\mathcal{E}$ (the teacher forcing objective) or from the simulation (the auto-regressive objective).

We always train our generative model with the teacher forcing objective. Given a simulation distribution function $q$, the environment $\mathcal{E}$ can be simulated by auto-regressively sampling observations.
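The difference between the two conditioning modes can be sketched in a few lines. The code below is an illustration only: `toy_q` is a hypothetical deterministic stand-in for the simulation function $q$, observations are plain numbers rather than frames, and the helper names are not from the paper.

```python
from typing import Callable, List, Optional, Sequence

Obs = float   # stand-in for a frame
Action = int  # stand-in for a key press

# q(o_n | o_{<n}, a_{<=n}); deterministic here for simplicity.
Simulator = Callable[[Sequence[Obs], Sequence[Action]], Obs]


def toy_q(past_obs: Sequence[Obs], actions: Sequence[Action]) -> Obs:
    """Hypothetical simulator: next observation = last observation + last action."""
    return past_obs[-1] + actions[-1]


def rollout(q: Simulator, o0: Obs, actions: Sequence[Action],
            ground_truth: Optional[Sequence[Obs]] = None) -> List[Obs]:
    """Predict one observation per action.

    With ground_truth, each step is conditioned on real past observations
    (teacher forcing). Without it, each step is conditioned on the model's
    own past predictions (auto-regressive)."""
    history = [o0]
    preds = []
    for n in range(len(actions)):
        o_n = q(history, actions[: n + 1])
        preds.append(o_n)
        history.append(ground_truth[n] if ground_truth is not None else o_n)
    return preds


actions = [1, 1, -1]
autoregressive = rollout(toy_q, 0.0, actions)
teacher_forced = rollout(toy_q, 0.0, actions, ground_truth=[10.0, 20.0, 30.0])
```

In both modes the actions come from the same recorded trajectory; only the source of the conditioning observations changes.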

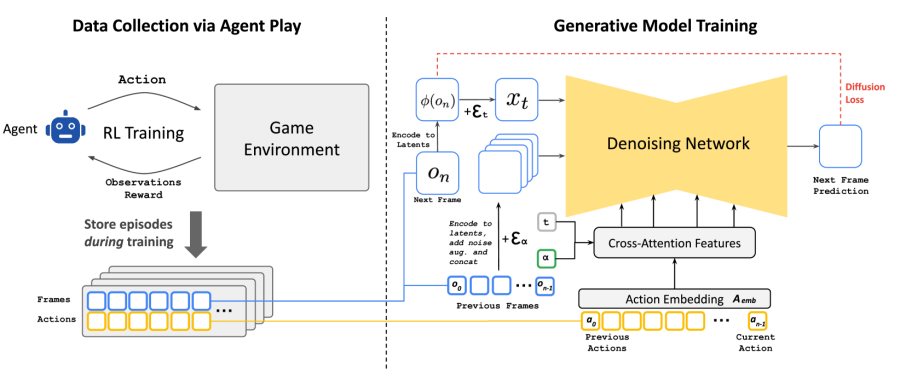

3 GameNGen

GameNGen (pronounced "game engine") is a generative diffusion model that learns to simulate the game under the settings of Section 2. To collect training data for this model, which is trained with the teacher forcing objective, we first train a separate model to interact with the environment. The two models (agent and generative) are trained in sequence. The entire corpus of the agent's actions and observations during training, $\mathcal{T}_{agent}$, is retained and becomes the training dataset for the generative model in the second stage. See Figure 3.

3.1 Data Collection via Agent Play

Our end goal is to have human players interact with our simulation. To that end, the policy $\pi$ from Section 2 is that of human gameplay. Since we cannot sample from that directly at scale, we start by approximating it via teaching an automatic agent to play the game. Unlike a typical RL setup, which attempts to maximize the game score, our goal is to generate training data that resembles human play, or at least contains enough diverse examples across a variety of scenarios, to maximize training data efficiency. To that end, we design a simple reward function, which is the only part of our method that is environment-specific (see Appendix A.3).

We record the agent's training trajectories throughout the entire training process, which covers play at different skill levels. This set of recorded trajectories is our $\mathcal{T}_{agent}$ dataset, used for training the generative model (see Section 3.2).

3.2 Training the Generative Diffusion Model

We now train a generative diffusion model conditioned on the agent's trajectories $\mathcal{T}_{agent}$ (actions and observations) collected during the previous stage.

We re-purpose a pre-trained text-to-image diffusion model, Stable Diffusion v1.4 (Rombach et al., 2022). We condition the model $f_{\theta}$ on trajectories $T \sim \mathcal{T}_{agent}$, i.e., on a sequence of previous actions $a_{<n}$ and observations (frames) $o_{<n}$, and remove all text conditioning. Specifically, to condition on actions, we simply learn an embedding $A_{emb}$ from each action (e.g., a specific key press) into a single token and replace the cross-attention from the text with this encoded action sequence. To condition on observations (i.e., previous frames), we encode them into latent space using the auto-encoder $\phi$ and concatenate them in the latent channels dimension to the noised latents (see Figure 3). We also experimented with conditioning on these past observations via cross-attention but observed no meaningful improvements.
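As a rough sanity check on this conditioning scheme, the sketch below computes the tensor shapes implied by numbers stated elsewhere in the paper (8x8 pixel patches and 4 latent channels from Section 3.2.2; 320x256 frames and a context of 64 frames from Section 4.2). The function names are hypothetical, and the resulting channel count is derived arithmetic under the assumption that every context latent is stacked with the single noised latent; it is not a figure reported by the authors.

```python
def latent_shape(height_px: int, width_px: int, patch: int = 8, channels: int = 4):
    """Shape (H, W, C) of one encoded frame, assuming 8x8 pixel patches map to 4 channels."""
    return (height_px // patch, width_px // patch, channels)


def unet_input_channels(context_frames: int, channels: int = 4) -> int:
    """Channels after concatenating the noised latent with all context latents."""
    return (context_frames + 1) * channels


frame_latent = latent_shape(height_px=256, width_px=320)
input_channels = unet_input_channels(context_frames=64)
```

Under these assumptions each frame becomes a 32x40x4 latent, and the denoiser input has 260 channels.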

We train the model to minimize the diffusion loss with velocity parameterization (Salimans & Ho, 2022b):

$$\mathcal{L} = \mathbb{E}_{t,\epsilon,T}\left[ \left\| v(\epsilon, x_{0}, t) - v_{\theta'}\left(x_{t}, t, \{\phi(o_{i<n})\}, \{A_{emb}(a_{i<n})\}\right) \right\|_{2}^{2} \right] \tag{1}$$

where $T = \{o_{i \leq n}, a_{i \leq n}\} \sim \mathcal{T}_{agent}$, $x_{0} = \phi(o_{n})$, $t \sim \mathcal{U}(0,1)$, $\epsilon \sim \mathcal{N}(0, \mathbf{I})$, $x_{t} = \sqrt{\overline{\alpha}_{t}}\, x_{0} + \sqrt{1 - \overline{\alpha}_{t}}\, \epsilon$, $v(\epsilon, x_{0}, t) = \sqrt{\overline{\alpha}_{t}}\, \epsilon - \sqrt{1 - \overline{\alpha}_{t}}\, x_{0}$, and $v_{\theta'}$ is the v-prediction output of the model $f_{\theta}$. The noise schedule $\overline{\alpha}_{t}$ is linear, similarly to Rombach et al. (2022).
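The quantities in Eq. (1) can be checked numerically on toy vectors. The sketch below implements the definitions of $x_t$ and $v$ exactly as written above, using plain Python lists in place of latents. The function names are illustrative, and the value of `alpha_bar` is an arbitrary choice rather than a point on the actual noise schedule.

```python
import math
import random


def diffuse(x0, eps, alpha_bar):
    """x_t = sqrt(alpha_bar) * x0 + sqrt(1 - alpha_bar) * eps"""
    a, s = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [a * x + s * e for x, e in zip(x0, eps)]


def v_target(x0, eps, alpha_bar):
    """v = sqrt(alpha_bar) * eps - sqrt(1 - alpha_bar) * x0"""
    a, s = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [a * e - s * x for x, e in zip(x0, eps)]


def v_loss(v_pred, v_true):
    """Squared L2 distance between predicted and target velocity."""
    return sum((p - t) ** 2 for p, t in zip(v_pred, v_true))


def x0_from_v(x_t, v, alpha_bar):
    """Invert the parameterization: x0 = sqrt(alpha_bar) * x_t - sqrt(1 - alpha_bar) * v"""
    a, s = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [a * xt - s * vi for xt, vi in zip(x_t, v)]


rng = random.Random(0)
x0 = [rng.gauss(0.0, 1.0) for _ in range(8)]   # toy "clean latent"
eps = [rng.gauss(0.0, 1.0) for _ in range(8)]  # Gaussian noise
alpha_bar = 0.3                                # arbitrary illustrative value

x_t = diffuse(x0, eps, alpha_bar)
v = v_target(x0, eps, alpha_bar)
recovered = x0_from_v(x_t, v, alpha_bar)       # matches x0 up to rounding
```

The last line illustrates why a v-prediction is sufficient for sampling: given $x_t$ and $v$, the clean latent follows in closed form.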

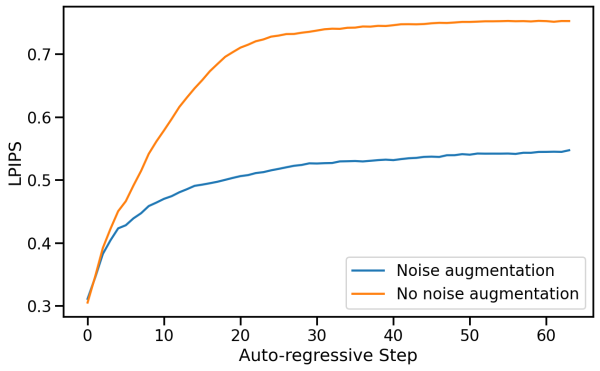

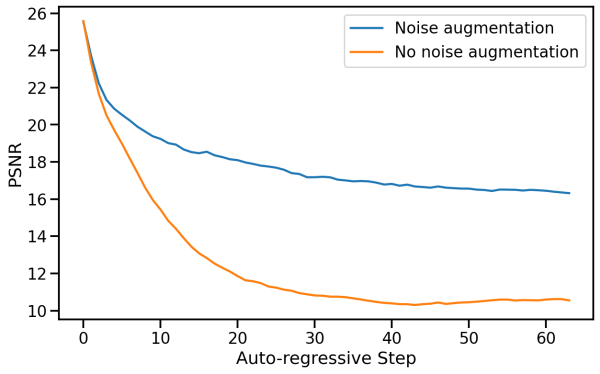

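3.2.1 Mitigating Auto-Regressive Drift Using Noise Augmentation

The domain shift between training with teacher forcing and auto-regressive sampling leads to error accumulation and fast degradation in sample quality, as demonstrated in Figure 4. To avoid this divergence due to the auto-regressive application of the model, we corrupt the context frames by adding a varying amount of Gaussian noise to the encoded frames at training time, while providing the noise level as input to the model, following Ho et al. (2021). To that effect, we sample a noise level $\alpha$ uniformly up to a maximal value, discretize it, and learn an embedding for each bucket (see Figure 3). This allows the network to correct information sampled in previous frames and is critical for preserving frame quality over time. During inference, the added noise level can be controlled to maximize quality, although we find that even with no added noise the results are significantly improved. We ablate the impact of this method in Section 5.2.2.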
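The augmentation step can be sketched as follows, using the maximal noise level (0.7) and the number of buckets (10) reported in Section 4.2. Several details here are assumptions made for illustration and are not specified in the text: plain additive noise with standard deviation $\alpha$, one noise level per training example, equal-width buckets, and all helper names.

```python
import random

MAX_NOISE = 0.7    # maximal noise level reported in Section 4.2
NUM_BUCKETS = 10   # number of embedding buckets reported in Section 4.2


def bucket_index(alpha: float, max_noise: float = MAX_NOISE,
                 num_buckets: int = NUM_BUCKETS) -> int:
    """Map a noise level in [0, max_noise] to a bucket index in [0, num_buckets - 1]."""
    idx = int(alpha / max_noise * num_buckets)
    return min(idx, num_buckets - 1)


def corrupt_context(latents, alpha, rng):
    """Add zero-mean Gaussian noise with standard deviation alpha to each latent value.
    Plain additive noise is an assumption; the text does not give the exact form."""
    return [[x + alpha * rng.gauss(0.0, 1.0) for x in frame] for frame in latents]


rng = random.Random(0)
context = [[0.5, -0.2, 1.0], [0.4, -0.1, 0.9]]  # two toy "encoded frames"
alpha = rng.uniform(0.0, MAX_NOISE)             # assumed: one level per example
noisy_context = corrupt_context(context, alpha, rng)
noise_bucket = bucket_index(alpha)              # index into a learned embedding table
```

At inference time the same interface would allow passing `alpha = 0.0`, which leaves the context untouched while still telling the model which bucket applies.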

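3.2.2 Latent Decoder Fine-Tuning

The pre-trained auto-encoder of Stable Diffusion v1.4, which compresses 8x8 pixel patches into 4 latent channels, results in meaningful artifacts when predicting game frames. These affect small details, particularly the bottom-bar HUD ("heads-up display"). To leverage the pre-trained knowledge while improving image quality, we train just the decoder of the latent auto-encoder, using an MSE loss computed against the target frame pixels. It might be possible to improve quality even further using a perceptual loss such as LPIPS (Zhang et al., 2018), which we leave to future work. Importantly, note that this fine-tuning process happens completely separately from the U-Net fine-tuning, and that the auto-regressive generation is not affected by it (we only condition auto-regressively on the latents, not the pixels). Appendix A.2 shows examples of generations with and without fine-tuning the auto-encoder.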

3.3 Inference

3.3.1 Setup

We use DDIM sampling (Song et al., 2022). We employ Classifier-Free Guidance (Ho & Salimans, 2022) only for the past observations condition $o_{<n}$. We did not find guidance for the past actions condition $a_{<n}$ to improve quality. The weight we use is relatively small (1.5), as larger weights create artifacts which are then amplified by our auto-regressive sampling.
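For reference, a common way to combine conditional and unconditional predictions under classifier-free guidance is sketched below. This is the widely used formulation, stated here as an assumption: the text gives only the weight (1.5), not the exact convention the authors use. In this setting the "unconditional" prediction would be the one computed with the context frames dropped.

```python
def cfg_combine(pred_cond, pred_uncond, weight=1.5):
    """Guided prediction: uncond + weight * (cond - uncond).

    weight = 1.0 returns the conditional prediction unchanged;
    larger weights push further away from the unconditional one."""
    return [u + weight * (c - u) for c, u in zip(pred_cond, pred_uncond)]


# Toy predictions with and without the context-frame condition.
guided = cfg_combine([2.0, 4.0], [1.0, 2.0])
```

With this convention, a weight of 1.5 extrapolates half a step beyond the conditional prediction, which is consistent with the remark that larger weights exaggerate artifacts.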

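We also experimented with generating 4 samples in parallel and combining the results, with the hope of preventing rare extreme predictions from being accepted and of reducing error accumulation. We tried both averaging the samples and choosing the sample closest to the median. Averaging performed slightly worse than a single frame, and choosing the sample closest to the median performed only negligibly better. Since both approaches increase the hardware requirements to 4 TPUs, we opt not to use them, but note that this might be an interesting area for future research.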
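The two combination strategies can be illustrated on toy vectors. The sketch below is one plausible reading of "closest to the median" (the smallest squared distance to the element-wise median); the distance measure and the function names are assumptions, since the text does not define them.

```python
from statistics import median


def mean_sample(samples):
    """Element-wise average of the candidate samples."""
    n = len(samples)
    return [sum(vals) / n for vals in zip(*samples)]


def closest_to_median(samples):
    """Return the candidate with the smallest squared distance to the element-wise median."""
    med = [median(vals) for vals in zip(*samples)]

    def dist(sample):
        return sum((a - b) ** 2 for a, b in zip(sample, med))

    return min(samples, key=dist)


# Four toy candidates; the last one is an outlier.
candidates = [[0.0, 5.0], [1.0, 1.0], [2.0, 0.0], [9.0, 2.0]]
averaged = mean_sample(candidates)
selected = closest_to_median(candidates)
```

Averaging yields a blend that matches none of the candidates and is pulled towards the outlier, whereas the median-based rule always returns one of the actual samples.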

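3.3.2 Denoiser Sampling Steps

During inference, we need to run both the U-Net denoiser (for a number of steps) and the auto-encoder. On our hardware configuration (a TPU-v5), a single denoiser step and an evaluation of the auto-encoder each take 10 ms. If we ran our model with a single denoiser step, the minimum total latency possible in our setup would be 20 ms per frame, or 50 frames per second. Usually, generative diffusion models, such as Stable Diffusion, do not produce high-quality results with a single denoising step, and instead require dozens of sampling steps to generate a high-quality image. Surprisingly, we found that we can robustly simulate DOOM with only 4 DDIM sampling steps (Song et al., 2020). In fact, we observe no degradation in simulation quality when using 4 sampling steps vs. 20 steps or more (see Appendix A.4).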

Using just 4 denoising steps leads to a total U-Net cost of 40ms (and a total inference cost of 50ms, including the auto-encoder), or 20 frames per second. We hypothesize that the negligible impact on quality with few steps in our case stems from a combination of: (1) a constrained image space, and (2) strong conditioning by the previous frames.
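The latency arithmetic in this section can be written out explicitly. This is a sketch assuming that the denoiser steps and the auto-encoder run sequentially and that each costs 10 ms, as stated above; the function names are illustrative.

```python
def frame_latency_ms(denoise_steps: int, step_ms: float = 10.0,
                     autoencoder_ms: float = 10.0) -> float:
    """Per-frame latency: sequential denoiser steps plus one auto-encoder evaluation."""
    return denoise_steps * step_ms + autoencoder_ms


def frames_per_second(denoise_steps: int) -> float:
    """Frame rate implied by the per-frame latency."""
    return 1000.0 / frame_latency_ms(denoise_steps)


fps_four_steps = frames_per_second(4)   # 50 ms per frame
fps_single_step = frames_per_second(1)  # 20 ms per frame
```

Under the same assumptions, a 20-step sampler would take 210 ms per frame, which is below 5 frames per second and far from interactive.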

Since we do observe degradation when using just a single sampling step, we also experimented with model distillation similarly to (Yin et al., 2024; Wang et al., 2023) in the single-step setting. Distillation does help substantially there (allowing us to reach 50 FPS as above), but still comes at some cost to simulation quality, so we opt to use the 4-step version without distillation for our method (see Appendix A.4). This is an interesting area for further research.

We note that it is trivial to further increase the image generation rate substantially by parallelizing the generation of several frames on additional hardware, similarly to NVIDIA’s classic SLI Alternate Frame Rendering (AFR) technique. As with AFR, the actual simulation rate would not increase and input lag would not be reduced.

4 Experimental Setup

4.1 Agent Training

The agent model is trained using PPO (Schulman et al., 2017), with a simple CNN as the feature network, following Mnih et al. (2015). It is trained on CPU using the Stable Baselines 3 infrastructure (Raffin et al., 2021). The agent is provided with downscaled versions of the frame images and in-game map, each at resolution 160x120. The agent also has access to the last 32 actions it performed. The feature network computes a representation of size 512 for each image. PPO’s actor and critic are 2-layer MLP heads on top of a concatenation of the outputs of the image feature network and the sequence of past actions. We train the agent to play the game using the Vizdoom environment (Wydmuch et al., 2019). We run 8 games in parallel, each with a replay buffer size of 512, a discount factor $\gamma = 0.99$, and an entropy coefficient of $0.1$. In each iteration, the network is trained using a batch size of 64 for 10 epochs, with a learning rate of 1e-4. We perform a total of 10M environment steps.

4.2 Generative Model Training

We train all simulation models from a pretrained checkpoint of Stable Diffusion 1.4, unfreezing all U-Net parameters. We use a batch size of 128 and a constant learning rate of 2e-5, with the Adafactor optimizer without weight decay (Shazeer & Stern, 2018) and gradient clipping of 1.0. We change the diffusion loss parameterization to be v-prediction (Salimans & Ho 2022a). The context frames condition is dropped with probability 0.1 to allow CFG during inference. We train using 128 TPU-v5e devices with data parallelization. Unless noted otherwise, all results in the paper are after 700,000 training steps. For noise augmentation (Section 3.2.1), we use a maximal noise level of 0.7, with 10 embedding buckets. We use a batch size of 2,048 for optimizing the latent decoder; other training parameters are identical to those of the denoiser. For training data, we use all trajectories played by the agent during RL training as well as evaluation data during training, unless mentioned otherwise. Overall, we generate 900M frames for training. All image frames (during training, inference, and conditioning) are at a resolution of 320x240 padded to 320x256. We use a context length of 64 (i.e., the model is provided its own last 64 predictions as well as the last 64 actions).

5 Results

5.1 Simulation Quality

Overall, our method achieves a simulation quality comparable to the original game over long trajectories in terms of image quality. For short trajectories, human raters are only slightly better than random chance at distinguishing between clips of the simulation and the actual game.

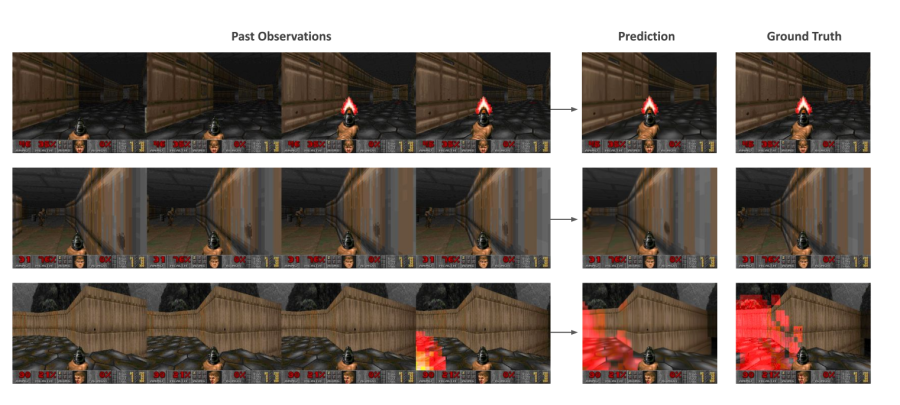

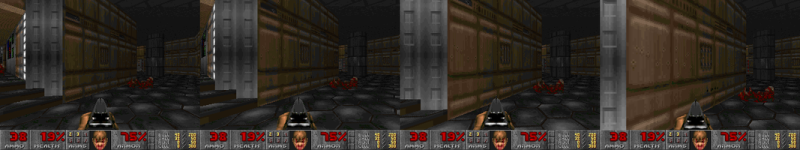

Image Quality. We measure LPIPS (Zhang et al., 2018) and PSNR using the teacher-forcing setup described in Section 2, where we sample an initial state and predict a single frame based on a trajectory of ground-truth past observations. When evaluated over a random holdout of 2048 trajectories taken in 5 different levels, our model achieves a PSNR of $29.43$ and an LPIPS of $0.249$. The PSNR value is similar to lossy JPEG compression with quality settings of 20-30 (Petric & Milinkovic, 2018). Figure 5 shows examples of model predictions and the corresponding ground truth samples.
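For readers unfamiliar with the metric, PSNR is defined as $10 \log_{10}(\mathrm{MAX}^2 / \mathrm{MSE})$. The sketch below computes it for flat lists of pixel values and also inverts it to show what root-mean-square error a given PSNR corresponds to. The 8-bit pixel range (peak value 255) is an assumption, as the text does not state the range used for evaluation, and the helper names are illustrative.

```python
import math


def psnr(pred, target, max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB between two equally sized pixel lists."""
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


def rmse_for_psnr(psnr_db: float, max_value: float = 255.0) -> float:
    """Root-mean-square pixel error that corresponds to a given PSNR."""
    return max_value / (10.0 ** (psnr_db / 20.0))


off_by_one = psnr([10.0, 20.0, 30.0], [11.0, 21.0, 31.0])  # MSE = 1
typical_error = rmse_for_psnr(29.43)                       # reported PSNR
```

Under the 8-bit assumption, the reported PSNR of 29.43 corresponds to an RMS error of roughly 8.6 intensity levels per pixel.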

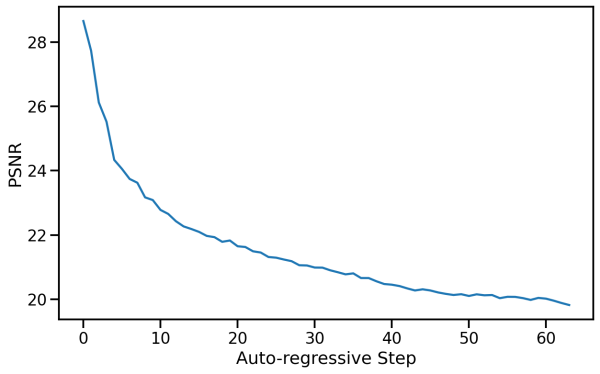

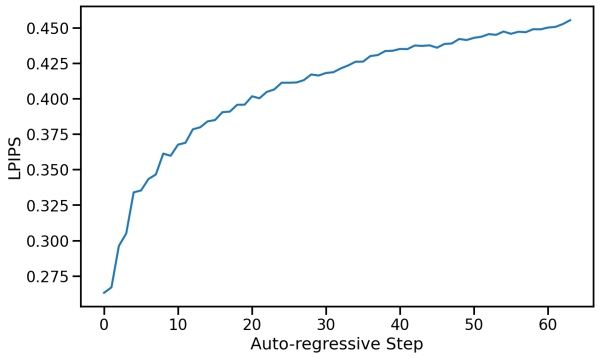

Video Quality. We use the auto-regressive setup described in Section 2, where we iteratively sample frames following the sequences of actions defined by the ground-truth trajectory, while conditioning the model on its own past predictions. When sampled auto-regressively, the predicted and ground-truth trajectories often diverge after a few steps, mostly due to the accumulation of small amounts of different movement velocities between frames in each trajectory. For that reason, per-frame PSNR and LPIPS values gradually decrease and increase respectively, as can be seen in Figure 6. The predicted trajectory is still similar to the actual game in terms of content and image quality, but per-frame metrics are limited in their ability to capture this (see Appendix A.1 for samples of auto-regressively generated trajectories).

We therefore measure the FVD (Unterthiner et al., 2019) computed over a random holdout of 512 trajectories, measuring the distance between the predicted and ground truth trajectory distributions, for simulations of length 16 frames (0.8 seconds) and 32 frames (1.6 seconds). For 16 frames, our model obtains an FVD of $114.02$. For 32 frames, our model obtains an FVD of $186.23$.
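The clip durations quoted here follow from the 20 frames-per-second simulation rate of Section 3.3.2. A small sketch of the conversion is given below; the helper name is illustrative, and the 64-frame entry uses the context length from Section 4.2.

```python
SIM_FPS = 20  # simulation rate with 4 denoising steps (Section 3.3.2)


def clip_seconds(num_frames: int, fps: int = SIM_FPS) -> float:
    """Duration in seconds of a clip with the given number of frames."""
    return num_frames / fps


durations = {n: clip_seconds(n) for n in (16, 32, 64)}
```

The same conversion gives 3.2 seconds for the 64-frame conditioning context.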

Human Evaluation. As another measurement of simulation quality, we provided 10 human raters with 130 random short clips (of lengths 1.6 seconds and 3.2 seconds) of our simulation side by side with the real game. The raters were tasked with recognizing the real game (see Figure 14 in Appendix A.6). The raters chose the actual game over the simulation only 58% and 60% of the time (for the 1.6-second and 3.2-second clips, respectively).

5.2 Ablations

To evaluate the importance of the different components of our methods, we sample trajectories from the evaluation dataset and compute LPIPS and PSNR metrics between the ground truth and the predicted frames.

5.2.1 Context Length

We evaluate the impact of changing the number $N$ of past observations in the conditioning context by training models with $N \in \{1, 2, 4, 8, 16, 32, 64\}$ (recall that our method uses $N = 64$). This affects both the number of historical frames and actions. We train the models for 200,000 steps keeping the decoder frozen and evaluate on test-set trajectories from 5 levels. See the results in Table 1. As expected, we observe that generation quality improves with the length of the context. Interestingly, we observe that while the improvement is large at first (e.g., between 1 and 2 frames), we quickly approach an asymptote and further increasing the context size provides only small improvements in quality. This is somewhat surprising as even with our maximal context length, the model only has access to a little over 3 seconds of history. Notably, we observe that much of the game state is persisted for much longer periods (see Section 7). While the length of the conditioning context is an important limitation, Table 1 hints that we’d likely need to change the architecture of our model to efficiently support longer contexts, and employ better selection of the past frames to condition on, which we leave for future work.

Table 1: Number of history frames. We ablate the number of history frames used as context using 8912 test-set examples from 5 levels. More frames generally improve both PSNR and LPIPS metrics.

| History Context Length | PSNR $\uparrow$ | LPIPS $\downarrow$ |
|---|---|---|
| 64 | $22.36 \pm 0.033$ | $0.295 \pm 0.001$ |
| 32 | $22.31 \pm 0.033$ | $0.296 \pm 0.001$ |
| 16 | $22.28 \pm 0.033$ | $0.296 \pm 0.001$ |
| 8 | $22.26 \pm 0.033$ | $0.296 \pm 0.001$ |
| 4 | $22.26 \pm 0.034$ | $0.298 \pm 0.001$ |
| 2 | $22.03 \pm 0.037$ | $0.304 \pm 0.001$ |
| 1 | $20.94 \pm 0.044$ | $0.358 \pm 0.001$ |

5.2.2 Noise Augmentation

To ablate the impact of noise augmentation, we train a model without added noise. We evaluate both our standard model with noise augmentation and the model without added noise (after 200k training steps) auto-regressively and compute PSNR and LPIPS metrics between the predicted frames and the ground-truth over a random holdout of 512 trajectories. We report average metric values for each auto-regressive step up to a total of 64 frames in Figure 7.

Without noise augmentation, LPIPS distance from the ground truth increases rapidly compared to our standard noise-augmented model, while PSNR drops, indicating a divergence of the simulation from ground truth.

5.2.3 Agent Play

We compare training on agent-generated data to training on data generated using a random policy. For the random policy, we sample actions following a uniform categorical distribution that doesn’t depend on the observations. We compare the random and agent datasets by training 2 models for 700k steps along with their decoder. The models are evaluated on a dataset of 2048 human-play trajectories from 5 levels. We compare the first frame of generation, conditioned on a history context of 64 ground-truth frames, as well as a frame after 3 seconds of auto-regressive generation.

Overall, we observe that training the model on random trajectories works surprisingly well, but is limited by the exploration ability of the random policy. When comparing the single frame generation, the agent works only slightly better, achieving a PSNR of 25.06 vs 24.42 for the random policy. When comparing a frame after 3 seconds of auto-regressive generation, the difference increases to 19.02 vs 16.84. When playing with the model manually, we observe that some areas are very easy for both, some areas are very hard for both, and in some, the agent performs much better. With that, we manually split 456 examples into 3 buckets (easy, medium, and hard) based on their distance from the starting position in the game. We observe that on the easy and hard sets, the agent performs only slightly better than random, while on the medium set, the difference is much larger in favor of the agent, as expected (see Table 2). See Figure 13 in Appendix A.5 for an example of the scores during a single session of human play.

Table 2: Performance on Different Difficulty Levels. We compare the performance of models trained using Agent-generated and Random-generated data across easy, medium, and hard splits of the dataset. Easy and medium have 112 items, hard has 232 items. Metrics are computed for each trajectory on a single frame after 3 seconds.

| Difficulty Level | Data Generation Policy | PSNR ↑ | LPIPS ↓ |
|---|---|---|---|
| Easy | Agent | 20.94 ± 0.76 | 0.48 ± 0.01 |
| Easy | Random | 20.20 ± 0.83 | 0.48 ± 0.01 |
| Medium | Agent | 20.21 ± 0.36 | 0.50 ± 0.01 |
| Medium | Random | 16.50 ± 0.41 | 0.59 ± 0.01 |
| Hard | Agent | 17.51 ± 0.35 | 0.60 ± 0.01 |
| Hard | Random | 15.39 ± 0.43 | 0.61 ± 0.00 |

6 Related Work

Interactive 3D Simulation

Simulating visual and physical processes of 2D and 3D environments and allowing interactive exploration of them is an extensively developed field in computer graphics (Akenine-Möller et al., 2018). Game Engines, such as Unreal and Unity, are software that processes representations of scene geometry and renders a stream of images in response to user interactions. The game engine is responsible for keeping track of all world state, e.g., the player position and movement, objects, character animation, and lighting. It also tracks the game logic, e.g., points gained by accomplishing game objectives. Film and television productions use variants of ray-tracing (Shirley & Morley, 2008), which are too slow and compute-intensive for real-time applications. In contrast, game engines must keep a very high frame rate (typically 30-60 FPS), and therefore rely on highly-optimized polygon rasterization, often accelerated by GPUs. Physical effects such as shadows, particles, and lighting are often implemented using efficient heuristics rather than physically accurate simulation.

Neural 3D Simulation

Neural methods for reconstructing 3D representations have made significant advances over the last years. NeRFs (Mildenhall et al., 2020) parameterize radiance fields using a deep neural network that is specifically optimized for a given scene from a set of images taken from various camera poses. Once trained, novel points of view of the scene can be sampled using volume rendering methods. Gaussian Splatting (Kerbl et al., 2023) approaches build on NeRFs but represent scenes using 3D Gaussians and adapted rasterization methods, unlocking faster training and rendering times. While demonstrating impressive reconstruction results and real-time interactivity, these methods are often limited to static scenes.

Video Diffusion Models

Diffusion models achieved state-of-the-art results in text-to-image generation (Saharia et al., 2022; Rombach et al., 2022; Ramesh et al., 2022; Podell et al., 2023), a line of work that has also been applied to text-to-video generation tasks (Ho et al., 2022; Blattmann et al., 2023b; a; Gupta et al., 2023; Girdhar et al., 2023; Bar-Tal et al., 2024). Despite impressive advancements in realism, text adherence, and temporal consistency, video diffusion models remain too slow for real-time applications. Our work extends this research direction and adapts it for real-time generation conditioned autoregressively on a history of past observations and actions.

Game Simulation and World Models

Several works have attempted to train models for game simulation with action inputs. Yang et al. (2023) build a diverse dataset of real-world and simulated videos and train a diffusion model to predict a continuation video given a previous video segment and a textual description of an action. Menapace et al. (2021) and Bruce et al. (2024) focus on unsupervised learning of actions from videos. Menapace et al. (2024) convert textual prompts to game states, which are later converted to a 3D representation using NeRF. Unlike these works, we focus on interactive playable real-time simulation, and demonstrate robustness over long-horizon trajectories. We leverage an RL agent to explore the game environment and create rollouts of observations and interactions for training our interactive game model. Another line of work explored learning a predictive model of the environment and using it for training an RL agent. Ha & Schmidhuber (2018) train a Variational Auto-Encoder (Kingma & Welling, 2014) to encode game frames into a latent vector, and then use an RNN to mimic the VizDoom game environment, training on random rollouts from a random policy (i.e., selecting an action at random). Then a controller policy is learned by playing within the "hallucinated" environment. Hafner et al. (2020) demonstrate that an RL agent can be trained entirely on episodes generated by a learned world model in latent space. Also close to our work is Kim et al. (2020), which uses an LSTM architecture for modeling the world state, coupled with a convolutional decoder for producing output frames and jointly trained under an adversarial objective. While this approach seems to produce reasonable results for simple games like PacMan, it struggles with simulating the complex environment of VizDoom and produces blurry samples. In contrast, GameNGen is able to generate samples comparable to those of the original game; see Figure 2. Finally, concurrently with our work, Alonso et al. (2024) train a diffusion world model to predict the next observation given observation history, and iteratively train the world model and an RL model on Atari games.

DOOM

When DOOM was released in 1993, it revolutionized the gaming industry. Introducing groundbreaking 3D graphics technology, it became a cornerstone of the first-person shooter genre, influencing countless other games. DOOM has been studied in numerous research works. It has an open-source implementation and a native resolution that is low enough for small-sized models to simulate, while being complex enough to be a challenging test case. Finally, the authors have spent countless youth hours with the game. It was a trivial choice to use it in this work.

7 Discussion

Summary. We introduced GameNGen and demonstrated that high-quality real-time gameplay at 20 frames per second is possible on a neural model. We also provided a recipe for converting an interactive piece of software such as a computer game into a neural model.

Limitations. GameNGen suffers from a limited amount of memory. The model only has access to a little over 3 seconds of history, so it's remarkable that much of the game logic is persisted for drastically longer time horizons. While some of the game state is persisted through screen pixels (e.g., ammo and health tallies, available weapons, etc.), the model likely learns strong heuristics that allow meaningful generalizations. For example, from the rendered view, the model learns to infer the player's location, and from the ammo and health tallies, the model might infer whether the player has already been through an area and defeated the enemies there. That said, it's easy to create situations where this context length is not enough. Continuing to increase the context size with our existing architecture yields only marginal benefits (Section 5.2.1), and the model's short context length remains an important limitation. The second important limitation is the remaining difference between the agent's behavior and that of human players. For example, our agent, even at the end of training, still does not explore all of the game's locations and interactions, leading to erroneous behavior in those cases.
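The "little over 3 seconds" figure can be checked with simple arithmetic. The sketch below assumes the history context is 64 frames, as in the evaluation setup of Section 5.2.3, and that the game runs at 20 frames per second; the helper name `history_seconds` is hypothetical.

```python
def history_seconds(context_frames, fps):
    """Wall-clock span covered by a fixed-length frame history."""
    return context_frames / fps


# Assumed values: 64 context frames at 20 frames per second.
print(history_seconds(64, 20))  # 3.2
```

Under these assumptions, any game state that is neither visible on screen nor inferable from the last 3.2 seconds of frames and actions is unavailable to the model.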

Future Work. We demonstrate GameNGen on the classic game DOOM. It would be interesting to test it on other games or more generally on other interactive software systems. We note that nothing in our technique is DOOM specific except for the reward function for the RL-agent. We plan on addressing that in future work. While GameNGen manages to maintain game state accurately, it isn't perfect, as per the discussion above. A more sophisticated architecture might be needed to mitigate these issues. GameNGen currently has a limited capability to leverage more than a minimal amount of memory. Experimenting with further expanding the memory effectively could be critical for more complex games/software. GameNGen runs at 20 or 50 FPS on a TPUv5 (faster than the original game DOOM ran on some of the authors' 80386 machines at the time!). It would be interesting to experiment with further optimization techniques to get it to run at higher frame rates and on consumer hardware.

Towards a New Paradigm for Interactive Video Games. Today, video games are programmed by humans. GameNGen is a proof-of-concept for one part of a new paradigm where games are weights of a neural model, not lines of code. GameNGen shows that an architecture and model weights exist such that a neural model can effectively run a complex game (DOOM) interactively on existing hardware. While many important questions remain, we are hopeful that this paradigm could have important benefits. For example, the development process for video games under this new paradigm might be less costly and more accessible, whereby games could be developed and edited via textual descriptions or example images. A small part of this vision, namely creating modifications or novel behaviors for existing games, might be achievable in the shorter term. For example, we might be able to convert a set of frames into a new playable level or create a new character just based on example images, without having to author code. Other advantages of this new paradigm include strong guarantees on frame rates and memory footprints. We have not experimented with these directions yet and much more work is required here, but we are excited to try! Hopefully, this small step will someday contribute to a meaningful improvement in people’s experience with video games, or maybe even more generally, in day-to-day interactions with interactive software systems.

Acknowledgements

We’d like to extend a huge thank you to Eyal Segalis, Eyal Molad, Matan Kalman, Nataniel Ruiz, Amir Hertz, Matan Cohen, Yossi Matias, Yael Pritch, Danny Lumen, Valerie Nygaard, the Theta Labs and Google Research teams, and our families for insightful feedback, ideas, suggestions, and support.

Contribution

- Dani Valevski: Developed much of the codebase, tuned parameters and details across the system, added autoencoder fine-tuning, agent training, and distillation.

- Yaniv Leviathan: Proposed project, method, and architecture, developed the initial implementation, key contributor to implementation and writing.

- Moab Arar: Led auto-regressive stabilization with noise-augmentation, many of the ablations, and created the dataset of human-play data.

- Shlomi Fruchter: Proposed project, method, and architecture. Project leadership, initial implementation using DOOM, main manuscript writing, evaluation metrics, random policy data pipeline.

Correspondence to shlomif@google.com and leviathan@google.com.

References

- Akenine-Möller et al. (2018) Tomas Akenine-Möller, Eric Haines, and Naty Hoffman. Real-Time Rendering, Fourth Edition. A. K. Peters, Ltd., USA, 4th edition, 2018. ISBN 0134997832.

- Alonso et al. (2024) Eloi Alonso, Adam Jelley, Vincent Micheli, Anssi Kanervisto, Amos Storkey, Tim Pearce, and François Fleuret. Diffusion for world modeling: Visual details matter in Atari, 2024.

- Bar-Tal et al. (2024) Omer Bar-Tal, Hila Chefer, Omer Tov, Charles Herrmann, Roni Paiss, Shiran Zada, Ariel Ephrat, Junhwa Hur, Guanghui Liu, Amit Raj, Yuanzhen Li, Michael Rubinstein, Tomer Michaeli, Oliver Wang, Deqing Sun, Tali Dekel, and Inbar Mosseri. Lumiere: A space-time diffusion model for video generation, 2024. URL: [1](https://arxiv.org/abs/2401.12945).

- Blattmann et al. (2023a) Andreas Blattmann, Tim Dockhorn, Sumith Kulal, Daniel Mendelevitch, Maciej Kilian, Dominik Lorenz, Yam Levi, Zion English, Vikram Voleti, Adam Letts, Varun Jampani, and Robin Rombach. Stable video diffusion: Scaling latent video diffusion models to large datasets, 2023a. URL: [2](https://arxiv.org/abs/2311.15127).

- Blattmann et al. (2023b) Andreas Blattmann, Robin Rombach, Huan Ling, Tim Dockhorn, Seung Wook Kim, Sanja Fidler, and Karsten Kreis. Align your latents: High-resolution video synthesis with latent diffusion models, 2023b. URL: [3](https://arxiv.org/abs/2304.08818).

- Brooks et al. (2024) Tim Brooks, Bill Peebles, Connor Holmes, Will DePue, Yufei Guo, Li Jing, David Schnurr, Joe Taylor, Troy Luhman, Eric Luhman, Clarence Ng, Ricky Wang, and Aditya Ramesh. Video generation models as world simulators, 2024. URL: [4](https://openai.com/research/video-generation-models-as-world-simulators).

- Bruce et al. (2024) Jake Bruce, Michael Dennis, Ashley Edwards, Jack Parker-Holder, Yuge Shi, Edward Hughes, Matthew Lai, Aditi Mavalankar, Richie Steigerwald, Chris Apps, Yusuf Aytar, Sarah Bechtle, Feryal Behbahani, Stephanie Chan, Nicolas Heess, Lucy Gonzalez, Simon Osindero, Sherjil Ozair, Scott Reed, Jingwei Zhang, Konrad Zolna, Jeff Clune, Nando de Freitas, Satinder Singh, and Tim Rocktäschel. Genie: Generative interactive environments, 2024. URL: [5](https://arxiv.org/abs/2402.15391).

- Girdhar et al. (2023) Rohit Girdhar, Mannat Singh, Andrew Brown, Quentin Duval, Samaneh Azadi, Sai Saketh Rambhatla, Akbar Shah, Xi Yin, Devi Parikh, and Ishan Misra. Emu video: Factorizing text-to-video generation by explicit image conditioning, 2023. URL: [6](https://arxiv.org/abs/2311.10709).

- Gupta et al. (2023) Agrim Gupta, Lijun Yu, Kihyuk Sohn, Xiuye Gu, Meera Hahn, Li Fei-Fei, Irfan Essa, Lu Jiang, and José Lezama. Photorealistic video generation with diffusion models, 2023. URL: [7](https://arxiv.org/abs/2312.06662).

- Ha & Schmidhuber (2018) David Ha and Jürgen Schmidhuber. World models, 2018.

- Hafner et al. (2020) Danijar Hafner, Timothy Lillicrap, Jimmy Ba, and Mohammad Norouzi. Dream to control: Learning behaviors by latent imagination, 2020. URL: [8](https://arxiv.org/abs/1912.01603).

- Ho & Salimans (2022) Jonathan Ho and Tim Salimans. Classifier-free diffusion guidance, 2022. URL: [9](https://arxiv.org/abs/2207.12598).

- Ho et al. (2021) Jonathan Ho, Chitwan Saharia, William Chan, David J Fleet, Mohammad Norouzi, and Tim Salimans. Cascaded diffusion models for high fidelity image generation. arXiv preprint arXiv:2106.15282, 2021.

- Ho et al. (2022) Jonathan Ho, William Chan, Chitwan Saharia, Jay Whang, Ruiqi Gao, Alexey A. Gritsenko, Diederik P. Kingma, Ben Poole, Mohammad Norouzi, David J. Fleet, and Tim Salimans. Imagen video: High definition video generation with diffusion models. ArXiv, abs/2210.02303, 2022. URL: [10](https://api.semanticscholar.org/CorpusID:252715883).

- Kerbl et al. (2023) Bernhard Kerbl, Georgios Kopanas, Thomas Leimkühler, and George Drettakis. 3d gaussian splatting for real-time radiance field rendering. ACM Transactions on Graphics, 42(4), July 2023. URL: [11](https://repo-sam.inria.fr/fungraph/3d-gaussian-splatting/).

- Kim et al. (2020) Seung Wook Kim, Yuhao Zhou, Jonah Philion, Antonio Torralba, and Sanja Fidler. Learning to Simulate Dynamic Environments with GameGAN. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Jun. 2020.

- Kingma & Welling (2014) Diederik P. Kingma and Max Welling. Auto-Encoding Variational Bayes. In 2nd International Conference on Learning Representations, ICLR 2014, Banff, AB, Canada, April 14-16, 2014, Conference Track Proceedings, 2014.

- Menapace et al. (2021) Willi Menapace, Stéphane Lathuilière, Sergey Tulyakov, Aliaksandr Siarohin, and Elisa Ricci. Playable video generation, 2021. URL: [12](https://arxiv.org/abs/2101.12195).

- Menapace et al. (2024) Willi Menapace, Aliaksandr Siarohin, Stéphane Lathuilière, Panos Achlioptas, Vladislav Golyanik, Sergey Tulyakov, and Elisa Ricci. Promptable game models: Text-guided game simulation via masked diffusion models. ACM Transactions on Graphics, 43(2):1–16, January 2024. doi: [10.1145/3635705](http://dx.doi.org/10.1145/3635705).

- Mildenhall et al. (2020) Ben Mildenhall, Pratul P. Srinivasan, Matthew Tancik, Jonathan T. Barron, Ravi Ramamoorthi, and Ren Ng. Nerf: Representing scenes as neural radiance fields for view synthesis. In ECCV, 2020.

- Mnih et al. (2015) Volodymyr Mnih, Koray Kavukcuoglu, David Silver, Andrei A. Rusu, Joel Veness, Marc G. Bellemare, Alex Graves, Martin A. Riedmiller, Andreas Kirkeby Fidjeland, Georg Ostrovski, Stig Petersen, Charlie Beattie, Amir Sadik, Ioannis Antonoglou, Helen King, Dharshan Kumaran, Daan Wierstra, Shane Legg, and Demis Hassabis. Human-level control through deep reinforcement learning. Nature, 518:529–533, 2015. URL: [13](https://api.semanticscholar.org/CorpusID:205242740).

- Petric & Milinkovic (2018) Danko Petric and Marija Milinkovic. Comparison between cs and jpeg in terms of image compression, 2018. URL: [14](https://arxiv.org/abs/1802.05114).

- Podell et al. (2023) Dustin Podell, Zion English, Kyle Lacey, Andreas Blattmann, Tim Dockhorn, Jonas Müller, Joe Penna, and Robin Rombach. Sdxl: Improving latent diffusion models for high-resolution image synthesis. arXiv preprint arXiv:2307.01952, 2023.

- Raffin et al. (2021) Antonin Raffin, Ashley Hill, Adam Gleave, Anssi Kanervisto, Maximilian Ernestus, and Noah Dormann. Stable-baselines3: Reliable reinforcement learning implementations. Journal of Machine Learning Research, 22(268):1–8, 2021. URL: [15](http://jmlr.org/papers/v22/20-1364.html).

- Ramesh et al. (2022) Aditya Ramesh, Prafulla Dhariwal, Alex Nichol, Casey Chu, and Mark Chen. Hierarchical text-conditional image generation with clip latents. arXiv preprint arXiv:2204.06125, 2022.

- Rombach et al. (2022) Robin Rombach, Andreas Blattmann, Dominik Lorenz, Patrick Esser, and Björn Ommer. High-resolution image synthesis with latent diffusion models. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp. 10684–10695, 2022.

- Saharia et al. (2022) Chitwan Saharia, William Chan, Saurabh Saxena, Lala Li, Jay Whang, Emily L Denton, Kamyar Ghasemipour, Raphael Gontijo Lopes, Burcu Karagol Ayan, Tim Salimans, et al. Photorealistic text-to-image diffusion models with deep language understanding. Advances in Neural Information Processing Systems, 35:36479–36494, 2022.

- Salimans & Ho (2022a) Tim Salimans and Jonathan Ho. Progressive distillation for fast sampling of diffusion models. In The Tenth International Conference on Learning Representations, ICLR 2022, Virtual Event, April 25-29, 2022. OpenReview.net, 2022a. URL: [16](https://openreview.net/forum?id=TIdIXIpzhoI).

- Salimans & Ho (2022b) Tim Salimans and Jonathan Ho. Progressive distillation for fast sampling of diffusion models, 2022b. URL: [17](https://arxiv.org/abs/2202.00512).

- Schulman et al. (2017) John Schulman, Filip Wolski, Prafulla Dhariwal, Alec Radford, and Oleg Klimov. Proximal policy optimization algorithms. CoRR, abs/1707.06347, 2017. URL: [18](http://arxiv.org/abs/1707.06347).

- Shazeer & Stern (2018) Noam Shazeer and Mitchell Stern. Adafactor: Adaptive learning rates with sublinear memory cost. CoRR, abs/1804.04235, 2018. URL: [19](http://arxiv.org/abs/1804.04235).

- Shirley & Morley (2008) P. Shirley and R.K. Morley. Realistic Ray Tracing, Second Edition. Taylor & Francis, 2008. ISBN 9781568814612. URL: [20](https://books.google.ch/books?id=knpN6mnhJ8QC).

- Song et al. (2020) Jiaming Song, Chenlin Meng, and Stefano Ermon. Denoising diffusion implicit models. arXiv:2010.02502, October 2020. URL: [21](https://arxiv.org/abs/2010.02502).

- Song et al. (2022) Jiaming Song, Chenlin Meng, and Stefano Ermon. Denoising diffusion implicit models, 2022. URL: [22](https://arxiv.org/abs/2010.02502).

- Unterthiner et al. (2019) Thomas Unterthiner, Sjoerd van Steenkiste, Karol Kurach, Raphaël Marinier, Marcin Michalski, and Sylvain Gelly. FVD: A new metric for video generation. In Deep Generative Models for Highly Structured Data, ICLR 2019 Workshop, New Orleans, Louisiana, United States, May 6, 2019, 2019.

- Wang et al. (2023) Zhengyi Wang, Cheng Lu, Yikai Wang, Fan Bao, Chongxuan Li, Hang Su, and Jun Zhu. Prolificdreamer: High-fidelity and diverse text-to-3d generation with variational score distillation. arXiv preprint arXiv:2305.16213, 2023.

- Wydmuch et al. (2019) Marek Wydmuch, Michał Kempka, and Wojciech Jaśkowski. ViZDoom Competitions: Playing Doom from Pixels. IEEE Transactions on Games, 11(3):248–259, 2019. doi: [10.1109/TG.2018.2877047](http://dx.doi.org/10.1109/TG.2018.2877047).

- Yang et al. (2023) Mengjiao Yang, Yilun Du, Kamyar Ghasemipour, Jonathan Tompson, Dale Schuurmans, and Pieter Abbeel. Learning interactive real-world simulators. arXiv preprint arXiv:2310.06114, 2023.

- Yin et al. (2024) Tianwei Yin, Michaël Gharbi, Richard Zhang, Eli Shechtman, Frédo Durand, William T Freeman, and Taesung Park. One-step diffusion with distribution matching distillation. In CVPR, 2024.

- Zhang et al. (2018) Richard Zhang, Phillip Isola, Alexei A. Efros, Eli Shechtman, and Oliver Wang. The unreasonable effectiveness of deep features as a perceptual metric. In CVPR, 2018.

Appendix A Appendix

A.1 Samples

Figs. [8](https://arxiv.org/html/2408.14837v1#A1.F8), [9](https://arxiv.org/html/2408.14837v1#A1.F9), [10](https://arxiv.org/html/2408.14837v1#A1.F10), [11](https://arxiv.org/html/2408.14837v1#A1.F11) provide selected samples from GameNGen.