Diffusion Models Are Real-Time Game Engines

Authors: Dani Valevski (Google Research), Yaniv Leviathan (Google Research), Moab Arar (Tel Aviv University), Shlomi Fruchter (Google DeepMind)

ArXiv Link: https://arxiv.org/abs/2408.14837

Project Website: https://gamengen.github.io

Abstract

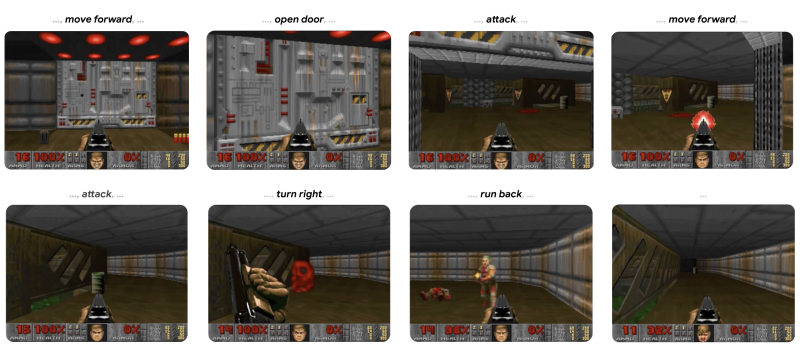

We present GameNGen, the first game engine powered entirely by a neural model that enables real-time interaction with a complex environment over long trajectories at high quality. GameNGen can interactively simulate the classic game DOOM at over 20 frames per second on a single TPU. Next frame prediction achieves a PSNR of 29.4, comparable to lossy JPEG compression. Human raters are only slightly better than random chance at distinguishing short clips of the game from clips of the simulation. GameNGen is trained in two phases: (1) an RL-agent learns to play the game and the training sessions are recorded, and (2) a diffusion model is trained to produce the next frame, conditioned on the sequence of past frames and actions. Conditioning augmentations enable stable auto-regressive generation over long trajectories.

See https://gamengen.github.io for demo videos.

1 Introduction

Computer games are manually crafted software systems centered around the following game loop: (1) gather user inputs, (2) update the game state, and (3) render it to screen pixels. This game loop, running at high frame rates, creates the illusion of an interactive virtual world for the player. Such game loops are classically run on standard computers, and while there have been many amazing attempts at running games on bespoke hardware (e.g., the iconic game DOOM has been run on kitchen appliances such as a toaster and a microwave, a treadmill, a camera, an iPod, and within the game of Minecraft, to name just a few examples; see https://www.reddit.com/r/itrunsdoom/), in all of these cases the hardware is still emulating the manually written game software as-is. Furthermore, while vastly different game engines exist, the game state updates and rendering logic in all of them are composed of a set of manual rules, programmed or configured by hand.

In recent years, generative models have made significant progress in producing images and videos conditioned on multi-modal inputs, such as text or images. At the forefront of this wave, diffusion models have become the de facto standard in media (i.e., non-language) generation, with works like Dall-E (Ramesh et al., 2022), Stable Diffusion (Rombach et al., 2022), and Sora (Brooks et al., 2024). At a glance, simulating the interactive worlds of video games may seem similar to video generation. However, interactive world simulation is more than just very fast video generation. The requirement to condition on a stream of input actions that only becomes available as generation proceeds breaks some assumptions of existing diffusion model architectures. Notably, it requires generating frames autoregressively, which tends to be unstable and leads to sampling divergence (see Section 3.2.1).

Several important works (Ha & Schmidhuber, 2018; Kim et al., 2020; Bruce et al., 2024) (see Section 6) simulate interactive video games with neural models. Nevertheless, most of these approaches are limited with respect to the complexity of the simulated games, simulation speed, stability over long time periods, or visual quality (see Figure 2). It is therefore natural to ask:

Can a neural model running in real-time simulate a complex game at high quality?

In this work, we demonstrate that the answer is yes. Specifically, we show that a complex video game, the iconic game DOOM, can be run on a neural network (an augmented version of the open Stable Diffusion v1.4 (Rombach et al., 2022)), in real-time, while achieving a visual quality comparable to that of the original game. While not an exact simulation, the neural model is able to perform complex game state updates, such as tallying health and ammo, attacking enemies, damaging objects, opening doors, and persisting the game state over long trajectories.

GameNGen answers one of the important questions on the road towards a new paradigm for game engines, one where games are automatically generated, much as images and videos have been generated by neural models in recent years. Key questions remain, such as how these neural game engines would be trained and how games would be effectively created in the first place, including how to best leverage human inputs. We are nevertheless extremely excited about the possibilities of this new paradigm.

2 Interactive World Simulation

An Interactive Environment $\mathcal{E}$ consists of a space of latent states $\mathcal{S}$, a space of partial projections of the latent space $\mathcal{O}$, a partial projection function $V: \mathcal{S} \rightarrow \mathcal{O}$, a set of actions $\mathcal{A}$, and a transition probability function $p \left( s \,|\, a, s^{\prime} \right)$ such that $s, s^{\prime} \in \mathcal{S}, a \in \mathcal{A}$.

For example, in the case of the game DOOM, $\mathcal{S}$ is the program’s dynamic memory contents, $\mathcal{O}$ is the rendered screen pixels, $V$ is the game’s rendering logic, $\mathcal{A}$ is the set of key presses and mouse movements, and $p$ is the program’s logic given the player’s input (including any potential non-determinism).
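To make the definition concrete, the following is a minimal Python sketch of an interactive environment. It is not DOOM and not part of GameNGen: the toy corridor, the names `InteractiveEnvironment`, `project`, `transition`, and `toy_env`, and the 10% "slip" probability are all assumptions chosen purely for illustration. The latent state holds a position plus a hidden step counter, and the projection $V$ exposes only the position, so observations are a partial view of the state.

```python
import random
from dataclasses import dataclass
from typing import Callable, Sequence, Tuple

State = Tuple[int, int]  # (position, hidden step counter): an element of S
Obs = int                # visible position only: an element of O
Action = int             # -1 (left) or +1 (right): an element of A


@dataclass(frozen=True)
class InteractiveEnvironment:
    """Hypothetical container for the tuple (A, V, p) of a toy environment."""
    actions: Sequence[Action]
    project: Callable[[State], Obs]                              # V: S -> O
    transition: Callable[[State, Action, random.Random], State]  # samples s ~ p(s | a, s')


def project(state: State) -> Obs:
    # Partial projection: the hidden counter is not observable.
    position, _hidden_counter = state
    return position


def transition(prev: State, action: Action, rng: random.Random) -> State:
    position, counter = prev
    # Assumed non-determinism: with probability 0.1 the move is ignored.
    if rng.random() >= 0.1:
        position = max(0, min(9, position + action))
    return (position, counter + 1)


toy_env = InteractiveEnvironment(actions=(-1, +1), project=project, transition=transition)

rng = random.Random(0)
state: State = (5, 0)
for action in (+1, +1, -1):
    state = toy_env.transition(state, action, rng)
    print(toy_env.project(state))  # the player only ever sees V(s)
```

In DOOM the same roles are played by the program's memory, the renderer, and the game logic; the sketch only mirrors the structure of the definition.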

Given an input interactive environment $\mathcal{E}$, and an initial state $s_{0} \in \mathcal{S}$, an Interactive World Simulation is a simulation distribution function $q \left( o_{n} \,|\, \{o_{< n}, a_{\leq n}\} \right), \; o_{i} \in \mathcal{O}, \; a_{i} \in \mathcal{A}$. Given a distance metric between observations $D: \mathcal{O} \times \mathcal{O} \rightarrow \mathbb{R}$, a policy, i.e., a distribution on agent actions given past actions and observations $\pi \left( a_{n} \,|\, o_{< n}, a_{< n} \right)$, a distribution $S_{0}$ on initial states, and a distribution $N_{0}$ on episode lengths, the Interactive World Simulation objective consists of minimizing $E \left( D \left( o_{q}^{i}, o_{p}^{i} \right) \right)$ where $n \sim N_{0}$, $0 \leq i \leq n$, and $o_{q}^{i} \sim q, \; o_{p}^{i} \sim V(p)$ are sampled observations from the environment and the simulation when enacting the agent’s policy $\pi$. Importantly, the conditioning actions for these samples are always obtained by the agent interacting with the environment $\mathcal{E}$, while the conditioning observations can either be obtained from $\mathcal{E}$ (the teacher forcing objective) or from the simulation (the auto-regressive objective).
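The difference between the two objectives can be illustrated with a small Python sketch. Everything here is a toy assumption rather than the paper's setup: the environment is a ring of 10 cells with the identity as projection, the simulation `sim_step` is a deliberately flawed deterministic model that clamps instead of wrapping around, $D$ is the absolute difference, and the expectation is replaced by the average over a single fixed trajectory. The actions always come from the environment rollout; only the source of the conditioning observations changes.

```python
from typing import List, Sequence


def env_step(obs: int, action: int) -> int:
    """Ground-truth environment: position on a ring of 10 cells."""
    return (obs + action) % 10


def sim_step(context: Sequence[int], action: int) -> int:
    """Flawed stand-in for q: clamps to [0, 9] instead of wrapping around."""
    return max(0, min(9, context[-1] + action))


def distance(a: int, b: int) -> float:
    """Toy distance metric D between two observations."""
    return float(abs(a - b))


def rollout_env(o0: int, actions: Sequence[int]) -> List[int]:
    """Observations recorded while the agent acts in the real environment."""
    observations = [o0]
    for a in actions:
        observations.append(env_step(observations[-1], a))
    return observations


def mean_distance(actions: Sequence[int], env_obs: Sequence[int],
                  teacher_forcing: bool) -> float:
    sim_obs = [env_obs[0]]
    total = 0.0
    for i, a in enumerate(actions):
        # Teacher forcing conditions on environment observations;
        # the auto-regressive mode conditions on the simulation's own outputs.
        context = env_obs[: i + 1] if teacher_forcing else sim_obs
        pred = sim_step(context, a)
        sim_obs.append(pred)
        total += distance(pred, env_obs[i + 1])
    return total / len(actions)


actions = [1, 1, 1, 1]
env_obs = rollout_env(8, actions)  # [8, 9, 0, 1, 2]
print(mean_distance(actions, env_obs, teacher_forcing=True))   # 2.25
print(mean_distance(actions, env_obs, teacher_forcing=False))  # 6.0
```

Under teacher forcing the single wrap-around mistake is paid for once, because the next step is conditioned on the true observation again. In the auto-regressive mode the same mistake is fed back as context and the error persists across the remaining steps.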

We always train our generative model with the teacher forcing objective. Given a simulation distribution function $q$, the environment $\mathcal{E}$ can be simulated by auto-regressively sampling observations.
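Auto-regressive sampling can be sketched as a simple loop in which each sampled observation is appended to the context used for the next step. The Python code below is an illustration under stated assumptions, not the GameNGen sampler: `toy_q` is a hypothetical stand-in for $q$ (a real system would run a generative model here), observations are scalars instead of frames, and the fixed `context_len` window is an assumed way of bounding the history.

```python
import random
from collections import deque
from typing import Callable, Deque, Iterable, Iterator, Sequence

Obs = float
Action = int
Sampler = Callable[[Sequence[Obs], Sequence[Action], random.Random], Obs]


def toy_q(past_obs: Sequence[Obs], actions: Sequence[Action],
          rng: random.Random) -> Obs:
    """Hypothetical q(o_n | o_<n, a_<=n): last observation plus newest action, with small noise."""
    return past_obs[-1] + actions[-1] + rng.gauss(0.0, 0.01)


def simulate(q: Sampler, first_obs: Obs, action_stream: Iterable[Action],
             context_len: int = 4, seed: int = 0) -> Iterator[Obs]:
    """Yield observations one at a time as actions arrive."""
    rng = random.Random(seed)
    obs_ctx: Deque[Obs] = deque([first_obs], maxlen=context_len)
    act_ctx: Deque[Action] = deque(maxlen=context_len)
    for action in action_stream:  # actions become available only during generation
        act_ctx.append(action)
        obs = q(tuple(obs_ctx), tuple(act_ctx), rng)
        obs_ctx.append(obs)       # the sample becomes conditioning for the next step
        yield obs


frames = list(simulate(toy_q, 0.0, [1, 1, -1, 1]))
print(len(frames))  # 4
```

Writing `simulate` as a generator reflects the interactive setting: the caller can supply each action only after seeing the previous observation, which is what distinguishes this loop from offline video generation.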