Diffusion Models Are Real-Time Game Engines


== 1 Introduction ==

Computer games are manually crafted software systems centered around the following game loop: (1) gather user inputs, (2) update the game state, and (3) render it to screen pixels. This game loop runs at high frame rates and creates the illusion of an interactive virtual world for the player. Such game loops typically run on standard computers. There have also been many amazing attempts at running games on bespoke hardware: the iconic game DOOM, for example, has been run on toasters, microwaves, a treadmill, a camera, an iPod, and even within the game of Minecraft, to name just a few (see https://www.reddit.com/r/itrunsdoom/ ). In all of these cases, however, the hardware is still directly emulating the manually written game software. Furthermore, while game engines vary widely, the game state updates and rendering logic in all of them are composed of a set of rules that are programmed or configured by hand.
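The three-step loop described above can be sketched as follows. This is a minimal illustration, not code from any real engine. `read_input`, `update` and `render` are hypothetical callables that stand in for the hand-written input, game-logic and rendering stages.

```python
from typing import Callable, List, TypeVar

State = TypeVar("State")
Action = TypeVar("Action")
Frame = TypeVar("Frame")


def run_game_loop(
    state: State,
    read_input: Callable[[], Action],          # (1) gather user input
    update: Callable[[State, Action], State],  # (2) update the game state
    render: Callable[[State], Frame],          # (3) render the state to pixels
    num_frames: int,
) -> List[Frame]:
    """Run a fixed number of iterations of a hand-coded game loop (hypothetical sketch)."""
    frames: List[Frame] = []
    for _ in range(num_frames):
        action = read_input()
        state = update(state, action)
        frames.append(render(state))
    return frames
```

A real engine runs this loop indefinitely at a target frame rate. In the approach studied in this paper, the hand-written state update and rendering are instead replaced by a neural model that predicts the next frame.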

In recent years, generative models have made significant progress in producing images and videos conditioned on multi-modal inputs such as text or images. At the forefront of this wave, diffusion models became the de facto standard for non-language media generation, with works like Dall-E (Ramesh et al., [https://arxiv.org/html/2408.14837v1#bib.bib25 2022]), Stable Diffusion (Rombach et al., [https://arxiv.org/html/2408.14837v1#bib.bib26 2022]) and Sora (Brooks et al., [https://arxiv.org/html/2408.14837v1#bib.bib6 2024]). At a glance, simulating the interactive worlds of video games may seem similar to video generation. However, ''interactive'' world simulation is more than just very fast video generation. Generation must be conditioned on a stream of input actions that only becomes available during generation itself, and this breaks some assumptions of existing diffusion model architectures. Notably, it requires generating frames auto-regressively, which tends to be unstable and leads to sampling divergence (see Section [https://arxiv.org/html/2408.14837v1#S3.SS2.SSS1 3.2.1]).

| Line 58: | Line 58: | ||

We train the model to minimize the diffusion loss with velocity parameterization (Salimans & Ho, [https://arxiv.org/html/2408.14837v1#bib.bib29 2022b]):

<math>\mathcal{L} = \mathbb{E}_{t,\epsilon,T}\left[ \left\| v(\epsilon,x_{0},t) - v_{\theta^{\prime}}\left(x_{t},t,\{\phi(o_{i<n})\},\{A_{emb}(a_{i<n})\}\right) \right\|_{2}^{2} \right]</math> (1)

where <math>T = \{ o_{i \leq n},a_{i \leq n}\} \sim \mathcal{T}_{agent}</math>, <math>x_{0} = \phi(o_{n})</math>, <math>t \sim \mathcal{U}(0,1)</math>, <math>\epsilon \sim \mathcal{N}(0,\mathbf{I})</math>, <math>x_{t} = \sqrt{\overline{\alpha}_{t}}x_{0} + \sqrt{1 - \overline{\alpha}_{t}}\epsilon</math>, <math>v(\epsilon,x_{0},t) = \sqrt{\overline{\alpha}_{t}}\epsilon - \sqrt{1 - \overline{\alpha}_{t}}x_{0}</math>, and <math>v_{\theta^{\prime}}</math> is the v-prediction output of the model <math>f_{\theta}</math>. The noise schedule <math>\overline{\alpha}_{t}</math> is linear, similar to Rombach et al. ([https://arxiv.org/html/2408.14837v1#bib.bib26 2022]).
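The following worked check makes these definitions concrete. Because <math>\overline{\alpha}_{t} + (1 - \overline{\alpha}_{t}) = 1</math>, they can be inverted: <math>x_{0} = \sqrt{\overline{\alpha}_{t}}x_{t} - \sqrt{1 - \overline{\alpha}_{t}}v</math> and <math>\epsilon = \sqrt{1 - \overline{\alpha}_{t}}x_{t} + \sqrt{\overline{\alpha}_{t}}v</math>.

The sketch below verifies these identities on latents stored as plain Python lists. The function names are hypothetical. <math>\overline{\alpha}_{t}</math> is passed in directly rather than computed from the linear schedule, whose exact parameters are not given here.

```python
import math
from typing import List, Sequence, Tuple


def _coefficients(alpha_bar: float) -> Tuple[float, float]:
    """Return (sqrt(alpha_bar), sqrt(1 - alpha_bar)) for a noise level alpha_bar in [0, 1]."""
    return math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)


def noisy_sample(x0: Sequence[float], eps: Sequence[float], alpha_bar: float) -> List[float]:
    """Forward diffusion: x_t = sqrt(abar) * x0 + sqrt(1 - abar) * eps."""
    a, s = _coefficients(alpha_bar)
    return [a * x + s * e for x, e in zip(x0, eps)]


def velocity_target(x0: Sequence[float], eps: Sequence[float], alpha_bar: float) -> List[float]:
    """Regression target of Eq. (1): v = sqrt(abar) * eps - sqrt(1 - abar) * x0."""
    a, s = _coefficients(alpha_bar)
    return [a * e - s * x for x, e in zip(x0, eps)]


def x0_from_velocity(x_t: Sequence[float], v: Sequence[float], alpha_bar: float) -> List[float]:
    """Invert the definitions: x0 = sqrt(abar) * x_t - sqrt(1 - abar) * v."""
    a, s = _coefficients(alpha_bar)
    return [a * xt - s * vv for xt, vv in zip(x_t, v)]


def eps_from_velocity(x_t: Sequence[float], v: Sequence[float], alpha_bar: float) -> List[float]:
    """Invert the definitions: eps = sqrt(1 - abar) * x_t + sqrt(abar) * v."""
    a, s = _coefficients(alpha_bar)
    return [s * xt + a * vv for xt, vv in zip(x_t, v)]


def v_loss(v_pred: Sequence[float], v_true: Sequence[float]) -> float:
    """Squared L2 error ||v_true - v_pred||_2^2 for one sample; Eq. (1) averages this."""
    return sum((t - p) ** 2 for p, t in zip(v_pred, v_true))
```

A v-prediction therefore determines both the clean latent and the noise, which is what a sampler needs at each denoising step.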

==== 3.2.1 Mitigating Auto-Regressive Drift Using Noise Augmentation ====

| Line 68: | Line 66: | ||

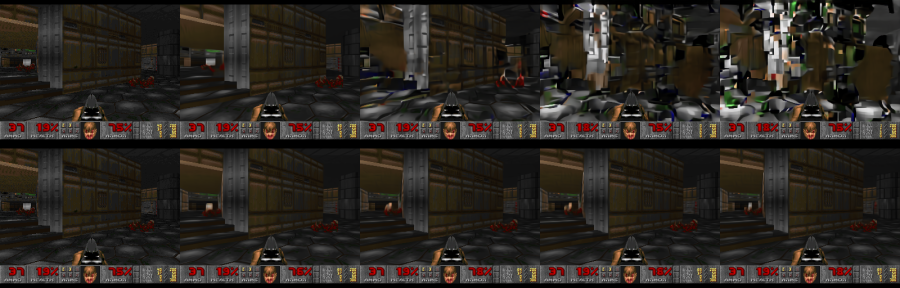

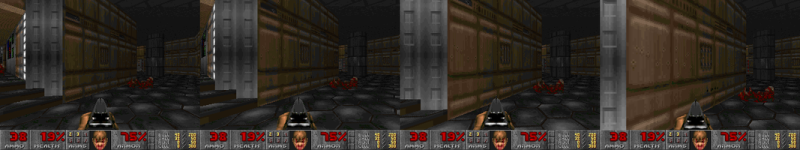

As shown in Figure [https://arxiv.org/html/2408.14837v1#S3.F4 4], the domain shift between training with teacher forcing and sampling auto-regressively leads to error accumulation and fast degradation in sample quality. To avoid this divergence, which is caused by applying the model auto-regressively, we corrupt the context frames during training. We add a varying amount of Gaussian noise to the encoded frames and provide the noise level as an input to the model, following Ho et al. ([https://arxiv.org/html/2408.14837v1#bib.bib13 2021]). To that end, we sample a noise level <math>\alpha</math> uniformly up to a maximal value, discretize it, and learn an embedding for each bucket (see Figure [https://arxiv.org/html/2408.14837v1#S3.F3 3]). This allows the network to correct information sampled in previous frames, and it is critical for preserving frame quality over time. During inference, the added noise level can be controlled to maximize quality, although we find that results improve significantly even with no added noise. We analyze the impact of this method in Section [https://arxiv.org/html/2408.14837v1#S5.SS2.SSS2 5.2.2].
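Below is a minimal sketch of the training-time augmentation on plain-list latents. The maximum level of 0.7 and the 10 buckets come from Section 4.2. The helper names and the variance-preserving mixing rule <math>\sqrt{1-\alpha}\,z + \sqrt{\alpha}\,\epsilon</math> are assumptions made for illustration only, since the text above says only that Gaussian noise of varying strength is added.

```python
import math
import random
from typing import List, Sequence, Tuple

MAX_NOISE_LEVEL = 0.7  # maximal noise level (Section 4.2)
NUM_BUCKETS = 10       # number of learned noise-level embeddings (Section 4.2)


def sample_noise_level(rng: random.Random,
                       max_level: float = MAX_NOISE_LEVEL,
                       num_buckets: int = NUM_BUCKETS) -> Tuple[float, int]:
    """Sample alpha ~ U(0, max_level) and return it with its discretized bucket index."""
    alpha = rng.uniform(0.0, max_level)
    # Clamp so that alpha == max_level (possible with float rounding) maps to the last bucket.
    bucket = min(int(alpha / max_level * num_buckets), num_buckets - 1)
    return alpha, bucket


def corrupt_context(frames: Sequence[Sequence[float]], alpha: float,
                    rng: random.Random) -> List[List[float]]:
    """Add Gaussian noise of strength alpha to every encoded context frame.

    ASSUMPTION: variance-preserving mix sqrt(1 - alpha) * z + sqrt(alpha) * eps.
    """
    keep, noise = math.sqrt(1.0 - alpha), math.sqrt(alpha)
    return [[keep * z + noise * rng.gauss(0.0, 1.0) for z in frame] for frame in frames]
```

During training, the model would receive the corrupted frames together with the embedding for `bucket`. At inference, setting `alpha = 0` leaves the context unchanged, which corresponds to the no-added-noise setting mentioned above.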

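[[File:2408.14837.figure.4.png|center|thumb|900x900px|Figure 4: Auto-regressive drift. Top: every 10th frame of a simple 50-frame trajectory in which the player does not move. Quality degrades rapidly after 20-30 steps. Bottom: the same trajectory with noise augmentation shows no quality degradation.]]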

==== 3.2.2 Latent Decoder Fine-tuning ====


=== 4.1 Agent Training ===

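The agent model is trained using PPO (Schulman et al., [https://arxiv.org/html/2408.14837v1#bib.bib30 2017]), with a simple CNN as the feature network, following Mnih et al. ([https://arxiv.org/html/2408.14837v1#bib.bib21 2015]). It is trained on CPU using the Stable Baselines 3 infrastructure (Raffin et al., [https://arxiv.org/html/2408.14837v1#bib.bib24 2021]). The agent receives downscaled versions of the frame images and the in-game map, each at a resolution of 160x120. The agent also has access to the last 32 actions it performed. The feature network computes a representation of size 512 for each image. PPO's actor and critic are 2-layer MLP heads on top of the concatenation of the image feature network's outputs and the sequence of past actions. We train the agent to play the game using the Vizdoom environment (Wydmuch et al., [https://arxiv.org/html/2408.14837v1#bib.bib37 2019]). We run 8 games in parallel, each with a replay buffer size of 512, a discount factor of <math>\gamma = 0.99</math>, and an entropy coefficient of <math>0.1</math>. In each iteration, the network is trained for 10 epochs with a batch size of 64 and a learning rate of 1e-4. We perform a total of 10M environment steps.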
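The hyperparameters above can be gathered in one place, which also makes the implied PPO bookkeeping explicit. In this sketch, the field names mirror the keyword arguments of Stable Baselines 3's `PPO` class (`n_steps`, `batch_size`, `n_epochs`, `learning_rate`, `gamma`, `ent_coef`). Reading the per-game "replay buffer size" as `n_steps` is our assumption. The derived counts are plain arithmetic.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class AgentPPOConfig:
    """Agent-training hyperparameters from Section 4.1 (field names are illustrative)."""
    n_envs: int = 8                        # games run in parallel
    n_steps: int = 512                     # per-game buffer size (assumed to map to SB3 n_steps)
    batch_size: int = 64
    n_epochs: int = 10
    learning_rate: float = 1e-4
    gamma: float = 0.99                    # discount factor
    ent_coef: float = 0.1                  # entropy coefficient
    total_timesteps: int = 10_000_000
    frame_size: Tuple[int, int] = (160, 120)  # downscaled frame and map resolution
    action_history: int = 32               # number of past actions given to the agent
    feature_dim: int = 512                 # CNN representation size per image

    @property
    def rollout_size(self) -> int:
        """Transitions collected per PPO iteration across all games."""
        return self.n_envs * self.n_steps

    @property
    def minibatches_per_epoch(self) -> int:
        return self.rollout_size // self.batch_size

    @property
    def gradient_steps_per_iteration(self) -> int:
        return self.n_epochs * self.minibatches_per_epoch

    @property
    def iterations(self) -> int:
        """Iterations needed to reach total_timesteps, rounding up (integer ceil)."""
        return -(-self.total_timesteps // self.rollout_size)
```

Under this reading, each iteration collects 8 × 512 = 4,096 transitions and performs 10 × 64 = 640 minibatch updates. Covering the 10M environment steps then takes roughly 2,442 iterations.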

=== 4.2 Generative Model Training ===

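We train all simulation models from a pretrained checkpoint of Stable Diffusion 1.4, unfreezing all U-Net parameters. We use a batch size of 128 and a constant learning rate of 2e-5, with the Adafactor optimizer without weight decay (Shazeer & Stern, [https://arxiv.org/html/2408.14837v1#bib.bib31 2018]) and gradient clipping at 1.0. We change the diffusion loss parameterization to v-prediction (Salimans & Ho, [https://arxiv.org/html/2408.14837v1#bib.bib28 2022a]). We drop the context-frame conditioning with probability 0.1 to allow CFG during inference. We train on 128 TPU-v5e devices with data parallelization. Unless noted otherwise, all results in this paper are after 700,000 training steps. For noise augmentation (Section [https://arxiv.org/html/2408.14837v1#S3.SS2.SSS1 3.2.1]), we use a maximal noise level of 0.7 with 10 embedding buckets. When optimizing the latent decoder, we use a batch size of 2,048; all other training parameters are identical to those of the denoiser. For training data, unless noted otherwise, we use all trajectories played by the agent during RL training, as well as evaluation data collected during training. Overall, we generate 900M frames for training. All image frames (during training, inference, and conditioning) have a resolution of 320x240, padded to 320x256. We use a context length of 64, i.e. the model is provided with its own last 64 predictions as well as the last 64 actions.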
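Dropping the context with probability 0.1 trains a single network to act as both a conditional and an unconditional model. Classifier-free guidance (Ho & Salimans, 2022) needs exactly that at inference time. In this minimal sketch, the helper names and the representation of a dropped context as `None` are assumptions. No guidance weight is taken from the paper.

```python
import random
from typing import List, Optional, Sequence, TypeVar

T = TypeVar("T")

CONTEXT_DROP_PROB = 0.1  # probability of dropping the context frames (Section 4.2)


def maybe_drop_context(context: Sequence[T], rng: random.Random,
                       p: float = CONTEXT_DROP_PROB) -> Optional[List[T]]:
    """Training: return None (an unconditional example) with probability p, else the context."""
    return None if rng.random() < p else list(context)


def guided_prediction(pred_cond: Sequence[float], pred_uncond: Sequence[float],
                      guidance_weight: float) -> List[float]:
    """Inference: classifier-free guidance, uncond + w * (cond - uncond), elementwise."""
    return [u + guidance_weight * (c - u) for c, u in zip(pred_cond, pred_uncond)]
```

With `guidance_weight = 1` the result equals the conditional prediction. Larger weights push the output further away from the unconditional prediction, at the cost of one extra forward pass per denoising step.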

== 5 Results ==

=== 5.1 仿真质量 === | |||

=== 5.1 | |||

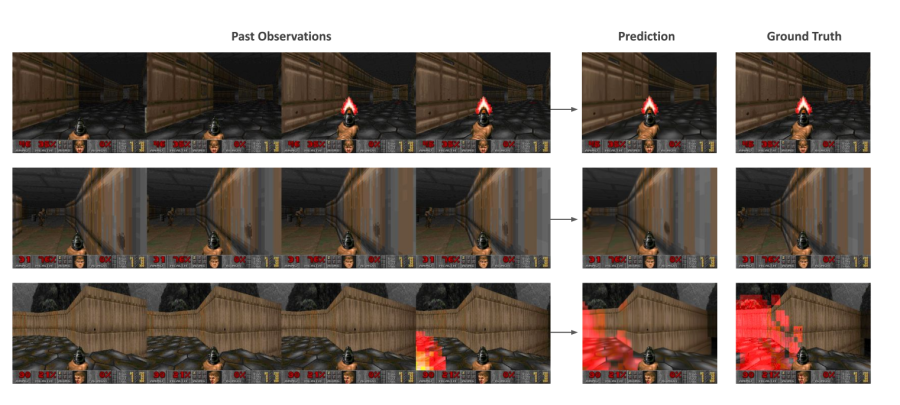

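Overall, in terms of image quality, our method achieves simulation quality comparable to the original game over long trajectories. For short trajectories, human raters are only slightly better than random chance at distinguishing clips of the simulation from clips of the actual game.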

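'''Image Quality.''' We measure LPIPS (Zhang et al., [https://arxiv.org/html/2408.14837v1#bib.bib40 2018]) and PSNR using the teacher-forcing setup described in Section [https://arxiv.org/html/2408.14837v1#S2 2]. In this setup, we sample an initial state and predict a single frame based on a trajectory of ground-truth past observations. Evaluated on 2048 random trajectories from 5 different levels, our model achieves a PSNR of <math>29.43</math> and an LPIPS of <math>0.249</math>. This PSNR value is similar to that of lossy JPEG compression with a quality setting of 20-30 (Petric & Milinkovic, [https://arxiv.org/html/2408.14837v1#bib.bib22 2018]). Figure [https://arxiv.org/html/2408.14837v1#S5.F5 5] shows examples of model predictions and the corresponding ground-truth samples.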
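For reference, PSNR between a prediction and its ground-truth frame is <math>10\log_{10}(\mathrm{MAX}^2/\mathrm{MSE})</math> decibels. The sketch below computes it on flat lists of pixel intensities. The default value range of [0, 1] is an assumption; for 8-bit images, pass `max_value=255`. LPIPS is not sketched because it requires a pretrained network.

```python
import math
from typing import Sequence


def psnr(pred: Sequence[float], target: Sequence[float], max_value: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB; returns +inf when the inputs are identical."""
    if len(pred) != len(target) or not pred:
        raise ValueError("inputs must be non-empty and the same length")
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    if mse == 0.0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / mse)
```

Under the [0, 1] assumption, the reported 29.43 dB corresponds to a mean squared error of about <math>10^{-2.943} \approx 1.1 \times 10^{-3}</math> per pixel.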


[[File:2408.14837.figure.5.png|center|thumb|900x900px|图 5:模型预测与地面实况对比。仅显示过去观测上下文的最后 4 帧。]] | |||

[[File:2408.14837.figure.5.png|center|thumb|900x900px| | |||

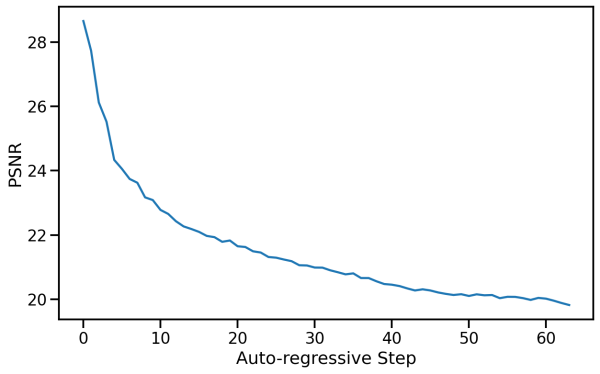

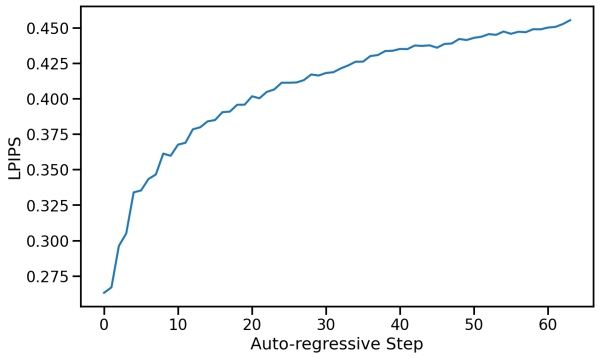

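'''Video Quality.''' We use the auto-regressive setup described in Section [https://arxiv.org/html/2408.14837v1#S2 2]. In this setup, we iteratively sample frames following the sequence of actions defined by the ground-truth trajectory, while conditioning the model on its own past predictions. When sampled auto-regressively, the predicted and ground-truth trajectories often diverge after a few steps, mostly because small differences in movement velocity between frames accumulate in each trajectory. As a result, per-frame PSNR gradually decreases and per-frame LPIPS gradually increases, as shown in Figure [https://arxiv.org/html/2408.14837v1#S5.F6 6]. The predicted trajectory still resembles the actual game in content and image quality, but per-frame metrics are limited in their ability to capture this (see Appendix [https://arxiv.org/html/2408.14837v1#A1.SS1 A.1] for samples of auto-regressively generated trajectories).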
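The auto-regressive evaluation loop can be sketched as follows. `model` is a hypothetical callable that maps the most recent frames and actions to the next frame. A `deque` with `maxlen=64` keeps only the last 64 predictions and the last 64 actions, matching the context length in Section 4.2. Because each prediction is fed back as context, small errors compound over the rollout; this is the drift discussed in Section 3.2.1.

```python
from collections import deque
from typing import Callable, List, Sequence, TypeVar

Frame = TypeVar("Frame")
Action = TypeVar("Action")


def autoregressive_rollout(
    model: Callable[[List[Frame], List[Action]], Frame],
    init_frames: Sequence[Frame],
    init_actions: Sequence[Action],
    future_actions: Sequence[Action],
    context_len: int = 64,
) -> List[Frame]:
    """Generate one frame per entry of future_actions, conditioning on the model's own outputs.

    init_frames holds ground-truth observations o_0..o_{k-1}; init_actions holds the
    actions taken between them (a_0..a_{k-2}). Each step appends the action taken at
    the newest frame and then predicts the following frame.
    """
    frames = deque(init_frames, maxlen=context_len)
    actions = deque(init_actions, maxlen=context_len)
    predictions: List[Frame] = []
    for action in future_actions:
        actions.append(action)                           # action taken at the newest frame
        next_frame = model(list(frames), list(actions))  # predict the following frame
        frames.append(next_frame)                        # feed the prediction back as context
        predictions.append(next_frame)
    return predictions
```

Per-frame metrics are then computed between `predictions` and the ground-truth frames that follow the same actions.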

[[File:2408.14837.figure.6.1.png|center|thumb|600x600px|Figure 6: Auto-regressive evaluation. PSNR over 64 auto-regressive steps.]]

[[File:2408.14837.figure.6.2.png|center|thumb|600x600px|Figure 6: Auto-regressive evaluation. LPIPS over 64 auto-regressive steps.]]

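We therefore measure FVD (Unterthiner et al., [https://arxiv.org/html/2408.14837v1#bib.bib35 2019]) on a random holdout of 512 trajectories. FVD measures the distance between the distribution of predicted trajectories and the distribution of ground-truth trajectories. We compute it for simulations of 16 frames (0.8 seconds) and 32 frames (1.6 seconds). For 16 frames, our model obtains an FVD of <math>114.02</math>. For 32 frames, it obtains an FVD of <math>186.23</math>.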
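For reference, FVD is the Fréchet distance between two multivariate Gaussians fitted to video-level features of the real and the generated clips. Unterthiner et al. extract these features with a pretrained I3D network. With feature means <math>\mu_r, \mu_g</math> and covariances <math>\Sigma_r, \Sigma_g</math>:

<math display="block">\mathrm{FVD} = \left\| \mu_r - \mu_g \right\|_2^2 + \operatorname{Tr}\left( \Sigma_r + \Sigma_g - 2\left( \Sigma_r \Sigma_g \right)^{1/2} \right)</math>

Lower is better. The distance is zero only when the two fitted Gaussians coincide.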

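'''Human Evaluation.''' As another measure of simulation quality, we showed 10 human raters 130 random short clips (1.6 seconds and 3.2 seconds long) of our simulation side by side with the real game. The raters were asked to identify the real game (see Figure [https://arxiv.org/html/2408.14837v1#A1.F14 14] in Appendix [https://arxiv.org/html/2408.14837v1#A1.SS6 A.6]). The raters chose the actual game over the simulation only 58% and 60% of the time for the 1.6-second and 3.2-second clips, respectively.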

=== 5.2 Ablations ===

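To evaluate the importance of the different components of our method, we sample trajectories from the evaluation dataset and compute LPIPS and PSNR between the ground-truth and predicted frames.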

==== 5.2.1 Context Length ====

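We evaluate the impact of changing the number <math>N</math> of past observations in the conditioning context by training models with <math>N \in \{ 1,2,4,8,16,32,64\}</math> (recall that our method uses <math>N = 64</math>). This changes both the number of historical frames and the number of historical actions. We train each model for 200,000 steps with the decoder frozen and evaluate on test-set trajectories from 5 levels. The results are shown in Table [https://arxiv.org/html/2408.14837v1#S5.T1 1]. As expected, generation quality improves with context length. Interestingly, although the improvement is large at first (e.g. from 1 to 2 frames), quality quickly approaches an asymptote, and further increasing the context length yields only small gains. This is somewhat surprising, because even at our maximal context length the model only has access to a little over 3 seconds of history. Notably, we observe that much of the game state persists for much longer periods (see Section [https://arxiv.org/html/2408.14837v1#S7 7]). The length of the conditioning context is therefore an important limitation. Table [https://arxiv.org/html/2408.14837v1#S5.T1 1] suggests that addressing it will likely require changing the model architecture to support longer contexts efficiently, and selecting the past frames to condition on more carefully. We leave this for future work.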

Table 1: Number of history frames. We ablate the number of history frames used as context on 8912 test-set examples from 5 levels. More frames generally improve both PSNR and LPIPS.

{| class="wikitable"
! History Context Length
! PSNR <math>\uparrow</math>
! LPIPS <math>\downarrow</math>
| <math>0.358 \pm 0.001</math>
|}

==== 5.2.2 噪声增强 ==== | |||

==== 5.2.2 | |||

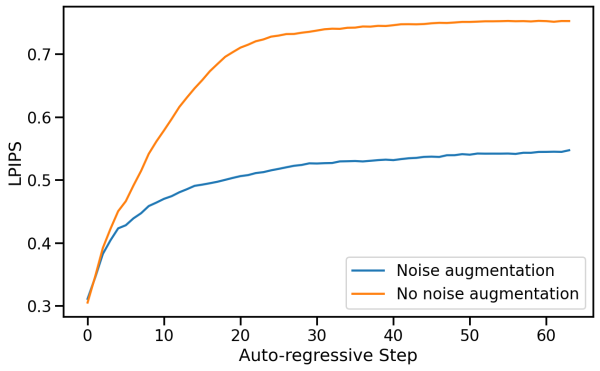

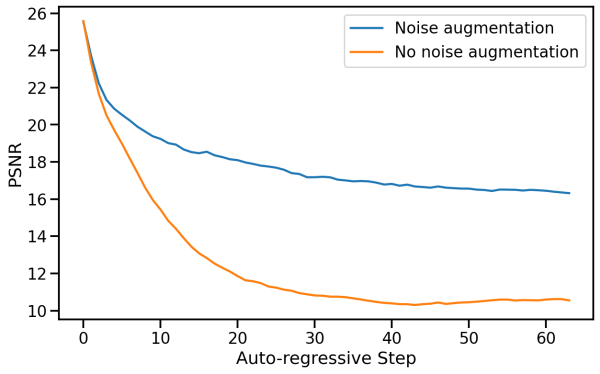

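To ablate the impact of noise augmentation, we train a model without added noise. We evaluate both our standard noise-augmented model and the model without added noise (each after 200,000 training steps) auto-regressively. We then compute PSNR and LPIPS between the predicted and ground-truth frames over a random holdout of 512 trajectories. Figure [https://arxiv.org/html/2408.14837v1#S5.F7 7] reports the average metric values for each auto-regressive step, up to a total of 64 frames.

Without noise augmentation, the LPIPS distance to the ground truth increases rapidly and PSNR drops, indicating that the simulation diverges from the ground truth.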
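The per-step curves average a metric across trajectories at each auto-regressive step. Below is a minimal sketch, assuming the metric values are stored as one list per trajectory; the layout and the function name are illustrative.

```python
from statistics import fmean
from typing import List, Sequence


def per_step_mean(metric_by_trajectory: Sequence[Sequence[float]],
                  num_steps: int = 64) -> List[float]:
    """Average a per-frame metric over trajectories for each auto-regressive step.

    metric_by_trajectory[i][k] is the metric for trajectory i at step k; every
    trajectory must cover at least num_steps steps.
    """
    if any(len(traj) < num_steps for traj in metric_by_trajectory):
        raise ValueError("every trajectory needs at least num_steps values")
    return [fmean(traj[k] for traj in metric_by_trajectory) for k in range(num_steps)]
```

Plotting the returned list against the step index gives one curve per model, such as the models with and without noise augmentation.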

[[File:2408.14837.figure.7.1.png|center|thumb|600x600px|Figure 7: Impact of noise augmentation. Average LPIPS for each auto-regressive step (lower is better). Without noise augmentation, quality degrades rapidly after 10-20 frames; noise augmentation prevents this.]]

[[File:2408.14837.figure.7.2.png|center|thumb|600x600px|Figure 7: Impact of noise augmentation. Average PSNR for each auto-regressive step (higher is better). Without noise augmentation, quality degrades rapidly after 10-20 frames; noise augmentation prevents this.]]

==== 5.2.3 Agent Play ====

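We compare training on agent-generated data with training on data generated by a random policy. For the random policy, we sample actions from a uniform categorical distribution that does not depend on the observations. For this comparison, both models and their decoders are …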

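Overall, we observe that training the model on random trajectories works surprisingly well, but it is limited by the random policy's ability to explore. When comparing single-frame generation, the agent performs only slightly better, with a PSNR of 25.06 versus 24.42 for the random policy. When comparing a frame after 3 seconds of auto-regressive generation, the gap widens to 19.02 versus 16.84. When playing with the model manually, we found that some areas are easy for both, some are hard for both, and in some the agent performs much better. Based on this, we manually split 456 examples into three groups (easy, medium, and hard) according to their distance from the game's starting position. On the easy and hard sets, the agent performs only slightly better than random. On the medium set, as expected, the agent performs much better (see Table [https://arxiv.org/html/2408.14837v1#S5.T2 2]). See Figure [https://arxiv.org/html/2408.14837v1#A1.F13 13] in Appendix [https://arxiv.org/html/2408.14837v1#A1.SS5 A.5] for the scores of a single session of human play.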
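The random baseline policy ignores the observation entirely and samples each action from a uniform categorical distribution. In this minimal sketch, `num_actions` must be supplied by the caller, because the size of the agent's action space is not given in this section.

```python
import random
from typing import Any


def random_policy(observation: Any, num_actions: int, rng: random.Random) -> int:
    """Sample an action index uniformly from {0, ..., num_actions - 1}."""
    del observation  # deliberately unused: the policy does not depend on what it sees
    return rng.randrange(num_actions)
```

Because the sampled action never depends on the observation, the policy tends to stay near the start of a level. This is consistent with its weaker results on the medium split above.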

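'''Table 2: Performance on different difficulty levels.''' We compare models trained on agent-generated data and on randomly generated data across the easy, medium, and hard splits. The easy and medium splits have 112 examples each, and the hard split has 232 examples. Metrics are computed for each trajectory on a single frame after 3 seconds.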

{| class="wikitable"
! Difficulty Level
! Data Generation Policy
! PSNR <math>\uparrow</math>
! LPIPS <math>\downarrow</math>
|-
| rowspan="2" | Easy
| Agent
| <math>20.94 \pm 0.76</math>
| <math>0.48 \pm 0.01</math>
|-
| Random
| <math>20.20 \pm 0.83</math>
| <math>0.48 \pm 0.01</math>
|-
| rowspan="2" | Medium
| Agent
| <math>20.21 \pm 0.36</math>
| <math>0.50 \pm 0.01</math>
|-
| Random
| <math>16.50 \pm 0.41</math>
| <math>0.59 \pm 0.01</math>
|-
| rowspan="2" | Hard
| Agent
| <math>17.51 \pm 0.35</math>
| <math>0.60 \pm 0.01</math>
|-
| Random
| <math>15.39 \pm 0.43</math>
| <math>0.61 \pm 0.00</math>
|}

== 6 Related Work ==

===== Interactive 3D Simulation =====

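Simulating the visual and physical processes of 2D and 3D environments and allowing interactive exploration of them is an extensively developed field in computer graphics (Akenine-Möller et al., [https://arxiv.org/html/2408.14837v1#bib.bib1 2018]). Game engines such as Unreal and Unity are software that processes representations of scene geometry and renders a stream of images in response to user interactions. The game engine is responsible for keeping track of all world state, e.g. the player's position and movement, objects, character animations, and lighting. It also tracks the game logic, e.g. points gained by accomplishing game objectives. Film and television productions use variants of ray tracing (Shirley & Morley, [https://arxiv.org/html/2408.14837v1#bib.bib32 2008]), which are too slow and compute-intensive for real-time applications. In contrast, game engines must maintain very high frame rates (typically 30-60 FPS), and therefore rely on highly optimized polygon rasterization, often accelerated by GPUs. Physical effects such as shadows, particles, and lighting are usually implemented with efficient heuristics rather than physically accurate simulation.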

===== Neural 3D Simulation =====

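Neural methods for reconstructing 3D representations have made significant advances in recent years. NeRFs (Mildenhall et al., [https://arxiv.org/html/2408.14837v1#bib.bib20 2020]) parameterize radiance fields with a deep neural network that is optimized for a specific scene from a set of images taken from various camera poses. Once trained, novel views of the scene can be sampled using volume rendering. Gaussian Splatting (Kerbl et al., [https://arxiv.org/html/2408.14837v1#bib.bib15 2023]) builds on NeRFs but represents scenes with 3D Gaussians and an adapted rasterization method, which enables faster training and rendering. Although these methods demonstrate impressive reconstruction results and real-time interactivity, they are usually limited to static scenes.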

===== Video Diffusion Models =====

Diffusion models have achieved state-of-the-art results in text-to-image generation (Saharia et al., [https://arxiv.org/html/2408.14837v1#bib.bib27 2022]; Rombach et al., [https://arxiv.org/html/2408.14837v1#bib.bib26 2022]; Ramesh et al., [https://arxiv.org/html/2408.14837v1#bib.bib25 2022]; Podell et al., [https://arxiv.org/html/2408.14837v1#bib.bib23 2023]). This line of work has also been applied to text-to-video generation (Ho et al., [https://arxiv.org/html/2408.14837v1#bib.bib14 2022]; Blattmann et al., [https://arxiv.org/html/2408.14837v1#bib.bib5 2023b]; [https://arxiv.org/html/2408.14837v1#bib.bib4 a]; Gupta et al., [https://arxiv.org/html/2408.14837v1#bib.bib9 2023]; Girdhar et al., [https://arxiv.org/html/2408.14837v1#bib.bib8 2023]; Bar-Tal et al., [https://arxiv.org/html/2408.14837v1#bib.bib3 2024]). Despite impressive progress in realism, text adherence, and temporal consistency, video diffusion models remain too slow for real-time applications. Our work extends this line of research and adapts it for real-time generation, conditioned auto-regressively on a history of past observations and actions.

===== Game Simulation and World Models =====

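Several works have attempted to train game simulation models with action inputs. Yang et al. ([https://arxiv.org/html/2408.14837v1#bib.bib38 2023]) build a diverse dataset of real-world and simulated videos and train a diffusion model to predict a continuation video, given a previous video segment and a textual description of an action. Menapace et al. ([https://arxiv.org/html/2408.14837v1#bib.bib18 2021]) and Bruce et al. ([https://arxiv.org/html/2408.14837v1#bib.bib7 2024]) focus on unsupervised learning of actions from videos. Menapace et al. ([https://arxiv.org/html/2408.14837v1#bib.bib19 2024]) convert textual prompts into game states, which are then converted into a 3D representation using NeRF. Unlike these works, we focus on ''interactive, playable, real-time simulation'', and we demonstrate robustness over long-horizon trajectories. We use an RL agent to explore the game environment and create rollouts of observations and interactions for training our interactive game model.

Another line of work learns a predictive model of the environment and uses it to train an RL agent. Ha & Schmidhuber ([https://arxiv.org/html/2408.14837v1#bib.bib10 2018]) train a Variational Auto-Encoder (Kingma & Welling, [https://arxiv.org/html/2408.14837v1#bib.bib17 2014]) to encode game frames into latent vectors, and then use an RNN to mimic the VizDoom game environment, training on random rollouts from a random policy (i.e. selecting actions at random). A controller policy is then learned by playing within the "hallucinated" environment. Hafner et al. ([https://arxiv.org/html/2408.14837v1#bib.bib11 2020]) demonstrate that an RL agent can be trained entirely on episodes generated by a world model learned in latent space. Also close to our work is Kim et al. ([https://arxiv.org/html/2408.14837v1#bib.bib16 2020]), who model the world state with an LSTM architecture coupled with a convolutional decoder that produces output frames, trained jointly under an adversarial objective. While this approach produces reasonable results for simple games such as PacMan, it struggles to simulate the complex environment of VizDoom and produces blurry samples. In contrast, GameNGen generates samples comparable to those of the original game; see Figure [https://arxiv.org/html/2408.14837v1#S1.F2 2]. Finally, concurrently with our work, Alonso et al. ([https://arxiv.org/html/2408.14837v1#bib.bib2 2024]) train a diffusion world model that predicts the next observation given the observation history, and iteratively train the world model and an RL model on Atari games.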

===== DOOM =====

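DOOM, released in 1993, revolutionized the gaming industry. Its groundbreaking 3D graphics technology made it a cornerstone of the first-person shooter genre, and it influenced countless other games. DOOM has been studied in numerous research works. It has an open-source implementation and a native resolution low enough for small models to simulate, while being complex enough to be a challenging test case. Finally, the authors spent countless hours of their youth playing the game, so using it in this work was an obvious choice.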

== 7 Discussion ==

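'''Summary.''' We introduced ''GameNGen'' and demonstrated that high-quality real-time gameplay at 20 frames per second is possible on a neural model. We also provided a recipe for converting interactive software, such as a computer game, into a neural model.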

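'''Limitations.''' GameNGen has a limited amount of memory. The model only has access to a little over 3 seconds of history, so it is remarkable that much of the game logic persists over much longer time horizons. Some of the game state is persisted through screen pixels (e.g. ammo and health tallies, available weapons), and the model likely learns strong heuristics that allow meaningful generalization. For example, the model can learn to infer the player's location from the rendered view. From the ammo and health tallies, it might infer whether the player has already been through an area and defeated the enemies there. Nevertheless, it is easy to create situations where this context length is insufficient. Further increasing the context size with our current architecture yields only marginal benefits (Section [https://arxiv.org/html/2408.14837v1#S5.SS2.SSS1 5.2.1]), so the model's short context remains an important limitation. The second important limitation is the remaining gap between the agent's behavior and that of human players. For example, even at the end of training, our agent does not explore all of the game's locations and interactions, which leads to erroneous behavior in those cases.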

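'''Future Work.''' We demonstrate ''GameNGen'' on the classic game DOOM. It would be interesting to test it on other games, or more generally on other interactive software systems. Nothing in our technique is specific to DOOM except the reward function of the RL agent, and we plan to address this in future work. Although ''GameNGen'' maintains game state accurately, it is not perfect, as discussed in the limitations above, and a more sophisticated architecture may be needed to mitigate these issues. ''GameNGen'' currently has only a limited memory, and expanding that memory effectively could be critical for more complex games or software. ''GameNGen'' runs at 20 or 50 FPS on a TPUv5, which is faster than the original DOOM ran on some of the authors' 80386 machines at the time! It would be interesting to experiment with further optimization techniques to reach higher frame rates and to run on consumer hardware.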

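'''Towards a New Paradigm for Interactive Video Games.''' Today, video games are ''programmed'' by humans. ''GameNGen'' is a proof of concept for one part of a new paradigm, in which games are the weights of a neural model rather than lines of code. ''GameNGen'' demonstrates an architecture and model weights that allow a neural model to run a complex game (DOOM) interactively and effectively on existing hardware. Many important questions remain, but we hope this paradigm can bring significant benefits. For example, video game development under this paradigm might be cheaper and more accessible, with games developed and edited through textual descriptions or example images. A small part of this vision, namely modifying existing games or creating new behaviors for them, may be achievable in the short term. For instance, we might be able to convert a set of frames into a new playable level, or create a new character from example images alone, without writing code. Other advantages of this paradigm include strong guarantees on frame rate and memory footprint. We have not yet experimented in these directions and much more work is needed, but we are excited to try! We hope that this small step will someday contribute to a meaningful improvement in people's experience with video games, and perhaps more broadly in their everyday interactions with interactive software systems.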

== Acknowledgements ==

We sincerely thank Eyal Segalis, Eyal Molad, Matan Kalman, Nataniel Ruiz, Amir Hertz, Matan Cohen, Yossi Matias, Yael Pritch, Danny Lumen, Valerie Nygaard, the Theta Labs and Google Research teams, and our families for their insightful feedback, ideas, suggestions, and support.

== Contributions ==

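* '''Dani Valevski''': Developed much of the codebase, tuned parameters and details across the system, and added autoencoder fine-tuning, agent training, and distillation.
* '''Yaniv Leviathan''': Proposed the project, method, and architecture, developed the initial implementation, and was a key contributor to implementation and writing.
* '''Moab Arar''': Led auto-regressive stabilization with noise augmentation, ran many of the ablations, and created the dataset of human-play data.
* '''Shlomi Fruchter''': Proposed the project, method, and architecture. Led the project and was responsible for the initial implementation using DOOM, main manuscript writing, evaluation metrics, and the random-policy data pipeline.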

Correspondence to <code>shlomif@google.com</code> and <code>leviathan@google.com</code>.

== References ==

* Akenine-Möller et al. (2018) Tomas Akenine-Möller, Eric Haines, and Naty Hoffman. ''Real-Time Rendering, Fourth Edition''. A. K. Peters, Ltd., USA, 4th edition, 2018. ISBN 0134997832.

* Alonso et al. (2024) Eloi Alonso, Adam Jelley, Vincent Micheli, Anssi Kanervisto, Amos Storkey, Tim Pearce, and François Fleuret. Diffusion for world modeling: Visual details matter in Atari, 2024.

* Bar-Tal et al. (2024) Omer Bar-Tal, Hila Chefer, Omer Tov, Charles Herrmann, Roni Paiss, Shiran Zada, Ariel Ephrat, Junhwa Hur, Guanghui Liu, Amit Raj, Yuanzhen Li, Michael Rubinstein, Tomer Michaeli, Oliver Wang, Deqing Sun, Tali Dekel, and Inbar Mosseri. Lumiere: A space-time diffusion model for video generation, 2024. URL: https://arxiv.org/abs/2401.12945.

* Blattmann et al. (2023a) Andreas Blattmann, Tim Dockhorn, Sumith Kulal, Daniel Mendelevitch, Maciej Kilian, Dominik Lorenz, Yam Levi, Zion English, Vikram Voleti, Adam Letts, Varun Jampani, and Robin Rombach. Stable video diffusion: Scaling latent video diffusion models to large datasets, 2023a. URL: https://arxiv.org/abs/2311.15127.

* Blattmann et al. (2023b) Andreas Blattmann, Robin Rombach, Huan Ling, Tim Dockhorn, Seung Wook Kim, Sanja Fidler, and Karsten Kreis. Align your latents: High-resolution video synthesis with latent diffusion models, 2023b. URL: https://arxiv.org/abs/2304.08818.

* Brooks et al. (2024) Tim Brooks, Bill Peebles, Connor Holmes, Will DePue, Yufei Guo, Li Jing, David Schnurr, Joe Taylor, Troy Luhman, Eric Luhman, Clarence Ng, Ricky Wang, and Aditya Ramesh. Video generation models as world simulators, 2024. URL: https://openai.com/research/video-generation-models-as-world-simulators.

* Bruce et al. (2024) Jake Bruce, Michael Dennis, Ashley Edwards, Jack Parker-Holder, Yuge Shi, Edward Hughes, Matthew Lai, Aditi Mavalankar, Richie Steigerwald, Chris Apps, Yusuf Aytar, Sarah Bechtle, Feryal Behbahani, Stephanie Chan, Nicolas Heess, Lucy Gonzalez, Simon Osindero, Sherjil Ozair, Scott Reed, Jingwei Zhang, Konrad Zolna, Jeff Clune, Nando de Freitas, Satinder Singh, and Tim Rocktäschel. Genie: Generative interactive environments, 2024. URL: https://arxiv.org/abs/2402.15391.

* Girdhar et al. (2023) Rohit Girdhar, Mannat Singh, Andrew Brown, Quentin Duval, Samaneh Azadi, Sai Saketh Rambhatla, Akbar Shah, Xi Yin, Devi Parikh, and Ishan Misra. Emu video: Factorizing text-to-video generation by explicit image conditioning, 2023. URL: https://arxiv.org/abs/2311.10709.

* Gupta et al. (2023) Agrim Gupta, Lijun Yu, Kihyuk Sohn, Xiuye Gu, Meera Hahn, Li Fei-Fei, Irfan Essa, Lu Jiang, and José Lezama. Photorealistic video generation with diffusion models, 2023. URL: https://arxiv.org/abs/2312.06662.

* Ha & Schmidhuber (2018) David Ha and Jürgen Schmidhuber. World models, 2018.

* Hafner et al. (2020) Danijar Hafner, Timothy Lillicrap, Jimmy Ba, and Mohammad Norouzi. Dream to control: Learning behaviors by latent imagination, 2020. URL: https://arxiv.org/abs/1912.01603.

* Ho & Salimans (2022) Jonathan Ho and Tim Salimans. Classifier-free diffusion guidance, 2022. URL: https://arxiv.org/abs/2207.12598.

* Ho et al. (2021) Jonathan Ho, Chitwan Saharia, William Chan, David J Fleet, Mohammad Norouzi, and Tim Salimans. Cascaded diffusion models for high fidelity image generation. ''arXiv preprint arXiv:2106.15282'', 2021.

* Ho et al. (2022) Jonathan Ho, William Chan, Chitwan Saharia, Jay Whang, Ruiqi Gao, Alexey A. Gritsenko, Diederik P. Kingma, Ben Poole, Mohammad Norouzi, David J. Fleet, and Tim Salimans. Imagen video: High definition video generation with diffusion models. ''ArXiv'', abs/2210.02303, 2022. URL: https://api.semanticscholar.org/CorpusID:252715883.

* Kerbl et al. (2023) Bernhard Kerbl, Georgios Kopanas, Thomas Leimkühler, and George Drettakis. 3D Gaussian splatting for real-time radiance field rendering. ''ACM Transactions on Graphics'', 42(4), July 2023. URL: https://repo-sam.inria.fr/fungraph/3d-gaussian-splatting/.

* Kim et al. (2020) Seung Wook Kim, Yuhao Zhou, Jonah Philion, Antonio Torralba, and Sanja Fidler. Learning to Simulate Dynamic Environments with GameGAN. In ''IEEE Conference on Computer Vision and Pattern Recognition (CVPR)'', Jun. 2020.

* Kingma & Welling (2014) Diederik P. Kingma and Max Welling. Auto-Encoding Variational Bayes. In ''2nd International Conference on Learning Representations, ICLR 2014, Banff, AB, Canada, April 14-16, 2014, Conference Track Proceedings'', 2014.

* Menapace et al. (2021) Willi Menapace, Stéphane Lathuilière, Sergey Tulyakov, Aliaksandr Siarohin, and Elisa Ricci. Playable video generation, 2021. URL: https://arxiv.org/abs/2101.12195.

* Menapace et al. (2024) Willi Menapace, Aliaksandr Siarohin, Stéphane Lathuilière, Panos Achlioptas, Vladislav Golyanik, Sergey Tulyakov, and Elisa Ricci. Promptable game models: Text-guided game simulation via masked diffusion models. ''ACM Transactions on Graphics'', 43(2):1–16, January 2024. doi: [http://dx.doi.org/10.1145/3635705 10.1145/3635705].

* Mildenhall et al. (2020) Ben Mildenhall, Pratul P. Srinivasan, Matthew Tancik, Jonathan T. Barron, Ravi Ramamoorthi, and Ren Ng. NeRF: Representing scenes as neural radiance fields for view synthesis. In ''ECCV'', 2020.

* Mnih et al. (2015) Volodymyr Mnih, Koray Kavukcuoglu, David Silver, Andrei A. Rusu, Joel Veness, Marc G. Bellemare, Alex Graves, Martin A. Riedmiller, Andreas Kirkeby Fidjeland, Georg Ostrovski, Stig Petersen, Charlie Beattie, Amir Sadik, Ioannis Antonoglou, Helen King, Dharshan Kumaran, Daan Wierstra, Shane Legg, and Demis Hassabis. Human-level control through deep reinforcement learning. ''Nature'', 518:529–533, 2015. URL: https://api.semanticscholar.org/CorpusID:205242740.

* Petric & Milinkovic (2018) Danko Petric and Marija Milinkovic. Comparison between CS and JPEG in terms of image compression, 2018. URL: https://arxiv.org/abs/1802.05114.

* Podell et al. (2023) Dustin Podell, Zion English, Kyle Lacey, Andreas Blattmann, Tim Dockhorn, Jonas Müller, Joe Penna, and Robin Rombach. SDXL: Improving latent diffusion models for high-resolution image synthesis. ''arXiv preprint arXiv:2307.01952'', 2023.

* Raffin et al. (2021) Antonin Raffin, Ashley Hill, Adam Gleave, Anssi Kanervisto, Maximilian Ernestus, and Noah Dormann. Stable-baselines3: Reliable reinforcement learning implementations. ''Journal of Machine Learning Research'', 22(268):1–8, 2021. URL: http://jmlr.org/papers/v22/20-1364.html.

* Ramesh et al. (2022) Aditya Ramesh, Prafulla Dhariwal, Alex Nichol, Casey Chu, and Mark Chen. Hierarchical text-conditional image generation with CLIP latents. ''arXiv preprint arXiv:2204.06125'', 2022.

* Rombach et al. (2022) Robin Rombach, Andreas Blattmann, Dominik Lorenz, Patrick Esser, and Björn Ommer. High-resolution image synthesis with latent diffusion models. In ''Proceedings of the IEEE/CVF conference on computer vision and pattern recognition'', pp. 10684–10695, 2022.

* Saharia et al. (2022) Chitwan Saharia, William Chan, Saurabh Saxena, Lala Li, Jay Whang, Emily L Denton, Kamyar Ghasemipour, Raphael Gontijo Lopes, Burcu Karagol Ayan, Tim Salimans, et al. Photorealistic text-to-image diffusion models with deep language understanding. ''Advances in Neural Information Processing Systems'', 35:36479–36494, 2022.

* Salimans & Ho (2022a) Tim Salimans and Jonathan Ho. Progressive distillation for fast sampling of diffusion models. In ''The Tenth International Conference on Learning Representations, ICLR 2022, Virtual Event, April 25-29, 2022''. OpenReview.net, 2022a. URL: https://openreview.net/forum?id=TIdIXIpzhoI.

* Salimans & Ho (2022b) Tim Salimans and Jonathan Ho. Progressive distillation for fast sampling of diffusion models, 2022b. URL: https://arxiv.org/abs/2202.00512.

* Schulman et al. (2017) John Schulman, Filip Wolski, Prafulla Dhariwal, Alec Radford, and Oleg Klimov. Proximal policy optimization algorithms. ''CoRR'', abs/1707.06347, 2017. URL: [http://arxiv.org/abs/1707.06347](http://arxiv.org/abs/1707.06347). | * Schulman et al. (2017) John Schulman, Filip Wolski, Prafulla Dhariwal, Alec Radford, and Oleg Klimov. Proximal policy optimization algorithms. ''CoRR'', abs/1707.06347, 2017. URL: [http://arxiv.org/abs/1707.06347](http://arxiv.org/abs/1707.06347). | ||

* Shazeer & Stern (2018) Noam Shazeer and Mitchell Stern. Adafactor: Adaptive learning rates with sublinear memory cost. ''CoRR'', abs/1804.04235, 2018. URL: [http://arxiv.org/abs/1804.04235](http://arxiv.org/abs/1804.04235). | * Shazeer & Stern (2018) Noam Shazeer and Mitchell Stern. Adafactor: Adaptive learning rates with sublinear memory cost. ''CoRR'', abs/1804.04235, 2018. URL: [http://arxiv.org/abs/1804.04235](http://arxiv.org/abs/1804.04235). | ||

* Shirley & Morley (2008) P. Shirley and R.K. Morley. ''Realistic Ray Tracing, Second Edition''. Taylor & Francis, 2008. ISBN 9781568814612. URL: [https://books.google.ch/books?id=knpN6mnhJ8QC](https://books.google.ch/books?id=knpN6mnhJ8QC). | * Shirley & Morley (2008) P. Shirley and R.K. Morley. ''Realistic Ray Tracing, Second Edition''. Taylor & Francis, 2008. ISBN 9781568814612. URL: [https://books.google.ch/books?id=knpN6mnhJ8QC](https://books.google.ch/books?id=knpN6mnhJ8QC). | ||

* Song et al. (2020) Jiaming Song, Chenlin Meng, and Stefano Ermon. Denoising diffusion implicit models. ''arXiv:2010.02502'', October 2020. URL: [https://arxiv.org/abs/2010.02502](https://arxiv.org/abs/2010.02502). | * Song et al. (2020) Jiaming Song, Chenlin Meng, and Stefano Ermon. Denoising diffusion implicit models. ''arXiv:2010.02502'', October 2020. URL: [https://arxiv.org/abs/2010.02502](https://arxiv.org/abs/2010.02502). | ||

* Song et al. (2022) Jiaming Song, Chenlin Meng, and Stefano Ermon. Denoising diffusion implicit models, 2022. URL: [https://arxiv.org/abs/2010.02502](https://arxiv.org/abs/2010.02502). | * Song et al. (2022) Jiaming Song, Chenlin Meng, and Stefano Ermon. Denoising diffusion implicit models, 2022. URL: [https://arxiv.org/abs/2010.02502](https://arxiv.org/abs/2010.02502). | ||

* Unterthiner et al. (2019) Thomas Unterthiner, Sjoerd van Steenkiste, Karol Kurach, Raphaël Marinier, Marcin Michalski, and Sylvain Gelly. FVD: A new metric for video generation. In ''Deep Generative Models for Highly Structured Data, ICLR 2019 Workshop, New Orleans, Louisiana, United States, May 6, 2019'', 2019. | * Unterthiner et al. (2019) Thomas Unterthiner, Sjoerd van Steenkiste, Karol Kurach, Raphaël Marinier, Marcin Michalski, and Sylvain Gelly. FVD: A new metric for video generation. In ''Deep Generative Models for Highly Structured Data, ICLR 2019 Workshop, New Orleans, Louisiana, United States, May 6, 2019'', 2019. | ||

* Wang et al. (2023) Zhengyi Wang, Cheng Lu, Yikai Wang, Fan Bao, Chongxuan Li, Hang Su, and Jun Zhu. Prolificdreamer: High-fidelity and diverse text-to-3d generation with variational score distillation. ''arXiv preprint arXiv:2305.16213'', 2023. | * Wang et al. (2023) Zhengyi Wang, Cheng Lu, Yikai Wang, Fan Bao, Chongxuan Li, Hang Su, and Jun Zhu. Prolificdreamer: High-fidelity and diverse text-to-3d generation with variational score distillation. ''arXiv preprint arXiv:2305.16213'', 2023. | ||

* Wydmuch et al. (2019) Marek Wydmuch, Michał Kempka, and Wojciech Jaśkowski. ViZDoom Competitions: Playing Doom from Pixels. ''IEEE Transactions on Games'', 11(3):248–259, 2019. doi: [10.1109/TG.2018.2877047](http://dx.doi.org/10.1109/TG.2018.2877047). | * Wydmuch et al. (2019) Marek Wydmuch, Michał Kempka, and Wojciech Jaśkowski. ViZDoom Competitions: Playing Doom from Pixels. ''IEEE Transactions on Games'', 11(3):248–259, 2019. doi: [10.1109/TG.2018.2877047](http://dx.doi.org/10.1109/TG.2018.2877047). | ||

* Yang et al. (2023) Mengjiao Yang, Yilun Du, Kamyar Ghasemipour, Jonathan Tompson, Dale Schuurmans, and Pieter Abbeel. Learning interactive real-world simulators. ''arXiv preprint arXiv:2310.06114'', 2023. | * Yang et al. (2023) Mengjiao Yang, Yilun Du, Kamyar Ghasemipour, Jonathan Tompson, Dale Schuurmans, and Pieter Abbeel. Learning interactive real-world simulators. ''arXiv preprint arXiv:2310.06114'', 2023. | ||

* Yin et al. (2024) Tianwei Yin, Michaël Gharbi, Richard Zhang, Eli Shechtman, Frédo Durand, William T Freeman, and Taesung Park. One-step diffusion with distribution matching distillation. In ''CVPR'', 2024. | * Yin et al. (2024) Tianwei Yin, Michaël Gharbi, Richard Zhang, Eli Shechtman, Frédo Durand, William T Freeman, and Taesung Park. One-step diffusion with distribution matching distillation. In ''CVPR'', 2024. | ||

* Zhang et al. (2018) Richard Zhang, Phillip Isola, Alexei A. Efros, Eli Shechtman, and Oliver Wang. The unreasonable effectiveness of deep features as a perceptual metric. In ''CVPR'', 2018. | * Zhang et al. (2018) Richard Zhang, Phillip Isola, Alexei A. Efros, Eli Shechtman, and Oliver Wang. The unreasonable effectiveness of deep features as a perceptual metric. In ''CVPR'', 2018. | ||

== Appendix A Appendix ==

=== A.1 Samples ===

Figs. [8](https://arxiv.org/html/2408.14837v1#A1.F8), [9](https://arxiv.org/html/2408.14837v1#A1.F9), [10](https://arxiv.org/html/2408.14837v1#A1.F10), [11](https://arxiv.org/html/2408.14837v1#A1.F11) provide selected samples from GameNGen.

[[File:2408.14837.figure.8.png|center|thumb|800x800px|Auto-regressive evaluation of the simulation model: Sample #1. Top row: Context frames. Middle row: Ground truth frames. Bottom row: Model predictions.]]

Latest revision as of 03:11, 9 September 2024

Authors: Dani Valevski (Google Research), Yaniv Leviathan (Google Research), Moab Arar (Tel Aviv University), Shlomi Fruchter (Google DeepMind)

ArXiv link: https://arxiv.org/abs/2408.14837

Project website: https://gamengen.github.io

Abstract

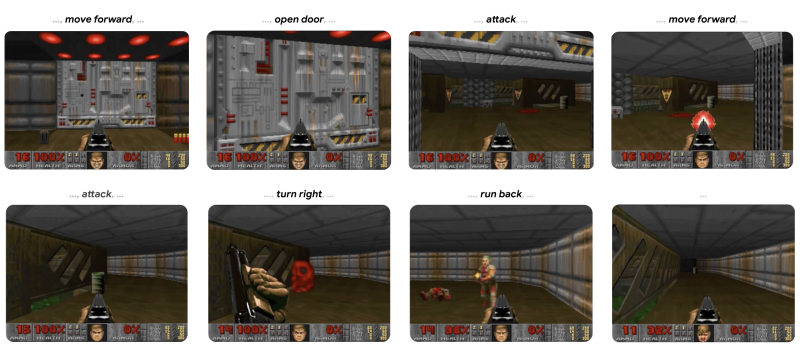

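We present GameNGen, the first game engine powered entirely by a neural model that enables real-time interaction with a complex environment over long trajectories at high quality. GameNGen can interactively simulate the classic game DOOM at over 20 frames per second on a single TPU. Next-frame prediction achieves a PSNR of 29.4, comparable to lossy JPEG compression. Human raters are only slightly better than random chance at distinguishing short clips of the game from clips of the simulation. GameNGen is trained in two phases: (1) an RL agent learns to play the game, and its training sessions are recorded; (2) a diffusion model is trained to produce the next frame, conditioned on the sequence of past frames and actions. Conditioning augmentations enable stable auto-regressive generation over long trajectories.

See https://gamengen.github.io for demo videos.

1 Introduction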

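Computer games are manually crafted software systems centered around the following game loop: (1) gather user inputs, (2) update the game state, and (3) render it to screen pixels. This game loop, running at high frame rates, creates the illusion of an interactive virtual world for the player. Such game loops usually run on standard computers, and while there have been many amazing attempts at running games on bespoke hardware (e.g., the iconic game DOOM has been run on toasters, microwaves, treadmills, cameras, iPods, and even within a game of Minecraft, to name just a few; see https://www.reddit.com/r/itrunsdoom/ ), in all of these cases the hardware is still directly emulating the manually written game software. Furthermore, while game engines vary widely, the game state updates and rendering logic in all of them are composed of a set of manual rules, programmed or configured by hand.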

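In recent years, generative models have made significant progress in producing images and videos conditioned on multi-modal inputs such as text or images. At the forefront of this wave, diffusion models became the de facto standard in non-language media generation, with works such as Dall-E (Ramesh et al., 2022), Stable Diffusion (Rombach et al., 2022) and Sora (Brooks et al., 2024). At a glance, simulating the interactive worlds of video games may seem similar to video generation. However, "interactive" world simulation is more than just very fast video generation. The requirement to condition on a stream of input actions that is only available during generation breaks some assumptions of existing diffusion model architectures. Notably, it requires generating frames auto-regressively, which tends to be unstable and leads to sampling divergence (see Section 3.2.1).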

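Several important works (Ha & Schmidhuber, 2018; Kim et al., 2020; Bruce et al., 2024) (see Section 6) simulate interactive video games with neural models. Nevertheless, most of these approaches are limited in the complexity of the simulated games, simulation speed, stability over long time periods, or visual quality (see Figure 2). It is therefore natural to ask:

Can a neural model running in real-time simulate a complex game at high quality?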

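In this work we demonstrate that the answer is yes. Specifically, we show that a complex video game, the iconic DOOM, can be run in real-time on a neural network (an augmented version of the open Stable Diffusion v1.4 (Rombach et al., 2022)), while achieving a visual quality comparable to that of the original game. While not an exact simulation, the neural model is able to perform complex game state updates, such as tallying health and ammo, attacking enemies, damaging objects, and opening doors, and to persist the game state over long trajectories.

GameNGen answers one of the important questions on the road towards a new paradigm for game engines, one where games are automatically generated, similarly to how images and videos have been generated by neural models in recent years. Key questions remain, such as how these neural game engines would be trained and how games would be effectively created in the first place, including how to best leverage human inputs. We are nevertheless extremely excited about the possibilities of this new paradigm.

2 Interactive World Simulation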

An interactive environment $\mathcal{E}$ consists of a space of latent states $\mathcal{S}$, a space of partial projections of the latent space $\mathcal{O}$, a partial projection function $V: \mathcal{S} \rightarrow \mathcal{O}$, a set of actions $\mathcal{A}$, and a transition probability function $p(s^{\prime} \mid a, s)$ such that $s, s^{\prime} \in \mathcal{S}$ and $a \in \mathcal{A}$.

For example, in the case of the game DOOM, $\mathcal{S}$ is the program's dynamic memory contents, $\mathcal{O}$ is the rendered screen pixels, $V$ is the game's rendering logic, $\mathcal{A}$ is the set of key presses and mouse movements, and $p$ is the program's logic given the player's input (including any potential non-determinism).

Given an input interactive environment $\mathcal{E}$ and an initial state $s_{0} \in \mathcal{S}$, an "interactive world simulation" is a "simulation distribution function" $q(o_{n} \mid \{o_{<n}, a_{\leq n}\})$, with $o_{i} \in \mathcal{O}$ and $a_{i} \in \mathcal{A}$. Given a distance metric between observations $D: \mathcal{O} \times \mathcal{O} \rightarrow \mathbb{R}$, a "policy", i.e., a distribution over agent actions given past actions and observations, $\pi(a_{n} \mid o_{<n}, a_{<n})$, a distribution $S_{0}$ over initial states, and a distribution $N_{0}$ over episode lengths, the goal of interactive world simulation is to minimize $E(D(o_{q}^{i}, o_{p}^{i}))$, where $n \sim N_{0}$, $0 \leq i \leq n$, and $o_{q}^{i} \sim q$, $o_{p}^{i} \sim V(p)$ are observations sampled from the simulation and the environment, respectively, when enacting the agent's policy $\pi$. Importantly, the conditioning actions for these samples are always obtained by the agent interacting with the environment $\mathcal{E}$, while the conditioning observations can be obtained either from $\mathcal{E}$ (the "teacher-forcing objective") or from the simulation (the "auto-regressive objective").

We always train our generative model with the teacher-forcing objective. Given a simulation distribution function $q$, the environment $\mathcal{E}$ can be simulated by auto-regressively sampling observations.
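The two objectives differ only in where the conditioning frames come from. The minimal sketch below assumes a hypothetical `sample_next(frames, actions)` callable standing in for $q$ and a sliding context window (the default of 64 matches the context length given in Section 4.2); the function names are illustrative, not the authors' code.

```python
from collections import deque
from typing import Any, Callable, List, Sequence

Frame = Any   # e.g. an encoded latent; left abstract in this sketch
Action = Any  # e.g. a discrete key-press id

# Hypothetical stand-in for q(o_n | o_<n, a_<=n): receives the recent frames and the
# recent actions (the last one is the action being applied now) and returns a frame.
Sampler = Callable[[List[Frame], List[Action]], Frame]


def teacher_forced(sample_next: Sampler, gt_frames: Sequence[Frame],
                   actions: Sequence[Action], context_len: int = 64) -> List[Frame]:
    """Predict gt_frames[n] from ground-truth frames only (the training-time setup).

    actions[i] is the action taken after gt_frames[i], so frame n uses actions[:n]."""
    preds = []
    for n in range(1, len(gt_frames)):
        lo = max(0, n - context_len)
        preds.append(sample_next(list(gt_frames[lo:n]), list(actions[lo:n])))
    return preds


def autoregressive(sample_next: Sampler, initial_frames: Sequence[Frame],
                   actions: Sequence[Action], context_len: int = 64) -> List[Frame]:
    """Roll out the simulation: every generated frame is fed back as context."""
    frames = deque(initial_frames, maxlen=context_len)
    recent_actions = deque(maxlen=context_len)
    generated = []
    for action in actions:
        recent_actions.append(action)
        next_frame = sample_next(list(frames), list(recent_actions))
        frames.append(next_frame)  # the model's own output becomes future context
        generated.append(next_frame)
    return generated
```

Because `autoregressive` consumes its own outputs, any error it makes is carried into every later step, which is the drift that Section 3.2.1 addresses.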

3 GameNGen

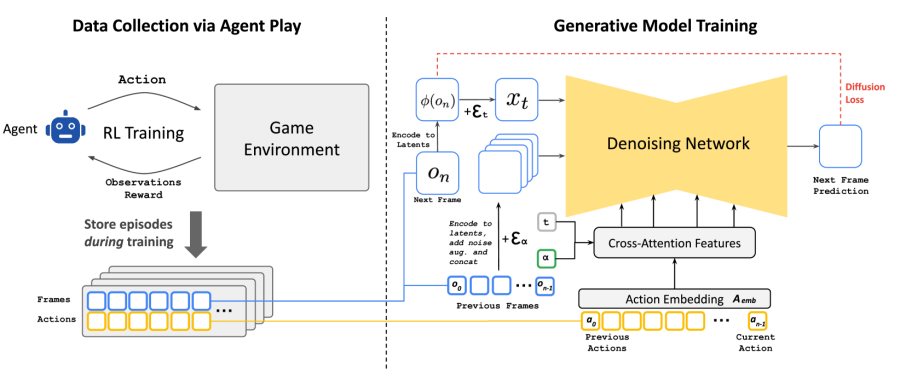

GameNGen (pronounced "game engine") is a generative diffusion model that learns to simulate the game under the settings of Section 2. To collect training data for this model with the teacher-forcing objective, we first train a separate model to interact with the environment. The two models (agent and generative model) are trained in sequence. The entire corpus of the agent's actions and observations during training, $\mathcal{T}_{agent}$, is kept and becomes the training dataset for the generative model in the second stage. See Figure 3.

3.1 Data Collection via Agent Play

Our end goal is to have human players interact with our simulation. To that end, the policy $\pi$ of Section 2 is that of human gameplay. Since we cannot sample from it directly at scale, we start by approximating it by teaching an automatic agent to play. Unlike a typical RL setup, which attempts to maximize the game score, our goal is to generate training data that resembles human play, or at least contains enough diverse examples in a variety of scenarios to maximize training data efficiency. To that end, we design a simple reward function, which is the only environment-specific part of our method (see Appendix A.3).

We record the agent's training trajectories throughout the entire training process, which therefore include play at different skill levels. This set of recorded trajectories is our $\mathcal{T}_{agent}$ dataset, used for training the generative model (see Section 3.2).

3.2 Training the Generative Diffusion Model

We now train a generative diffusion model conditioned on the agent trajectories $\mathcal{T}_{agent}$ (actions and observations) collected during the previous stage.

We re-purpose a pre-trained text-to-image diffusion model, Stable Diffusion v1.4 (Rombach et al., 2022). We condition the model $f_{\theta}$ on trajectories $T \sim \mathcal{T}_{agent}$, i.e., on a sequence of previous actions $a_{<n}$ and observations (frames) $o_{<n}$, and remove all text conditioning. Specifically, to condition on actions, we simply learn an embedding $A_{emb}$ that maps each action (e.g., a specific key press) to a single token, and replace the cross-attention over the text with cross-attention over this encoded action sequence. To condition on observations (i.e., previous frames), we encode them into latent space using the auto-encoder $\phi$ and concatenate them to the noised latents along the latent channel dimension (see Figure 3). We also experimented with conditioning on these past observations via cross-attention but observed no meaningful improvements.
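A minimal stdlib sketch of the two conditioning paths, assuming channels-first latents stored as nested lists: a hypothetical lookup table plays the role of $A_{emb}$ (one token per action), and encoded past frames are stacked onto the noised latent along the channel axis. The table size of 10 actions is made up; the token width of 768 is chosen only because it matches the width of SD v1.4's text embeddings that cross-attention normally consumes.

```python
import random
from typing import List

Channel = List[List[float]]  # H x W
Latent = List[Channel]       # C x H x W (channels-first layout assumed)


def make_action_table(num_actions: int, dim: int, seed: int = 0) -> List[List[float]]:
    """Hypothetical A_emb table: one `dim`-sized vector per discrete action.
    Randomly initialised here; during training these rows would be learned."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 0.02) for _ in range(dim)] for _ in range(num_actions)]


def embed_actions(table: List[List[float]], action_ids: List[int]) -> List[List[float]]:
    """One token per action; this sequence takes the place of the text tokens
    that the U-Net's cross-attention layers would otherwise attend to."""
    return [table[a] for a in action_ids]


def concat_context(noisy: Latent, past: List[Latent]) -> Latent:
    """Stack encoded past frames phi(o_<n) onto the noised latent x_t along the
    channel axis, giving C * (1 + len(past)) input channels."""
    stacked = list(noisy)
    for frame_latent in past:
        stacked.extend(frame_latent)
    return stacked


def zeros(c: int, h: int, w: int) -> Latent:
    return [[[0.0] * w for _ in range(h)] for _ in range(c)]


if __name__ == "__main__":
    table = make_action_table(num_actions=10, dim=768)
    tokens = embed_actions(table, [3, 3, 7])
    x = concat_context(zeros(4, 32, 40), [zeros(4, 32, 40) for _ in range(64)])
    print(len(tokens), len(tokens[0]), len(x))  # 3 768 260
```

Under these assumptions, 4-channel latents with 64 context frames give the U-Net a 4 + 64 × 4 = 260-channel input, so the first convolution has to accept more channels than the pre-trained model's.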

We train the model to minimize the diffusion loss with velocity parameterization (Salimans & Ho, 2022b):

$$\mathcal{L} = \mathbb{E}_{t,\epsilon,T}\left[\left\| v(\epsilon, x_{0}, t) - v_{\theta^{\prime}}\left(x_{t}, t, \{\phi(o_{i<n})\}, \{A_{emb}(a_{i<n})\}\right) \right\|_{2}^{2}\right] \tag{1}$$

where $T = \{o_{i \leq n}, a_{i \leq n}\} \sim \mathcal{T}_{agent}$, $x_{0} = \phi(o_{n})$, $t \sim \mathcal{U}(0,1)$, $\epsilon \sim \mathcal{N}(0, \mathbf{I})$, $x_{t} = \sqrt{\overline{\alpha}_{t}}\,x_{0} + \sqrt{1 - \overline{\alpha}_{t}}\,\epsilon$, $v(\epsilon, x_{0}, t) = \sqrt{\overline{\alpha}_{t}}\,\epsilon - \sqrt{1 - \overline{\alpha}_{t}}\,x_{0}$, and $v_{\theta^{\prime}}$ is the v-prediction output of the model $f_{\theta}$. The noise schedule $\overline{\alpha}_{t}$ is linear, similarly to Rombach et al. (2022).
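To make the definitions above concrete, here is a small stdlib sketch of the forward noising, the $v$ target, and the per-sample squared error inside Eq. (1). It takes $\overline{\alpha}_t$ directly rather than a timestep, and uses flat Python lists in place of latent tensors; these are simplifications for illustration. Because $(\sqrt{\overline{\alpha}_t})^2 + (\sqrt{1-\overline{\alpha}_t})^2 = 1$, $x_0$ and $\epsilon$ can be recovered exactly from $x_t$ and $v$, which is what makes the $v$ output usable for sampling.

```python
import math
from typing import List, Tuple

Vec = List[float]


def forward_and_v_target(x0: Vec, eps: Vec, alpha_bar: float) -> Tuple[Vec, Vec]:
    """x_t = sqrt(a)*x0 + sqrt(1-a)*eps and v = sqrt(a)*eps - sqrt(1-a)*x0, with a = alpha_bar_t."""
    a, s = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    x_t = [a * x + s * e for x, e in zip(x0, eps)]
    v = [a * e - s * x for x, e in zip(x0, eps)]
    return x_t, v


def v_loss(v_target: Vec, v_pred: Vec) -> float:
    """Squared L2 error inside the expectation of Eq. (1), for a single sample."""
    return sum((t - p) ** 2 for t, p in zip(v_target, v_pred))


def x0_and_eps_from_v(x_t: Vec, v: Vec, alpha_bar: float) -> Tuple[Vec, Vec]:
    """Invert the parameterisation: x0 = sqrt(a)*x_t - sqrt(1-a)*v, eps = sqrt(1-a)*x_t + sqrt(a)*v."""
    a, s = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    x0 = [a * xt - s * vv for xt, vv in zip(x_t, v)]
    eps = [s * xt + a * vv for xt, vv in zip(x_t, v)]
    return x0, eps
```

In training, the expectation in Eq. (1) would be approximated by averaging `v_loss` over a batch with freshly sampled $t$, $\epsilon$ and trajectories.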

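3.2.1 Mitigating Auto-Regressive Drift Using Noise Augmentation

As shown in Figure 4, the domain shift between teacher-forcing training and auto-regressive sampling leads to error accumulation and fast degradation in sample quality. To avoid this divergence caused by the auto-regressive application of the model, we corrupt context frames at training time by adding a varying amount of Gaussian noise to the encoded frames, and provide the noise level as an input to the model, following Ho et al. (2021). To that effect, we sample a noise level $\alpha$ uniformly up to a maximal value, discretize it, and learn an embedding for each bucket (see Figure 3). This allows the network to correct information sampled in previous frames, and is critical for preserving frame quality over time. During inference, the added noise level can be controlled to maximize quality, although we find that the results are significantly improved even with no added noise. We ablate the impact of this method in Section 5.2.2.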
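A hedged sketch of the augmentation step, using the maximal level 0.7 and the 10 buckets given in Section 4.2. Two details are assumptions, not taken from the text: a single level is shared by all context frames of one example, and the corruption is plain additive noise `z + level * eps`. The returned bucket id is what would index the learned noise-level embedding.

```python
import random
from typing import List, Optional, Tuple

MAX_NOISE_LEVEL = 0.7  # maximal noise level (Section 4.2)
NUM_BUCKETS = 10       # number of learned noise-level embeddings (Section 4.2)


def noise_bucket(level: float, max_level: float = MAX_NOISE_LEVEL,
                 num_buckets: int = NUM_BUCKETS) -> int:
    """Discretise a level in [0, max_level] into one of `num_buckets` equal-width bins."""
    idx = int(level / max_level * num_buckets)
    return min(max(idx, 0), num_buckets - 1)


def augment_context(context_latents: List[List[float]], rng: random.Random,
                    level: Optional[float] = None) -> Tuple[List[List[float]], int]:
    """Corrupt encoded context frames and return them with the bucket id the model sees.

    ASSUMPTIONS: one level shared by all context frames, noise added as z + level * eps.
    Pass level=0.0 at inference to add no noise."""
    if level is None:
        level = rng.uniform(0.0, MAX_NOISE_LEVEL)  # training: sample the level uniformly
    noisy = [[z + level * rng.gauss(0.0, 1.0) for z in frame] for frame in context_latents]
    return noisy, noise_bucket(level)
```

Feeding the bucket id, rather than hiding the corruption, lets the network learn how much to trust its context, and the same mechanism lets inference pick a fixed level (including zero).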

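3.2.2 Latent Decoder Fine-tuning

The pre-trained auto-encoder of Stable Diffusion v1.4, which compresses 8x8 pixel patches into 4 latent channels, produces noticeable artifacts when predicting game frames, affecting small details and particularly the bottom-bar HUD ("heads-up display"). To leverage the pre-trained knowledge while improving image quality, we train only the decoder of the latent auto-encoder, using an MSE loss computed against the target frame pixels. Using a perceptual loss such as LPIPS (Zhang et al., 2018) might improve quality further; we leave this to future work. Importantly, this fine-tuning process is completely separate from the U-Net fine-tuning, and auto-regressive generation is not affected by it (we condition auto-regressively only on the latents, not on the pixels). Appendix A.2 shows examples of generations with and without fine-tuning the auto-encoder.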
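A short arithmetic sketch of why small HUD details suffer, plus the pixel-space loss used for the decoder. It assumes RGB frames and the 320x256 padded resolution from Section 4.2; the function names are hypothetical. Each latent position has to describe an 8x8x3 = 192-value pixel patch with 4 numbers.

```python
from typing import List, Tuple

PATCH = 8            # SD v1.4 auto-encoder: one latent position per 8x8 pixel patch
LATENT_CHANNELS = 4  # ...described by 4 latent channels


def latent_shape(height: int, width: int) -> Tuple[int, int, int]:
    """(H/8, W/8, 4) latent grid for an H x W RGB frame."""
    if height % PATCH or width % PATCH:
        raise ValueError("frame size must be a multiple of 8")
    return height // PATCH, width // PATCH, LATENT_CHANNELS


def compression_ratio(height: int, width: int, rgb_channels: int = 3) -> float:
    """How many pixel values each latent value has to summarise."""
    h, w, c = latent_shape(height, width)
    return (height * width * rgb_channels) / (h * w * c)


def decoder_mse(decoded_pixels: List[float], target_pixels: List[float]) -> float:
    """Pixel-space MSE used as the decoder fine-tuning loss in this sketch. Only the
    decoder's weights would be updated; the encoder stays frozen, so the latent
    space the U-Net works in is unchanged."""
    return sum((d - t) ** 2 for d, t in zip(decoded_pixels, target_pixels)) / len(target_pixels)
```

For a 256x320 frame this gives a 32x40x4 latent, a 48:1 reduction in the number of values.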

3.3 Inference

3.3.1 Setup

We use DDIM sampling (Song et al., 2022). We employ classifier-free guidance (Ho & Salimans, 2022) only for the past-observations condition $o_{<n}$; we did not find that guidance on the past-actions condition $a_{<n}$ improved quality. The guidance weight we use is relatively small (1.5), because larger weights create artifacts that are amplified by our auto-regressive sampling.
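A minimal sketch of guidance restricted to the observation condition. It assumes a hypothetical denoiser signature `model(x_t, past_frames, past_actions)` in which passing `None` for the frames means "no frame context"; both passes keep the actions, and the standard CFG combination is applied with weight 1.5.

```python
from typing import Any, Callable, List, Optional

Vec = List[float]
# Hypothetical denoiser signature: (x_t, past_frames or None, past_actions) -> v prediction.
Denoiser = Callable[[Vec, Optional[Any], Any], Vec]


def guided_v(model: Denoiser, x_t: Vec, past_frames: Any, past_actions: Any,
             weight: float = 1.5) -> Vec:
    """Classifier-free guidance on the past-observation condition only.

    Both passes see the actions; only the frames are dropped in the second pass,
    mirroring the context-frame dropout used in training."""
    v_cond = model(x_t, past_frames, past_actions)
    v_uncond = model(x_t, None, past_actions)
    return [u + weight * (c - u) for c, u in zip(v_cond, v_uncond)]
```

With `weight=1.0` this reduces to the conditional prediction; values above 1 push further away from the frame-free prediction, which is where the amplified artifacts mentioned above would come from.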

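We also experimented with generating 4 samples in parallel and combining the results, hoping to prevent rare extreme predictions from being accepted and to reduce error accumulation. We tried both averaging the samples and selecting the sample closest to the median. Averaging performed slightly worse than a single frame, and selecting the sample closest to the median performed only marginally better. Since both approaches increase the hardware requirements to 4 TPUs, we opt not to use them, but note that this might be an interesting area for future research.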
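The two combination rules can be sketched as follows over flattened frames. "Closest to the median" is interpreted here as the smallest squared L2 distance to the element-wise median; both the distance and the median definition are assumptions, since the text does not specify them.

```python
import statistics
from typing import List, Sequence

Frame = List[float]  # a flattened frame (pixels or latents)


def average_samples(samples: Sequence[Frame]) -> Frame:
    """Element-wise mean of the candidate frames."""
    return [sum(values) / len(values) for values in zip(*samples)]


def closest_to_median(samples: Sequence[Frame]) -> Frame:
    """Return the candidate with the smallest squared L2 distance to the
    element-wise median of all candidates."""
    median = [statistics.median(values) for values in zip(*samples)]

    def sq_dist(frame: Frame) -> float:
        return sum((a - b) ** 2 for a, b in zip(frame, median))

    return min(samples, key=sq_dist)
```

One structural difference: the median rule always returns one of the actual samples, whereas averaging produces a frame none of the samples contain, which may blur fine detail.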

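3.3.2 Denoiser Sampling Steps

During inference, we need to run the U-Net denoiser (for a number of steps) and the auto-encoder. On our hardware configuration (a TPU-v5), a single denoiser step and an evaluation of the auto-encoder each take 10 ms. If we ran our model with a single denoiser step, the minimum possible total latency in our setup would be 20 ms per frame, or 50 frames per second. Generative diffusion models such as Stable Diffusion usually do not produce high-quality results with a single denoising step, and instead require dozens of sampling steps to generate a high-quality image. Surprisingly, we found that we can robustly simulate DOOM with only 4 DDIM sampling steps (Song et al., 2020). In fact, we observe no degradation in simulation quality when using 4 sampling steps compared to 20 or more steps (see Appendix A.4).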
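A minimal sketch of deterministic DDIM sampling (eta = 0) with a v-prediction model, in plain Python. It assumes a hypothetical `predict_v(x, alpha_bar)` callable in place of the conditioned U-Net and takes the per-step $\overline{\alpha}$ values directly; the four values used in the test are placeholders, not GameNGen's actual timesteps. Each step recovers $\hat{x}_0$ and $\hat{\epsilon}$ from $v$ and re-noises them to the next, less noisy level.

```python
import math
from typing import Callable, List, Sequence

Vec = List[float]


def ddim_sample_v(x_T: Vec, predict_v: Callable[[Vec, float], Vec],
                  alpha_bars: Sequence[float]) -> Vec:
    """Deterministic DDIM with a v-prediction model.

    `alpha_bars` lists the signal level at each sampling step, from noisiest to
    cleanest (4 entries for 4-step sampling). After the last step we jump to
    alpha_bar = 1, i.e. the clean sample."""
    x = list(x_T)
    targets = list(alpha_bars[1:]) + [1.0]
    for ab, ab_next in zip(alpha_bars, targets):
        a, s = math.sqrt(ab), math.sqrt(1.0 - ab)
        v = predict_v(x, ab)
        x0_hat = [a * xi - s * vi for xi, vi in zip(x, v)]   # predicted clean latent
        eps_hat = [s * xi + a * vi for xi, vi in zip(x, v)]  # predicted noise
        a2, s2 = math.sqrt(ab_next), math.sqrt(1.0 - ab_next)
        x = [a2 * x0 + s2 * e for x0, e in zip(x0_hat, eps_hat)]
    return x
```

With a perfect $v$ predictor the loop lands exactly on $x_0$ regardless of the number of steps; with a real model, fewer steps mean fewer chances to correct prediction errors, which is why 4 steps working well is notable.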

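Using just 4 denoising steps leads to a total U-Net cost of 40 ms (50 ms of total inference cost, including the auto-encoder), or 20 frames per second. We hypothesize that the negligible impact on quality of using few steps in our case stems from a combination of (1) a constrained image space and (2) strong conditioning on the previous frames.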

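Since we do observe degradation in quality when using a single sampling step, we also ran model distillation experiments in the single-step setting, similarly to (Yin et al., 2024; Wang et al., 2023). Distillation helps substantially there (allowing us to reach the 50 FPS mentioned above), but still comes at some cost to simulation quality, so we opt to use the 4-step version without distillation for our method (see Appendix A.4). This is an interesting area for further research.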

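We note that the image generation rate could be increased substantially by generating several frames in parallel on additional hardware, similarly to NVidia's classic SLI Alternate Frame Rendering (AFR) technique. As with AFR, however, the actual simulation rate would not increase, and input lag would not decrease.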
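The latency figures above follow from simple arithmetic, sketched here with the 10 ms per-step and per-auto-encoder timings stated for TPU-v5. The AFR helper is a hypothetical illustration of the last paragraph: parallel devices multiply the image rate, while each frame's latency is unchanged.

```python
DENOISER_STEP_MS = 10.0  # one U-Net denoiser step on TPU-v5 (Section 3.3.2)
AUTOENCODER_MS = 10.0    # one auto-encoder evaluation (Section 3.3.2)


def frame_latency_ms(denoise_steps: int) -> float:
    """Time to produce one frame: `denoise_steps` U-Net calls plus one auto-encoder pass."""
    return denoise_steps * DENOISER_STEP_MS + AUTOENCODER_MS


def simulation_fps(denoise_steps: int) -> float:
    return 1000.0 / frame_latency_ms(denoise_steps)


def afr_image_rate(denoise_steps: int, devices: int) -> float:
    """AFR-style parallelism: `devices` frames in flight raise the image rate,
    but each frame still takes frame_latency_ms(), so input lag is unchanged."""
    return devices * simulation_fps(denoise_steps)


if __name__ == "__main__":
    for steps in (1, 4, 20):
        print(steps, frame_latency_ms(steps), round(simulation_fps(steps), 1))
```

This reproduces 20 ms (50 FPS) for one step and 50 ms (20 FPS) for four; at 20 steps the same budget would be 210 ms, or about 4.8 FPS.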

4 Experimental Setup

4.1 Agent Training

The agent model is trained using PPO (Schulman et al., 2017), with a simple CNN as the feature network, following Mnih et al. (2015). It is trained on CPU using the Stable Baselines 3 infrastructure (Raffin et al., 2021). The agent is provided with downscaled versions of the frame images and the in-game map, each at a resolution of 160x120. The agent also has access to the last 32 actions it performed. The feature network computes a representation of size 512 for each image. PPO's actor and critic are 2-layer MLP heads on top of the concatenation of the image feature network's outputs and the sequence of past actions. We train the agent to play the game using the Vizdoom environment (Wydmuch et al., 2019). We run 8 games in parallel, each with a replay buffer size of 512, a discount factor $\gamma = 0.99$, and an entropy coefficient of 0.1. In each iteration, the network is trained with a batch size of 64 for 10 epochs, with a learning rate of 1e-4. We perform a total of 10M environment steps.
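The agent hyperparameters can be collected into a small config, with the derived per-iteration quantities computed from them. Field names are hypothetical, and reading "replay buffer size of 512 per game" as the per-environment rollout length is an assumption.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class AgentPPOConfig:
    """Agent hyperparameters as listed in Section 4.1 (field names are hypothetical)."""
    n_envs: int = 8                  # games run in parallel
    steps_per_env: int = 512         # "replay buffer size of 512" per game (assumed rollout length)
    gamma: float = 0.99              # discount factor
    ent_coef: float = 0.1            # entropy coefficient
    batch_size: int = 64
    n_epochs: int = 10
    learning_rate: float = 1e-4
    total_env_steps: int = 10_000_000
    obs_resolution: Tuple[int, int] = (160, 120)  # downscaled frame and map, each
    action_history: int = 32         # last actions visible to the agent
    feature_dim: int = 512           # CNN representation size per image

    @property
    def rollout_size(self) -> int:
        """Transitions collected per PPO iteration across all games."""
        return self.n_envs * self.steps_per_env

    @property
    def gradient_steps_per_iteration(self) -> int:
        return self.n_epochs * (self.rollout_size // self.batch_size)

    @property
    def num_iterations(self) -> int:
        return self.total_env_steps // self.rollout_size
```

Under that reading, each iteration collects 4,096 transitions and performs 640 minibatch updates, for roughly 2,441 iterations over 10M steps. If the 512 is Stable-Baselines3's per-environment `n_steps`, these values would map onto the `PPO` keyword arguments `n_steps`, `batch_size`, `n_epochs`, `learning_rate`, `gamma` and `ent_coef`, with the eight games passed as a vectorized environment and the custom CNN supplied through `policy_kwargs`.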

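4.2 Generative Model Training

We train all simulation models from a pretrained checkpoint of Stable Diffusion 1.4, unfreezing all U-Net parameters. We use a batch size of 128 and a constant learning rate of 2e-5, with the Adafactor optimizer without weight decay (Shazeer & Stern, 2018) and gradient clipping of 1.0. We change the diffusion loss parameterization to v-prediction (Salimans & Ho, 2022a). The context-frames condition is dropped with probability 0.1 to allow CFG during inference. We train on 128 TPU-v5e devices with data parallelism. Unless noted otherwise, all results in this paper are after 700,000 training steps. For noise augmentation (Section 3.2.1), we use a maximal noise level of 0.7 with 10 embedding buckets. We use a batch size of 2,048 for optimizing the latent decoder; other training parameters are identical to those of the denoiser. For training data, unless noted otherwise, we use all trajectories played by the agent during RL training as well as the evaluation data collected during training. Overall, we generate 900M frames for training. All image frames (during training, inference, and conditioning) are at a resolution of 320x240, padded to 320x256. We use a context length of 64 (i.e., the model is provided with its own last 64 predictions as well as the last 64 actions).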
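A hedged sketch of the denoiser settings as a config, plus two of the data-side steps they imply: padding 240-row frames to 256 rows, and dropping the context-frame condition with probability 0.1 so the same network also learns the unconditional branch that CFG needs. Field and function names are hypothetical; placing the padding at the bottom and representing a dropped condition as `None` are assumptions.

```python
import random
from dataclasses import dataclass
from typing import Any, List, Optional, Tuple


@dataclass(frozen=True)
class DenoiserTrainConfig:
    """Generative-model settings listed in Section 4.2 (field names are hypothetical)."""
    batch_size: int = 128
    learning_rate: float = 2e-5             # constant; Adafactor without weight decay
    grad_clip_norm: float = 1.0
    context_drop_prob: float = 0.1          # enables CFG at inference
    train_steps: int = 700_000
    max_noise_level: float = 0.7
    noise_buckets: int = 10
    decoder_batch_size: int = 2048
    context_length: int = 64
    frame_hw: Tuple[int, int] = (240, 320)   # (height, width) as rendered
    padded_hw: Tuple[int, int] = (256, 320)  # (height, width) fed to the model


def pad_to_height(frame: List[List[float]], height: int, fill: float = 0.0) -> List[List[float]]:
    """Pad an H x W frame with rows of `fill` up to `height`.
    ASSUMPTION: padding is appended at the bottom; the text does not say where it goes."""
    width = len(frame[0])
    return frame + [[fill] * width for _ in range(height - len(frame))]


def maybe_drop_context(context: Any, rng: random.Random, p: float = 0.1) -> Optional[Any]:
    """With probability p, replace the context-frame condition with None (a hypothetical
    'null' condition); the action condition is not touched here."""
    return None if rng.random() < p else context
```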

5 Results

5.1 Simulation Quality

Overall, in terms of image quality, our method achieves a simulation quality comparable to the original game over long trajectories. For short trajectories, human raters are only slightly better than random chance at distinguishing between clips of the simulation and the actual game.

Image Quality. We measure LPIPS (Zhang et al., 2018) and PSNR using the teacher-forcing setup described in Section 2, where we sample an initial state and predict a single frame based on a trajectory of ground-truth past observations. When evaluated over 2048 random trajectories taken from 5 different levels, our model achieves a PSNR of 29.43 and an LPIPS of 0.249. This PSNR value is similar to that of lossy JPEG compression with quality settings of 20-30 (Petric & Milinkovic, 2018). Figure 5 shows examples of model predictions and the corresponding ground-truth samples.
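For reference, PSNR is $10\log_{10}(\mathrm{MAX}^2/\mathrm{MSE})$. The minimal stdlib sketch below works on flattened pixel lists and assumes pixel values scaled to [0, 1]; the text does not state the scale, and this is not the authors' evaluation code.

```python
import math
from typing import Sequence


def psnr(pred: Sequence[float], target: Sequence[float], max_value: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB between two equally sized flattened frames."""
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)
    if mse == 0.0:
        return math.inf  # identical frames
    return 10.0 * math.log10(max_value ** 2 / mse)
```

Read in reverse, a single frame at 29.43 dB on a [0, 1] scale has an MSE of about $10^{-2.943} \approx 0.00114$, i.e. an RMS error of roughly 0.034 (about 8.6 levels out of 255). An average of per-frame PSNRs is not the same as converting an average MSE, so this is only a rough reading of the reported number.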

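Video Quality. We use the auto-regressive setup described in Section 2, where we iteratively sample frames following the sequence of actions defined by the ground-truth trajectory, while conditioning the model on its own past predictions. When sampled auto-regressively, the predicted and ground-truth trajectories often diverge after a few steps, mostly due to the accumulation of small differences in movement velocity between the frames of each trajectory. For that reason, per-frame PSNR values gradually decrease and LPIPS values gradually increase, as can be seen in Figure 6. The predicted trajectory remains similar to the actual game in terms of content and image quality, but per-frame metrics are limited in their ability to capture this (see Appendix A.1 for samples of auto-regressively generated trajectories).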

We therefore measure the FVD (Unterthiner et al., 2019) over 512 random held-out trajectories, i.e., the distance between the distributions of predicted and ground-truth trajectories, for simulations of 16 frames (0.8 seconds) and 32 frames (1.6 seconds). For 16 frames, our model obtains an FVD of 114.02; for 32 frames, it obtains an FVD of 186.23.

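Human Evaluation. As another measurement of simulation quality, we provided 10 human raters with 130 random short clips (of lengths 1.6 seconds and 3.2 seconds) of our simulation side by side with the real game. The raters were tasked with recognizing the real game (see Figure 14 in Appendix A.6). The raters chose the actual game over the simulation in only 58% and 60% of cases for the 1.6-second and 3.2-second clips, respectively.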

5.2 Ablations

To evaluate the importance of the different components of our method, we sample trajectories from the evaluation dataset and compute LPIPS and PSNR metrics between the ground-truth and predicted frames.

5.2.1 Context Length

We evaluate the impact of changing the number $N$ of past observations in the conditioning context by training models with $N \in \{1, 2, 4, 8, 16, 32, 64\}$ (recall that our method uses $N = 64$). This affects both the number of historical frames and the number of historical actions. We train the models for 200,000 steps with the decoder frozen and evaluate on test-set trajectories from 5 levels. See the results in Table 1. As expected, generation quality improves with the length of the context. Interestingly, while the improvement is large at first (e.g., between 1 and 2 frames), it quickly approaches an asymptote, and further increasing the context size provides only small improvements in quality. This is somewhat surprising, as even with our maximal context length the model only has access to a little over 3 seconds of history. Notably, we observe that much of the game state persists for much longer periods (see Section 7). While the length of the conditioning context is an important limitation, Table 1 hints that we would likely need to change the architecture of our model to efficiently support longer contexts, and to employ a better selection of the past frames to condition on, which we leave for future work.

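Table 1: Number of history frames. We ablate the number of history frames used as context, using 8912 test-set examples from 5 levels. More frames generally improve both PSNR and LPIPS.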

| History context length | PSNR ↑ | LPIPS ↓ |
|---|---|---|
| 64 | 22.36 ± 0.033 | 0.295 ± 0.001 |
| 32 | 22.31 ± 0.033 | 0.296 ± 0.001 |
| 16 | 22.28 ± 0.033 | 0.296 ± 0.001 |
| 8 | 22.26 ± 0.033 | 0.296 ± 0.001 |
| 4 | 22.26 ± 0.034 | 0.298 ± 0.001 |
| 2 | 22.03 ± 0.037 | 0.304 ± 0.001 |
| 1 | 20.94 ± 0.044 | 0.358 ± 0.001 |

5.2.2 Noise Augmentation

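To ablate the impact of noise augmentation, we train a model without added noise. We evaluate both our standard noise-augmented model and the model without added noise (each after 200,000 training steps) auto-regressively, and compute PSNR and LPIPS between the predicted frames and the ground-truth frames over a random holdout of 512 trajectories. Figure 7 reports the average metric value at each auto-regressive step, up to 64 frames.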

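Without noise augmentation, the LPIPS distance from the ground truth increases rapidly and PSNR drops, indicating that the simulation drifts away from the ground truth.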
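A minimal sketch of the per-step evaluation described above: PSNR, defined as 10·log10(MAX² / MSE), is computed between predicted and ground-truth frames at each auto-regressive step and then averaged over trajectories. This is an illustration under stated assumptions, not the authors' evaluation code: frames are assumed to be small nested lists of grayscale values in [0, 1], the helper names `psnr` and `mean_psnr_per_step` are hypothetical, and LPIPS is omitted because it requires a pretrained feature network.

```python
import math
from typing import List, Sequence

# Assumption: a frame is an H x W grid of grayscale values in [0, 1].
Frame = Sequence[Sequence[float]]


def psnr(pred: Frame, target: Frame, max_val: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB: 10 * log10(MAX^2 / MSE)."""
    sq_err = [(p - t) ** 2 for pr, tr in zip(pred, target) for p, t in zip(pr, tr)]
    mse = sum(sq_err) / len(sq_err)
    if mse == 0.0:
        return math.inf  # identical frames have unbounded PSNR
    return 10.0 * math.log10(max_val ** 2 / mse)


def mean_psnr_per_step(
    pred_trajs: Sequence[Sequence[Frame]],
    true_trajs: Sequence[Sequence[Frame]],
) -> List[float]:
    """Average PSNR at each auto-regressive step across trajectories.

    pred_trajs[i][k] and true_trajs[i][k] are the k-th frames of trajectory i.
    """
    num_steps = min(len(t) for t in pred_trajs)
    curve = []
    for k in range(num_steps):
        vals = [psnr(p[k], t[k]) for p, t in zip(pred_trajs, true_trajs)]
        curve.append(sum(vals) / len(vals))
    return curve
```

Plotting the returned curve against the step index gives the kind of per-step degradation plot that Figure 7 reports; a model that drifts shows a PSNR curve that falls faster with each step.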

5.2.3 Agent Play

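We compare training on agent-generated data with training on data generated by a random policy. For the random policy, we sample actions from a uniform categorical distribution that does not depend on the observations. For both models and their decoders, we …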
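The random baseline amounts to a uniform categorical policy whose choice of action ignores the observation. A minimal sketch, assuming a hypothetical discrete action set (`ACTIONS` below is illustrative only, not the action space used in the paper) and a hypothetical `RandomPolicy` class:

```python
import random
from typing import Any, Optional, Sequence

# Hypothetical action names, for illustration only.
ACTIONS = ("MOVE_FORWARD", "TURN_LEFT", "TURN_RIGHT", "ATTACK")


class RandomPolicy:
    """Uniform categorical policy that never looks at the observation."""

    def __init__(self, actions: Sequence[str], seed: Optional[int] = None) -> None:
        self.actions = list(actions)
        self.rng = random.Random(seed)  # private generator for reproducible rollouts

    def act(self, observation: Any) -> str:
        # The observation is intentionally unused, so each action
        # has probability 1 / len(self.actions) at every step.
        del observation
        return self.rng.choice(self.actions)
```

Seeding the generator makes data collection reproducible, and because `act` never reads `observation`, two policies with the same seed emit the same action sequence regardless of what they observe.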

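Overall, we observe that training on random trajectories works surprisingly well, but is limited by the exploration ability of the random policy. When comparing single-frame generation, the agent performs only slightly better, with a PSNR of 25.06 versus 24.42 for the random policy. When comparing a frame after 3 seconds of auto-regressive generation, the gap widens to 19.02 versus 16.84. When playing with the models manually, we found that some areas are easy for both, some are hard for both, and in some the agent performs much better. Based on this, we manually split 456 examples into three groups according to their distance from the starting position in the game: easy, medium, and hard. We observe that on the easy and hard sets the agent performs only slightly better than the random policy, while on the medium set, as expected, the agent performs much better (see Table 2). See Figure 13 in Appendix A.5 for the score of a single human play session.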

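Table 2: Performance on different difficulty levels. We compare models trained on agent-generated data and on randomly generated data on the easy, medium, and hard sets. The easy and medium sets contain 112 examples each, and the hard set contains 232 examples. Metrics are computed for each trajectory on a single frame after 3 seconds.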

| Difficulty Level | Data Generation Policy | PSNR ↑ | LPIPS ↓ |
|---|---|---|---|
| Easy | Agent | 20.94 ± 0.76 | 0.48 ± 0.01 |
| | Random | 20.20 ± 0.83 | 0.48 ± 0.01 |
| Medium | Agent | 20.21 ± 0.36 | 0.50 ± 0.01 |
| | Random | 16.50 ± 0.41 | 0.59 ± 0.01 |
| Hard | Agent | 17.51 ± 0.35 | 0.60 ± 0.01 |
| | Random | 15.39 ± 0.43 | 0.61 ± 0.00 |

6 Related Work

Interactive 3D Simulation

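Simulating the visual and physical processes of 2D and 3D environments and allowing their interactive exploration is an extensively developed area in computer graphics (Akenine-Möller et al., 2018). Game engines such as Unreal and Unity are software that process representations of scene geometry and render a stream of images in response to user interactions. The game engine is responsible for keeping track of all world state, for example the player's position and movement, objects, character animation, and lighting. It also tracks the game logic, for example points gained by accomplishing game objectives. Film and television productions use variants of ray tracing (Shirley & Morley, 2008), which are too slow and compute-intensive for real-time applications. In contrast, game engines must maintain a very high frame rate (typically 30-60 FPS) and therefore rely on highly optimized polygon rasterization, often accelerated by GPUs. Physical effects such as shadows, particles, and lighting are usually implemented with efficient heuristics rather than physically accurate simulation.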

Neural 3D Simulation

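Neural methods for reconstructing 3D representations have made significant advances over the last few years. NeRFs (Mildenhall et al., 2020) parameterize radiance fields with a deep neural network that is optimized for one specific scene from a set of images taken from different camera poses. Once trained, novel views of the scene can be sampled with volume rendering. Gaussian Splatting (Kerbl et al., 2023) builds on NeRFs but represents scenes with 3D Gaussians and an adapted rasterization method, enabling faster training and rendering. While these methods demonstrate impressive reconstruction results and real-time interactivity, they are typically limited to static scenes.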
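The volume rendering step mentioned above can be illustrated with the standard NeRF quadrature from Mildenhall et al. (2020): the color of a ray is C ≈ Σ_i T_i (1 - exp(-σ_i δ_i)) c_i, with transmittance T_i = exp(-Σ_{j<i} σ_j δ_j). The sketch below composites a single ray and assumes the per-sample densities σ_i, colors c_i, and spacings δ_i are already given (in a real NeRF they come from the trained network); the function name `render_ray` is hypothetical.

```python
import math
from typing import Sequence, Tuple

RGB = Tuple[float, float, float]


def render_ray(
    sigmas: Sequence[float], colors: Sequence[RGB], deltas: Sequence[float]
) -> RGB:
    """Alpha-composite samples along one ray, front to back.

    sigmas[i]: volume density at sample i
    colors[i]: RGB radiance at sample i
    deltas[i]: distance from sample i to sample i + 1
    """
    transmittance = 1.0  # T_i: fraction of light reaching sample i unoccluded
    out = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(sigmas, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        weight = transmittance * alpha  # T_i * (1 - exp(-sigma_i * delta_i))
        for c in range(3):
            out[c] += weight * color[c]
        transmittance *= 1.0 - alpha  # same as multiplying by exp(-sigma * delta)
    return (out[0], out[1], out[2])
```

A fully opaque first sample hides everything behind it, while a half-transparent one (σδ = ln 2) lets half of the light from later samples through; rendering a full image repeats this for one ray per pixel.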

Video Diffusion Models

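Diffusion models have achieved state-of-the-art results in text-to-image generation (Saharia et al., 2022; Rombach et al., 2022; Ramesh et al., 2022; Podell et al., 2023), and this line of work has also been applied to text-to-video generation (Ho et al., 2022; Blattmann et al., 2023b;a; Gupta et al., 2023; Girdhar et al., 2023; Bar-Tal et al., 2024). Despite impressive advances in realism, text adherence, and temporal consistency, video diffusion models remain too slow for real-time applications. Our work extends this line of research and adapts it to real-time generation conditioned autoregressively on a history of past observations and actions.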
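Conditioning autoregressively means that each generated frame is appended to the context used to generate the next one. A minimal sketch of such a rollout loop, assuming a hypothetical `predict_next` callable that stands in for the diffusion model (any denoising steps would happen inside it) and a fixed-length context window; the function name `rollout` and the exact alignment of frames and actions are illustrative assumptions, not the paper's implementation:

```python
from collections import deque
from typing import Callable, List, Sequence, TypeVar

Frame = TypeVar("Frame")
Action = TypeVar("Action")


def rollout(
    predict_next: Callable[[List[Frame], List[Action]], Frame],
    init_frames: Sequence[Frame],
    init_actions: Sequence[Action],
    future_actions: Sequence[Action],
    context_len: int,
) -> List[Frame]:
    """Generate one frame per incoming action, feeding outputs back as context."""
    frames = deque(init_frames, maxlen=context_len)  # oldest frames fall off
    actions = deque(init_actions, maxlen=context_len)
    generated = []
    for action in future_actions:
        actions.append(action)  # the action is only known at generation time
        next_frame = predict_next(list(frames), list(actions))
        frames.append(next_frame)  # model output becomes part of the next context
        generated.append(next_frame)
    return generated
```

Because the model consumes its own outputs, small prediction errors accumulate across steps, which is the drift that the noise augmentation ablation in Section 5.2.2 measures.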

Game Simulation and World Models

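Several works have attempted to train models for game simulation with action inputs. Yang et al. (2023) build a diverse dataset of real-world and simulated videos and train a diffusion model to predict a continuation video given a previous video segment and a textual description of an action. Menapace et al. (2021) and Bruce et al. (2024) focus on unsupervised learning of actions from videos. Menapace et al. (2024) convert text prompts into game states, which are then converted into a 3D representation using NeRF. Unlike these works, we focus on interactive, playable, real-time simulation, and demonstrate robustness over long-horizon trajectories. We use an RL agent to explore the game environment and create rollouts of observations and interactions for training our interactive game model. Another line of work has explored learning a predictive model of the environment and using it to train an RL agent. Ha & Schmidhuber (2018) train a Variational Auto-Encoder (Kingma & Welling, 2014) to encode game frames into latent vectors, and then use an RNN to mimic the VizDoom game environment, training on random rollouts from a random policy (i.e. selecting actions at random). A controller policy is then learned by playing inside the "hallucinated" environment. Hafner et al. (2020) demonstrate that an RL agent can be trained entirely on episodes generated by a learned world model in latent space. Also close to our work is Kim et al. (2020), who use an LSTM architecture to model the world state, coupled with a convolutional decoder that produces output frames, jointly trained under an adversarial objective. While this approach appears to produce reasonable results for simple games like PacMan, it produces blurry samples when simulating the complex environment of VizDoom. In contrast, GameNGen is able to generate samples comparable to those of the original game; see Figure 2. Finally, concurrently with our work, Alonso et al. (2024) train a diffusion world model that predicts the next observation given the observation history, and iteratively train the world model and an RL model on Atari games.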

DOOM

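DOOM was released in 1993 and revolutionized the gaming industry. Its groundbreaking 3D graphics technology made it a cornerstone of the first-person shooter genre, influencing countless other games. DOOM has been the subject of many research works. It provides an open-source implementation and a native resolution low enough for small models to simulate, while being complex enough to serve as a challenging test case. Finally, the authors have spent countless youth hours playing the game, so using it in this work was an obvious choice.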

7 Discussion

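Summary. We introduced GameNGen and demonstrated that high-quality real-time gameplay at 20 frames per second is possible on a neural model. We also provided a recipe for converting interactive software, such as a computer game, into a neural model.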

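Limitations. GameNGen suffers from limited memory. The model only has access to a little over 3 seconds of history, so it is remarkable that much of the game logic persists over far longer time horizons. While some of the game state is persisted through screen pixels (e.g. ammo and health tallies, available weapons, etc.), the model likely learns strong heuristics that allow meaningful generalizations. For example, from the rendered view the model learns to infer the player's location, and from the ammo and health tallies it might infer whether the player has already passed through an area and defeated the enemies there. That said, it is easy to create situations where this context length is not enough. Further increasing the context size with our existing architecture yields only marginal benefits (Section 5.2.1), and the model's short context length remains an important limitation. The second important limitation is the remaining gap between the agent's behavior and that of human players. For example, even at the end of training, our agent still does not explore all of the game's locations and interactions, which leads to erroneous behavior in those cases.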
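The arithmetic behind "a little over 3 seconds" can be made concrete with a fixed-size context buffer. This sketch assumes a context of 64 frames (the longest setting in the context-length ablation of Section 5.2.1) and the 20 FPS rate quoted in the summary above; the `ContextWindow` class is hypothetical and only illustrates how a bounded buffer forgets everything older than its window.

```python
from collections import deque
from typing import Any, Deque, List, Tuple


class ContextWindow:
    """Keeps only the most recent `length` (frame, action) pairs."""

    def __init__(self, length: int = 64, fps: float = 20.0) -> None:
        self.fps = fps
        self.buffer: Deque[Tuple[Any, Any]] = deque(maxlen=length)

    def push(self, frame: Any, action: Any) -> None:
        # Once the deque is full, appending silently drops the oldest pair.
        self.buffer.append((frame, action))

    def history(self) -> List[Tuple[Any, Any]]:
        return list(self.buffer)

    def seconds_of_memory(self) -> float:
        # 64 frames / 20 FPS = 3.2 seconds of visible history.
        return self.buffer.maxlen / self.fps
```

After 100 pushes only frames 36 through 99 remain, so any state that is not visible somewhere in those 3.2 seconds of pixels must be inferred rather than remembered.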

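Future Work. We demonstrate GameNGen on the classic game DOOM. It would be interesting to test it on other games or, more generally, on other interactive software systems. We note that nothing in our technique is DOOM-specific except for the reward function of the RL agent. We plan to address this in future work. While GameNGen maintains the game state accurately, it is not perfect, as discussed in the limitations above. A more sophisticated architecture might be needed to mitigate these issues. GameNGen currently has limited memory, and experimenting with effectively extending that memory is critical for more complex games and software. GameNGen runs at 20 or 50 FPS on a TPUv5, faster than DOOM originally ran on some of the authors' 80386 machines at the time! It would be interesting to experiment with further optimization techniques to increase frame rates on consumer hardware.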
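The frame rates mentioned in this paper translate directly into per-frame time budgets of 1000 / FPS milliseconds. A small sketch comparing the 30-60 FPS range cited for game engines in Section 6 with the 20 and 50 FPS reported for GameNGen; the function name `frame_budget_ms` and the labels in `RATES` are hypothetical.

```python
def frame_budget_ms(fps: float) -> float:
    """Milliseconds available to produce one frame at the given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


# Frame rates quoted in the text; the labels are illustrative.
RATES = {
    "engine (30 FPS)": 30,
    "engine (60 FPS)": 60,
    "GameNGen (20 FPS)": 20,
    "GameNGen (50 FPS)": 50,
}

if __name__ == "__main__":
    for name, fps in RATES.items():
        print(f"{name:>18}: {frame_budget_ms(fps):.1f} ms per frame")
```

At 20 FPS the whole model, including every denoising step, must finish within 50 ms per frame; at 50 FPS the budget shrinks to 20 ms.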

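Towards a New Paradigm for Interactive Video Games. Today, video games are programmed by humans. GameNGen is a proof of concept for one part of a new paradigm in which games are weights of a neural model rather than lines of code. GameNGen shows that an architecture and model weights exist such that a neural model can effectively run a complex game (DOOM) interactively on existing hardware. While many important questions remain, we are hopeful that this paradigm could bring significant benefits. For example, under this new paradigm the development process for video games might be less costly and more accessible, with games developed and edited through textual descriptions or example images. A small part of this vision, namely modifying existing games or creating new behaviors for them, might be achievable in the near term. For example, we might be able to convert a set of frames into a new playable level, or create a new character from example images alone, without writing code. Other advantages of such a paradigm include strong guarantees on frame rates and memory footprints. We have not yet experimented in these directions and much more work is needed, but we are excited to try! We hope that this small step will someday contribute to a meaningful improvement in people's experience with video games, or, more broadly, in their day-to-day interactions with interactive software systems.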

Acknowledgments

We sincerely thank Eyal Segalis, Eyal Molad, Matan Kalman, Nataniel Ruiz, Amir Hertz, Matan Cohen, Yossi Matias, Yael Pritch, Danny Lumen, Valerie Nygaard, the Theta Labs and Google Research teams, and our families for their insightful feedback, ideas, suggestions, and support.

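Contributions

- Dani Valevski: Developed much of the codebase, tuned parameters and details across the system, and added autoencoder fine-tuning, agent training, and distillation.

- Yaniv Leviathan: Proposed the project, method, and architecture, developed the initial implementation, and was a key contributor to implementation and writing.

- Moab Arar: Led auto-regressive stabilization with noise augmentation, ran many of the ablations, and created the dataset of human-play data.

- Shlomi Fruchter: Proposed the project, method, and architecture. Project leadership, initial implementation using DOOM, main manuscript writing, evaluation metrics, and the random-policy data pipeline.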

Correspondence to shlomif@google.com and leviathan@google.com.

References

- Akenine-Möller et al. (2018) Tomas Akenine-Möller, Eric Haines, and Naty Hoffman. Real-Time Rendering, Fourth Edition. A. K. Peters, Ltd., USA, 4th edition, 2018. ISBN 0134997832.

- Alonso et al. (2024) Eloi Alonso, Adam Jelley, Vincent Micheli, Anssi Kanervisto, Amos Storkey, Tim Pearce, and François Fleuret. Diffusion for world modeling: Visual details matter in Atari, 2024.

- Bar-Tal et al. (2024) Omer Bar-Tal, Hila Chefer, Omer Tov, Charles Herrmann, Roni Paiss, Shiran Zada, Ariel Ephrat, Junhwa Hur, Guanghui Liu, Amit Raj, Yuanzhen Li, Michael Rubinstein, Tomer Michaeli, Oliver Wang, Deqing Sun, Tali Dekel, and Inbar Mosseri. Lumiere: A space-time diffusion model for video generation, 2024. URL: https://arxiv.org/abs/2401.12945.

- Blattmann et al. (2023a) Andreas Blattmann, Tim Dockhorn, Sumith Kulal, Daniel Mendelevitch, Maciej Kilian, Dominik Lorenz, Yam Levi, Zion English, Vikram Voleti, Adam Letts, Varun Jampani, and Robin Rombach. Stable video diffusion: Scaling latent video diffusion models to large datasets, 2023a. URL: https://arxiv.org/abs/2311.15127.

- Blattmann et al. (2023b) Andreas Blattmann, Robin Rombach, Huan Ling, Tim Dockhorn, Seung Wook Kim, Sanja Fidler, and Karsten Kreis. Align your latents: High-resolution video synthesis with latent diffusion models, 2023b. URL: https://arxiv.org/abs/2304.08818.

- Brooks et al. (2024) Tim Brooks, Bill Peebles, Connor Holmes, Will DePue, Yufei Guo, Li Jing, David Schnurr, Joe Taylor, Troy Luhman, Eric Luhman, Clarence Ng, Ricky Wang, and Aditya Ramesh. Video generation models as world simulators, 2024. URL: https://openai.com/research/video-generation-models-as-world-simulators.

- Bruce et al. (2024) Jake Bruce, Michael Dennis, Ashley Edwards, Jack Parker-Holder, Yuge Shi, Edward Hughes, Matthew Lai, Aditi Mavalankar, Richie Steigerwald, Chris Apps, Yusuf Aytar, Sarah Bechtle, Feryal Behbahani, Stephanie Chan, Nicolas Heess, Lucy Gonzalez, Simon Osindero, Sherjil Ozair, Scott Reed, Jingwei Zhang, Konrad Zolna, Jeff Clune, Nando de Freitas, Satinder Singh, and Tim Rocktäschel. Genie: Generative interactive environments, 2024. URL: https://arxiv.org/abs/2402.15391.

- Girdhar et al. (2023) Rohit Girdhar, Mannat Singh, Andrew Brown, Quentin Duval, Samaneh Azadi, Sai Saketh Rambhatla, Akbar Shah, Xi Yin, Devi Parikh, and Ishan Misra. Emu video: Factorizing text-to-video generation by explicit image conditioning, 2023. URL: https://arxiv.org/abs/2311.10709.

- Gupta et al. (2023) Agrim Gupta, Lijun Yu, Kihyuk Sohn, Xiuye Gu, Meera Hahn, Li Fei-Fei, Irfan Essa, Lu Jiang, and José Lezama. Photorealistic video generation with diffusion models, 2023. URL: https://arxiv.org/abs/2312.06662.

- Ha & Schmidhuber (2018) David Ha and Jürgen Schmidhuber. World models, 2018.

- Hafner et al. (2020) Danijar Hafner, Timothy Lillicrap, Jimmy Ba, and Mohammad Norouzi. Dream to control: Learning behaviors by latent imagination, 2020. URL: https://arxiv.org/abs/1912.01603.

- Ho & Salimans (2022) Jonathan Ho and Tim Salimans. Classifier-free diffusion guidance, 2022. URL: https://arxiv.org/abs/2207.12598.

- Ho et al. (2021) Jonathan Ho, Chitwan Saharia, William Chan, David J Fleet, Mohammad Norouzi, and Tim Salimans. Cascaded diffusion models for high fidelity image generation. arXiv preprint arXiv:2106.15282, 2021.

- Ho et al. (2022) Jonathan Ho, William Chan, Chitwan Saharia, Jay Whang, Ruiqi Gao, Alexey A. Gritsenko, Diederik P. Kingma, Ben Poole, Mohammad Norouzi, David J. Fleet, and Tim Salimans. Imagen video: High definition video generation with diffusion models. ArXiv, abs/2210.02303, 2022. URL: https://api.semanticscholar.org/CorpusID:252715883.

- Kerbl et al. (2023) Bernhard Kerbl, Georgios Kopanas, Thomas Leimkühler, and George Drettakis. 3d gaussian splatting for real-time radiance field rendering. ACM Transactions on Graphics, 42(4), July 2023. URL: https://repo-sam.inria.fr/fungraph/3d-gaussian-splatting/.

- Kim et al. (2020) Seung Wook Kim, Yuhao Zhou, Jonah Philion, Antonio Torralba, and Sanja Fidler. Learning to Simulate Dynamic Environments with GameGAN. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Jun. 2020.

- Kingma & Welling (2014) Diederik P. Kingma and Max Welling. Auto-Encoding Variational Bayes. In 2nd International Conference on Learning Representations, ICLR 2014, Banff, AB, Canada, April 14-16, 2014, Conference Track Proceedings, 2014.

- Menapace et al. (2021) Willi Menapace, Stéphane Lathuilière, Sergey Tulyakov, Aliaksandr Siarohin, and Elisa Ricci. Playable video generation, 2021. URL: https://arxiv.org/abs/2101.12195.

- Menapace et al. (2024) Willi Menapace, Aliaksandr Siarohin, Stéphane Lathuilière, Panos Achlioptas, Vladislav Golyanik, Sergey Tulyakov, and Elisa Ricci. Promptable game models: Text-guided game simulation via masked diffusion models. ACM Transactions on Graphics, 43(2):1–16, January 2024. doi: 10.1145/3635705.