Diffusion Models Are Real-Time Game Engines


<languages />

<translate>

<!--T:1-->

<!--

'''Authors:''' Dani Valevski (Google Research), Yaniv Leviathan (Google Research), Moab Arar (Tel Aviv University), Shlomi Fruchter (Google DeepMind)

Revision as of 05:56, 1 September 2024

3 GameNGen

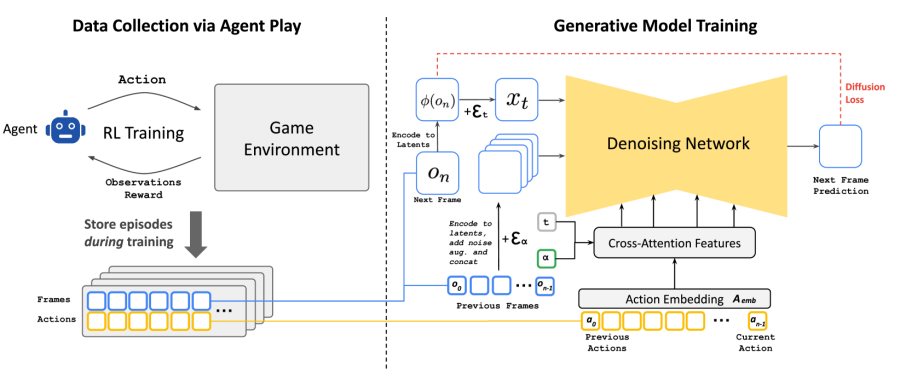

GameNGen (pronounced “game engine”) is a generative diffusion model that learns to simulate the game under the settings of Section 2. To collect training data for this model with the teacher-forcing objective, we first train a separate model to interact with the environment. The two models (agent and generative) are trained in sequence. The full corpus of the agent’s actions and observations during training is kept and becomes the training dataset for the generative model in a second stage. See Figure 3.

3.1 Data Collection via Agent Play

Our end goal is to have human players interact with our simulation. To that end, the policy as in Section 2 is that of human gameplay. Since we cannot sample from that directly at scale, we start by approximating it by teaching an automatic agent to play. Unlike a typical RL setup, which attempts to maximize game score, our goal is to generate training data that resembles human play, or at least contains enough diverse examples, in a variety of scenarios, to maximize training data efficiency. To that end, we design a simple reward function, which is the only part of our method that is environment-specific (see Appendix A.3).

We record the agent’s training trajectories throughout the entire training process, which includes different skill levels of play. This set of recorded trajectories is our dataset, used for training the generative model (see Section 3.2).
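As a rough illustration of how recorded trajectories could be turned into teacher-forced training examples, the sketch below slices one trajectory into (context, target) pairs. The names `Step` and `make_examples`, the string stand-ins for frames, and the short `context_len` are hypothetical and chosen only for illustration; they are not taken from the paper.

```python
from typing import List, NamedTuple, Tuple


class Step(NamedTuple):
    observation: str  # stand-in for a rendered frame
    action: int       # stand-in for a discrete key press


def make_examples(
    trajectory: List[Step], context_len: int
) -> List[Tuple[List[Step], str]]:
    """Pair each target frame with the `context_len` recorded steps before it.

    With teacher forcing, the context always comes from the recorded
    trajectory, never from the model's own predictions.
    """
    examples = []
    for n in range(context_len, len(trajectory)):
        context = trajectory[n - context_len:n]  # past frames and actions
        target = trajectory[n].observation       # frame to be predicted
        examples.append((context, target))
    return examples


# Toy trajectory of six steps (assumed data, for illustration only).
trajectory = [Step(f"frame{i}", i % 3) for i in range(6)]
examples = make_examples(trajectory, context_len=4)
```

Each example exposes only ground-truth history to the model, which is the source of the train/inference mismatch discussed in Section 3.2.1.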

3.2 Training the Generative Diffusion Model

We now train a generative diffusion model conditioned on the agent’s trajectories (actions and observations) collected during the previous stage.

We re-purpose a pre-trained text-to-image diffusion model, Stable Diffusion v1.4 (Rombach et al., 2022). We condition the model on trajectories, i.e., on a sequence of previous actions and observations (frames), and remove all text conditioning. Specifically, to condition on actions, we learn an embedding from each action (e.g., a specific key press) into a single token and replace the cross-attention over the text with cross-attention over this encoded action sequence. To condition on observations (i.e., previous frames), we encode them into latent space using the auto-encoder and concatenate them in the latent channels dimension to the noised latents (see Figure 3). We also experimented with conditioning on these past observations via cross-attention but observed no meaningful improvements.
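To make the channel-wise concatenation concrete, the following sketch works out the U-Net input shape implied by the numbers stated elsewhere in the text (4 latent channels and 8x8 compression from Section 3.2.2; 320x256 padded frames and a context length of 64 from Section 4.2). The function name and the exact tensor layout are assumptions made for illustration, not a description of the authors' implementation.

```python
LATENT_CHANNELS = 4  # latent channels of the Stable Diffusion v1.4 auto-encoder
DOWNSCALE = 8        # each 8x8 pixel patch maps to one latent position


def unet_input_shape(width: int, height: int, context_frames: int):
    """Return (channels, latent_height, latent_width) of the U-Net input.

    Assumes the latents of all context frames are concatenated to the
    noised target latent along the channel dimension.
    """
    latent_h = height // DOWNSCALE
    latent_w = width // DOWNSCALE
    channels = LATENT_CHANNELS * (1 + context_frames)
    return (channels, latent_h, latent_w)


shape = unet_input_shape(width=320, height=256, context_frames=64)
# One embedded token per past action is fed to cross-attention.
num_action_tokens = 64
```

Under these assumptions the noised latent contributes 4 channels and the 64 context frames contribute 256 more, on a 32x40 latent grid.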

We train the model to minimize the diffusion loss with velocity parameterization (Salimans & Ho, 2022b):

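$$\mathcal{L} = \mathbb{E}_{t,\epsilon,T}\left[\left\| v(\epsilon, x_0, t) - v_{\theta'}\left(x_t, t, \{\phi(o_{i<n})\}, \{A_{emb}(a_{i<n})\}\right) \right\|_2^2\right] \qquad (1)$$

where $T = \{o_{i \le n}, a_{i \le n}\} \sim \mathcal{T}_{agent}$, $t \sim \mathcal{U}(0, 1)$, $\epsilon \sim \mathcal{N}(0, I)$, $x_t = \sqrt{\bar{\alpha}_t}\, x_0 + \sqrt{1 - \bar{\alpha}_t}\, \epsilon$, $x_0 = \phi(o_n)$, $v(\epsilon, x_0, t) = \sqrt{\bar{\alpha}_t}\, \epsilon - \sqrt{1 - \bar{\alpha}_t}\, x_0$, and $v_{\theta'}$ is the v-prediction output of the model $f_\theta$. Here $\phi$ denotes the encoder of the auto-encoder and $A_{emb}$ the learned action embedding. The noise schedule is linear, similarly to Rombach et al. (2022).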
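For readers less familiar with the velocity parameterization, the minimal sketch below computes the noised latent and the v target in the standard form of Salimans & Ho, $x_t = \sqrt{\bar{\alpha}_t}\, x_0 + \sqrt{1 - \bar{\alpha}_t}\, \epsilon$ and $v = \sqrt{\bar{\alpha}_t}\, \epsilon - \sqrt{1 - \bar{\alpha}_t}\, x_0$, on plain Python lists. The function names and toy values are illustrative assumptions, not the authors' code; a real implementation would operate on latent tensors and take the prediction from the U-Net.

```python
import math
import random


def v_target(x0, eps, alpha_bar):
    """Return (x_t, v) for clean latent x0, noise eps and cumulative alpha."""
    a = math.sqrt(alpha_bar)
    s = math.sqrt(1.0 - alpha_bar)
    x_t = [a * x + s * e for x, e in zip(x0, eps)]  # noised latent
    v = [a * e - s * x for x, e in zip(x0, eps)]    # velocity target
    return x_t, v


def recover_x0(x_t, v, alpha_bar):
    """Invert the parameterization: x0 = sqrt(ab) * x_t - sqrt(1 - ab) * v."""
    a = math.sqrt(alpha_bar)
    s = math.sqrt(1.0 - alpha_bar)
    return [a * xt - s * vi for xt, vi in zip(x_t, v)]


def v_loss(v_true, v_pred):
    """Mean squared error (squared L2 norm up to a constant factor)."""
    return sum((t - p) ** 2 for t, p in zip(v_true, v_pred)) / len(v_true)


rng = random.Random(0)
x0 = [0.5, -1.0, 2.0]                    # toy "latent" (assumed values)
eps = [rng.gauss(0.0, 1.0) for _ in x0]  # Gaussian noise
x_t, v = v_target(x0, eps, alpha_bar=0.6)
x0_hat = recover_x0(x_t, v, alpha_bar=0.6)
```

The round trip through `recover_x0` shows why predicting $v$ is sufficient: given $x_t$ and $v$, the clean latent follows in closed form.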

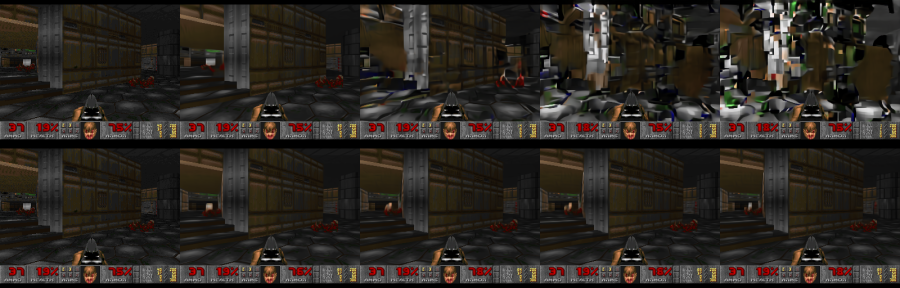

3.2.1 Mitigating Auto-Regressive Drift Using Noise Augmentation

The domain shift between training with teacher-forcing and auto-regressive sampling leads to error accumulation and fast degradation in sample quality, as demonstrated in Figure 4. To avoid this divergence under auto-regressive application of the model, we corrupt context frames by adding a varying amount of Gaussian noise to the encoded frames at training time, while providing the noise level as input to the model, following Ho et al. (2021). To that end, we sample a noise level uniformly up to a maximal value, discretize it, and learn an embedding for each bucket (see Figure 3). This allows the network to correct information sampled in previous frames, and is critical for preserving frame quality over time. During inference, the added noise level can be controlled to maximize quality, although we find that even with no added noise the results are significantly improved. We ablate the impact of this method in Section 5.2.2.
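The noise augmentation step can be sketched as follows, using the maximal noise level (0.7) and bucket count (10) reported in Section 4.2. How exactly the noise is scaled and how bucket boundaries are chosen is not specified in the text, so the additive form with standard deviation equal to the noise level and the equal-width buckets below are assumptions, as are all function names.

```python
import random

MAX_NOISE_LEVEL = 0.7  # maximal noise level (Section 4.2)
NUM_BUCKETS = 10       # number of learned noise-level embeddings (Section 4.2)


def sample_noise_level(rng: random.Random) -> float:
    """Sample a noise level uniformly in [0, MAX_NOISE_LEVEL]."""
    return rng.uniform(0.0, MAX_NOISE_LEVEL)


def bucket_index(level: float) -> int:
    """Discretize a noise level into one of NUM_BUCKETS equal-width buckets."""
    idx = int(level / MAX_NOISE_LEVEL * NUM_BUCKETS)
    return min(idx, NUM_BUCKETS - 1)  # the top edge falls in the last bucket


def corrupt_latent(latent, level: float, rng: random.Random):
    """Assumed form: add Gaussian noise with standard deviation `level`."""
    return [z + level * rng.gauss(0.0, 1.0) for z in latent]


rng = random.Random(0)
level = sample_noise_level(rng)
noisy_context = corrupt_latent([0.1, -0.4, 0.9], level, rng)
embedding_id = bucket_index(level)  # index into the noise-level embedding table
```

At inference time the same interface allows passing `level = 0.0`, which leaves the context latents unchanged and selects the first bucket.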

3.2.2 Latent Decoder Fine-tuning

The pre-trained auto-encoder of Stable Diffusion v1.4, which compresses 8x8 pixel patches into 4 latent channels, produces noticeable artifacts when predicting game frames, which affect small details and particularly the bottom bar HUD (“heads up display”). To leverage the pre-trained knowledge while improving image quality, we train just the decoder of the latent auto-encoder using an MSE loss computed against the target frame pixels. It might be possible to improve quality even further using a perceptual loss such as LPIPS (Zhang et al., 2018), which we leave to future work. Importantly, this fine-tuning happens completely separately from the U-Net fine-tuning, and the auto-regressive generation isn’t affected by it (we only condition auto-regressively on the latents, not the pixels). Appendix A.2 shows examples of generations with and without fine-tuning the auto-encoder.

3.3 Inference

3.3.1 Setup

We use DDIM sampling (Song et al., 2022). We employ Classifier-Free Guidance (Ho & Salimans, 2022) only for the past observations condition. We didn’t find guidance for the past actions condition to improve quality. The weight we use is relatively small (1.5), as larger weights create artifacts that compound under our auto-regressive sampling.
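As a brief illustration, one common way to write classifier-free guidance combines a prediction made with the context frames and one made with the context dropped. The sketch below uses that common form with the weight of 1.5 mentioned above; whether the paper uses exactly this convention (rather than, e.g., a `1 + w` variant) is an assumption, and the function name is hypothetical.

```python
def guided_prediction(uncond, cond, weight=1.5):
    """Common CFG form: uncond + weight * (cond - uncond).

    `cond` is the model output given the past frames, `uncond` the output
    with the frame context dropped. A weight of 1.0 recovers `cond`.
    """
    return [u + weight * (c - u) for u, c in zip(uncond, cond)]


# Toy per-element predictions (assumed values, for illustration only).
uncond = [0.0, 2.0]
cond = [1.0, 4.0]
guided = guided_prediction(uncond, cond)
```

Because each generated frame becomes context for the next one, any overshoot introduced by a large weight is fed back into the model, which is consistent with the preference for a small weight.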

We also experimented with generating 4 samples in parallel and combining the results, in the hope of preventing rare extreme predictions from being accepted and reducing error accumulation. We experimented both with averaging the samples and with choosing the sample closest to the median. Averaging performed slightly worse than generating a single sample, and choosing the sample closest to the median performed only negligibly better. Since both increase the hardware requirements to 4 TPUs, we opt not to use them, but note that this might be an interesting area for future work.
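The two combination strategies can be sketched on toy vectors as follows. The text does not say how the median or the distance is computed, so the element-wise median and squared Euclidean distance used here are one plausible reading, and the function names are hypothetical.

```python
from statistics import median


def average_samples(samples):
    """Element-wise mean of the candidate samples."""
    return [sum(vals) / len(vals) for vals in zip(*samples)]


def closest_to_median(samples):
    """Index of the sample nearest to the element-wise median.

    Distance is squared Euclidean; both choices are assumptions.
    """
    med = [median(vals) for vals in zip(*samples)]

    def sq_dist(sample):
        return sum((x - m) ** 2 for x, m in zip(sample, med))

    return min(range(len(samples)), key=lambda i: sq_dist(samples[i]))


# Four toy "frames"; the last one plays the role of a rare extreme prediction.
samples = [[0.0, 5.0], [1.0, 1.0], [3.0, 2.0], [9.0, 9.0]]
mean_frame = average_samples(samples)
chosen = closest_to_median(samples)
```

Averaging lets the outlier pull the result toward it, whereas median selection always returns one of the actually generated samples.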

3.3.2 Denoiser Sampling Steps

During inference, we need to run both the U-Net denoiser (for a number of steps) and the auto-encoder. On our hardware configuration (a TPU-v5), a single denoiser step and an evaluation of the auto-encoder each take 10ms. If we ran our model with a single denoiser step, the minimum total latency possible in our setup would be 20ms per frame, or 50 frames per second. Usually, generative diffusion models such as Stable Diffusion don’t produce high-quality results with a single denoising step, and instead require dozens of sampling steps to generate a high-quality image. Surprisingly, we found that we can robustly simulate DOOM with only 4 DDIM sampling steps (Song et al., 2020). In fact, we observe no degradation in simulation quality when using 4 sampling steps vs 20 steps or more (see Appendix A.4).

Using just 4 denoising steps leads to a total U-Net cost of 40ms (and a total inference cost of 50ms, including the auto-encoder), or 20 frames per second. We hypothesize that the negligible impact on quality with few steps in our case stems from a combination of: (1) a constrained image space, and (2) strong conditioning by the previous frames.
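The latency figures above follow from simple arithmetic, reproduced in the short sketch below. It assumes, as stated in the text, 10ms per denoiser step and 10ms for one auto-encoder evaluation, and that these are the only per-frame costs; the function names are illustrative.

```python
DENOISER_STEP_MS = 10  # one U-Net denoising step
AUTOENCODER_MS = 10    # one auto-encoder evaluation


def frame_latency_ms(denoising_steps: int) -> int:
    """Total per-frame latency for a given number of denoising steps."""
    return denoising_steps * DENOISER_STEP_MS + AUTOENCODER_MS


def frames_per_second(denoising_steps: int) -> float:
    """Frame rate implied by the per-frame latency."""
    return 1000 / frame_latency_ms(denoising_steps)


latency_1, fps_1 = frame_latency_ms(1), frames_per_second(1)
latency_4, fps_4 = frame_latency_ms(4), frames_per_second(4)
latency_20 = frame_latency_ms(20)
```

With these costs, 20 sampling steps would take 210ms per frame, under 5 frames per second, which is why the absence of quality loss at 4 steps matters for real-time use.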

Since we do observe degradation when using just a single sampling step, we also experimented with model distillation, similar to Yin et al. (2024) and Wang et al. (2023), in the single-step setting. Distillation does help substantially there (allowing us to reach 50 FPS as above), but still comes at some cost to simulation quality, so we opt to use the 4-step version without distillation for our method (see Appendix A.4). This is an interesting area for further research.

We note that it is trivial to further increase the image generation rate substantially by parallelizing the generation of several frames on additional hardware, similarly to NVIDIA’s classic SLI Alternate Frame Rendering (AFR) technique. As with AFR, the actual simulation rate would not increase and input lag would not decrease.

4 Experimental Setup

4.1 Agent Training

The agent model is trained using PPO (Schulman et al., 2017), with a simple CNN as the feature network, following Mnih et al. (2015). It is trained on CPU using the Stable Baselines 3 infrastructure (Raffin et al., 2021). The agent is provided with downscaled versions of the frame images and in-game map, each at resolution 160x120. The agent also has access to the last 32 actions it performed. The feature network computes a representation of size 512 for each image. PPO’s actor and critic are 2-layer MLP heads on top of a concatenation of the outputs of the image feature network and the sequence of past actions. We train the agent to play the game using the Vizdoom environment (Wydmuch et al., 2019). We run 8 games in parallel, each with a replay buffer size of 512, a discount factor , and an entropy coefficient of . In each iteration, the network is trained using a batch size of 64 for 10 epochs, with a learning rate of 1e-4. We perform a total of 10M environment steps.

4.2 Generative Model Training

We train all simulation models from a pretrained checkpoint of Stable Diffusion 1.4, unfreezing all U-Net parameters. We use a batch size of 128 and a constant learning rate of 2e-5, with the Adafactor optimizer without weight decay (Shazeer & Stern, 2018) and gradient clipping of 1.0. We change the diffusion loss parameterization to be v-prediction (Salimans & Ho, 2022a). The context frames condition is dropped with probability 0.1 to allow CFG during inference. We train using 128 TPU-v5e devices with data parallelism. Unless noted otherwise, all results in the paper are after 700,000 training steps. For noise augmentation (Section 3.2.1), we use a maximal noise level of 0.7, with 10 embedding buckets. We use a batch size of 2,048 for optimizing the latent decoder; other training parameters are identical to those of the denoiser. For training data, we use all trajectories played by the agent during RL training as well as evaluation data during training, unless mentioned otherwise. Overall, we generate 900M frames for training. All image frames (during training, inference, and conditioning) are at a resolution of 320x240 padded to 320x256. We use a context length of 64 (i.e., the model is provided its own last 64 predictions as well as the last 64 actions).
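A few derived quantities help put these hyperparameters in perspective. The sketch below only multiplies and divides the numbers stated above; it assumes that each element of a batch corresponds to one target frame (with its context), which the text does not state explicitly, and the constant names are illustrative.

```python
BATCH_SIZE = 128              # denoiser training batch size
TRAIN_STEPS = 700_000         # training steps for reported results
NUM_DEVICES = 128             # TPU-v5e devices, data parallel
DATASET_FRAMES = 900_000_000  # frames generated for training

# Assumption: one batch element = one target frame plus its context.
examples_seen = BATCH_SIZE * TRAIN_STEPS
per_device_batch = BATCH_SIZE // NUM_DEVICES
fraction_as_targets = examples_seen / DATASET_FRAMES
```

Under that assumption, about 89.6M frames serve as prediction targets during training, roughly a tenth of the 900M generated frames, with one example per device per step.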